Microsoft uses an internally written service called MSAsset to manage all Microsoft data center hardware around the world. MSAsset is used for tracking Microsoft’s servers, switches, storage devices, and cables across the company and requires 24/7 availability to accommodate break-fix requirements.

Before migrating to Azure SQL Database last year, MSAsset’s data tier consisted of a 107 GB database with 245 tables on SQL Server. The database was part of a SQL Server Always On Availability Group topology used for high availability and the scaling out of read-activity.

The MSAsset engineering team faced the following issues:

◈ Aging hardware was not keeping up with stability and scale requirements.

◈ There was an increase in high severity data-tier incidents and no database administrator on staff to help with troubleshooting, mitigation, root cause analysis and ongoing maintenance.

◈ MSAsset’s database ran on SQL Server 2012. Developers and internal customers were increasingly requesting access to new SQL Server functionality.

After exploring various options and weighing several factors, the MSAsset engineering team decided that Azure SQL Database was the appropriate data tier for their future investment and would address all of their key pain points. With the move to Azure SQL Database, they gained increased scalability, built-in manageability and access to the latest features.

With 24/7 availability requirements, the engineering team needed to find a way to migrate from SQL Server to Azure SQL Database without incurring downtime for read-only activity. MSAsset is a read-heavy service, with a much smaller percent of transactions involving data modifications. Using a phased approach, they were able to move to Azure SQL Database with zero downtime for read-only traffic and less than two hours of down time for read-write activity. This case study will briefly describe how this was accomplished.

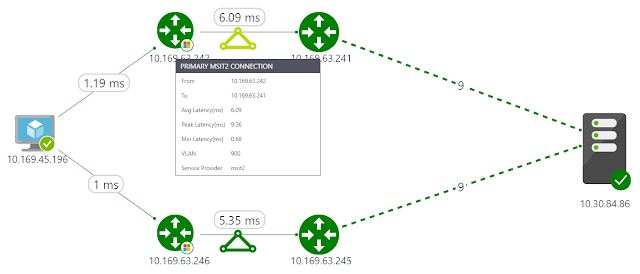

The original MSAsset application architecture consisted of a web tier with read-write access to the primary database located on a SQL Server 2012 instance. The database was contained within an Always On Availability Group with one synchronous read-only secondary replica and three read-only asynchronous secondary replicas. The application used an availability group listener to direct incoming write traffic to the primary replica. To accommodate the substantial amount of read-only reporting traffic, a proprietary load balancer was used to direct requests across the read-only secondary replicas using a round-robin algorithm.

Before migrating to Azure SQL Database last year, MSAsset’s data tier consisted of a 107 GB database with 245 tables on SQL Server. The database was part of a SQL Server Always On Availability Group topology used for high availability and the scaling out of read-activity.

The MSAsset engineering team faced the following issues:

◈ Aging hardware was not keeping up with stability and scale requirements.

◈ There was an increase in high severity data-tier incidents and no database administrator on staff to help with troubleshooting, mitigation, root cause analysis and ongoing maintenance.

◈ MSAsset’s database ran on SQL Server 2012. Developers and internal customers were increasingly requesting access to new SQL Server functionality.

After exploring various options and weighing several factors, the MSAsset engineering team decided that Azure SQL Database was the appropriate data tier for their future investment and would address all of their key pain points. With the move to Azure SQL Database, they gained increased scalability, built-in manageability and access to the latest features.

With 24/7 availability requirements, the engineering team needed to find a way to migrate from SQL Server to Azure SQL Database without incurring downtime for read-only activity. MSAsset is a read-heavy service, with a much smaller percent of transactions involving data modifications. Using a phased approach, they were able to move to Azure SQL Database with zero downtime for read-only traffic and less than two hours of down time for read-write activity. This case study will briefly describe how this was accomplished.

The original MSAsset architecture

The original MSAsset application architecture consisted of a web tier with read-write access to the primary database located on a SQL Server 2012 instance. The database was contained within an Always On Availability Group with one synchronous read-only secondary replica and three read-only asynchronous secondary replicas. The application used an availability group listener to direct incoming write traffic to the primary replica. To accommodate the substantial amount of read-only reporting traffic, a proprietary load balancer was used to direct requests across the read-only secondary replicas using a round-robin algorithm.

When planning for a move to Azure SQL Database as with the legacy SQL Server solution, the proposed new solution needed to accommodate one read-write database and depending on the final migrated workload volume and associated Azure SQL Database resource consumption one or more read-only replicas.

Using a phased migration approach

The MSAsset engineering team used a phased incremental approach for moving from SQL Server to Azure SQL Database. This incremental approach helped reduce the risk of project failure and allowed the team to learn and adapt to the inevitable unexpected variables that arise with complex application migrations.

The migration phases were as follows:

1. Configure hybrid SQL Server and Azure SQL Database read-only activity, while keeping all read-write activity resident on the legacy SQL Server database.

◈ Set up transactional replication from SQL Server to Azure SQL Database, for use in accommodating read-only activity.

◈ Monitor the replication topology for stability, performance, and convergence issues.

◈ As needed, create up to four active geo-replication readable secondary databases in the same region to accommodate read-only traffic scale requirements.

◈ Once it is confirmed the topology is stable for a sustained period of time, use load-balancing to direct read-only activity to Azure SQL Database, beginning with 25 percent of the read-only traffic. Over a period of weeks, increase to 50 percent, and then 75 percent. For load balancing, the MSAsset engineering team uses a proprietary application-layer library.

◈ Along the way, use Query Performance Insight to monitor overall resource consumption and top queries by CPU, duration, execution count. MSAsset also monitored application metrics, including API latencies and error rates.

◈ Adjust the Azure SQL Database service tiers and performance levels as necessary.

◈ Move or redirect any high-resource consuming unnecessary legacy traffic to bulk access endpoints.

2. After stabilizing in the prior phase of 75 percent read-only activity on Azure SQL Database, move 100 percent of the read-only traffic to Azure SQL Database.

◈ Again, use Query Performance Insight to monitor overall resource consumption and top queries by CPU, duration, execution count. Adjust the Azure SQL Database service tiers and performance levels as necessary and create up to four active geo-replication readable secondary databases in the same region to accommodate read-only traffic.

3. Prior to the final cut-over to Azure SQL Database, develop and fully test a complete rollback plan. The MSAsset team used SQL Server Data Tools (SSDT) data comparison functionality to collect the delta between Azure SQL Database and a four day old backup and then applied the delta to the SQL Server database.

4. Lastly, move all read-write traffic to Azure SQL Database. In MSAsset’s case, in preparation for the final read-write cutover they reseeded, via transactional replication a new database in Azure SQL Database for read-write activity moving forward. Steps they followed:

5. After the full reseeding, wait for remaining transactions on SQL Server to drain before removing the transactional replication topology.

6. Change the web front-end configuration to use the Azure SQL Database primary database for all read-write activity. Use read-only replicas for read-only traffic.

7. After a full business cycle of monitoring, de-commission the SQL Server environment.

This phased approach allowed the MSAsset team to incur no downtime for read-only activity and also helped them minimize risk, allowing enough time to learn and adapt to any unexpected findings without having to revert to the original environment.

The final MSAsset architecture uses one read-write Azure SQL Database replica and four active geo-replication readable secondary databases.

The remaining sections will talk about key aspects and lessons learned from the migration effort.

Creating a read-only Azure SQL Database using Transactional Replication

The first phase involved setting up transactional replication from SQL Server to Azure SQL Database, ensuring a stable replication topology with no introduced performance or convergence issues.

The MSAsset engineering team used the following process for setting up transactional replication:

◈ They first reviewed the existing SQL Server database against the requirements for replication to Azure SQL Database. These requirements are detailed in the Replication to SQL Database documentation. For example, a small number of the legacy tables for MSAsset did not have a primary key, and so a primary key had to be added in order to be supported for transactional replication. Some of the tables were no longer being used, and so it was an opportunity to clean up stale objects and associated code.

◈ Since the MSAsset publication was hosted on an Always On Availability Group, the MSAsset team followed the steps detailed here for configuration transactional replication: Configure Replication for Always On Availability Groups (SQL Server).

Once transactional replication was configured and fully synchronized, read-only traffic was first directed to both SQL Server and Azure SQL Database with read-write activity continuing to go just against the SQL Server-resident database.

The read-only traffic against Azure SQL Database was incrementally increased over time to 25 percent, 50 percent, and 75 percent, with careful monitoring along the way to ensure sufficient query performance and DTU availability. The MSAsset team used a proprietary load balancing application library to distribute load across the various read-only databases. Once stabilized at 75 percent, the MSAsset team moved 100 percent of read-only activity to Azure SQL Database and continued with the other phases described earlier.

Cleanup opportunities

The MSAsset team also used this as an opportunity to clean up rogue reporting processes. This included in-house Microsoft reporting tools and applications that, while being permitted to access the database had other data warehouse options that were more appropriate for ongoing use than MSAsset. When encountering rogue processes, the MSAsset team reached out to the owners and had them re-route to appropriate data stores. Disused code and objects, when encountered, were also removed.

Redesigning around compatibility issues

The MSAsset team discovered two areas that required re-engineering prior to migration to Azure SQL Database:

◈ Change Data Capture (CDC) was used for tracking data modifications on SQL Server. This process was replaced with a solution that leverages temporal tables instead.

◈ SQL Server Agent Jobs were used for executing custom T-SQL scheduled jobs on SQL Server. All SQL Server Agent Jobs were replaced with Azure worker roles that invoked equivalent stored procedures instead.

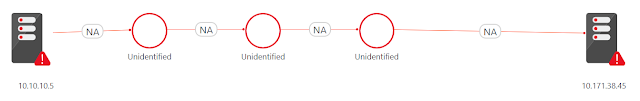

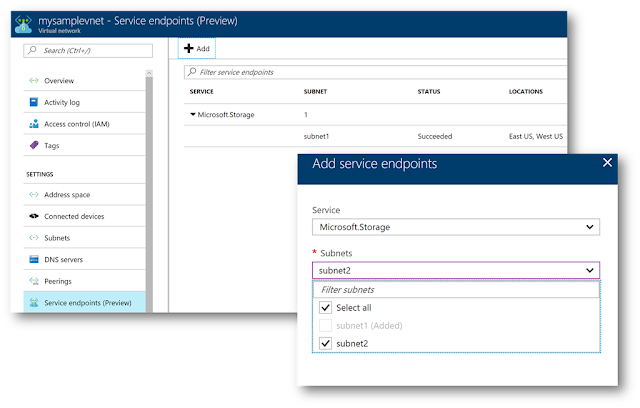

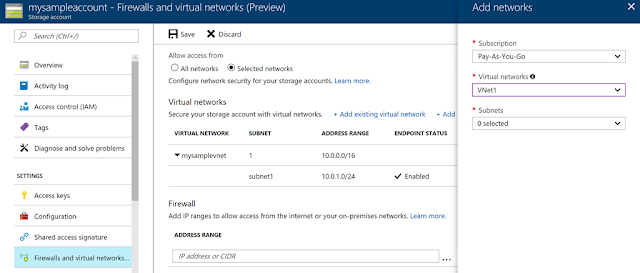

Understanding networking and connectivity with Azure SQL Database

With an array of services requiring access to MSAsset’s data tier, the engineering team had to familiarize themselves with Azure SQL Database networking and connectivity requirements as well as fundamentals. Having this background was a critical aspect of the overall effort and should be a core focus area of any migration plan to Azure SQL Database.

Modernizing the platform and unlocking cloud scalability

The original MSAsset SQL Server hardware was powerful, but old. Before moving to Azure SQL Database, the MSAsset engineering considered replacing the servers. But they were concerned about the projected cost and ability for the hardware to keep up with MSAsset’s projected workload growth over the next five years. The MSAsset engineering team was also concerned about keeping up with the latest SQL Server versions and having access to the latest features.

Moving to Azure SQL Database means that the MSAsset team can scale resources much more easily and no longer have to worry about outgrowing their existing hardware. They can also now access new features as they become available in Azure SQL Database without having to explicitly upgrade. They are also now able to leverage built-in capabilities unique to Azure SQL Database like Threat Detection and Query Performance Insight.

Reducing high severity issues and database management overhead

The MSAsset engineering team has no database administrator on staff, so coupled with the support of old hardware and standard DBA maintenance requirements, these factors were a major contributor to increasingly frequent high severity incidents.

Moving to Azure SQL Database, the MSAsset team no longer worries about ongoing database server patching, backups, or complex high availability and disaster recovery topology configuration. Since moving to Azure SQL Database, the MSAsset engineering team has seen an 80 percent reduction in high severity issues for their data tier.