Managing IT costs is critical during this time of economic uncertainty. The global pandemic is challenging organizations across the globe to reinvent business strategies and make operations more effective and productive. Faster than ever, you’ll need to find ways to increase efficiencies and optimize costs across your IT organizations.

When it comes to cloud cost optimization, organizations typically divide responsibilities between central IT departments and distributed workload teams. Central IT departments manage overall cloud strategy and governance, setting and auditing corporate policies for cost management. In compliance with central IT policy, workload teams across the organization assume end-to-end ownership for cloud applications they’ve built, including cost management.

In this new normal, workload owners face a double challenge: you and your teams are taking on new cost responsibilities daily, all while continuously adapting to working in a cloud environment. We created the Microsoft Azure Well-Architected Framework to help you design, build, deploy, and manage successful cloud workloads across five key pillars: security, reliability, performance efficiency, operational excellence, and cost optimization. While we’re focusing solely on cost optimization here, we’ll soon be addressing best practices for balancing your organization’s priorities against the other four pillars of the framework in order to deploy high-quality, well-architected workloads.

So how can the Azure Well-Architected Framework help you, as a workload owner, optimize your workload costs?

The four stages of cost optimization

The Azure Well-Architected Framework offers comprehensive guidance for cost optimization across four stages: design, provision, monitor, and optimize.

The design stage covers the initial planning of your workload’s architecture and cost model, including:

◉ Capturing clear requirements.

◉ Estimating initial costs.

◉ Understanding your organization’s policies and constraints.

Once your design stage is complete, you move into the provision stage, where you choose and deploy the resources that will make up your workload. There are many considerations and tradeoffs impacting cost at this stage, like which services to choose and which SKUs and regions to select.

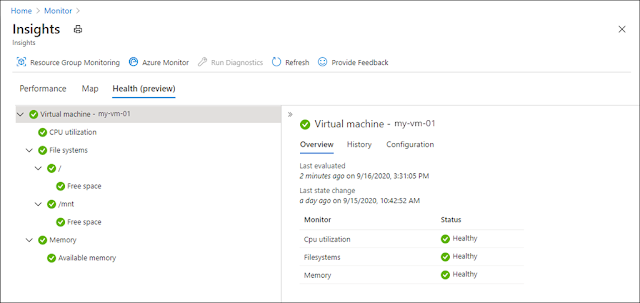

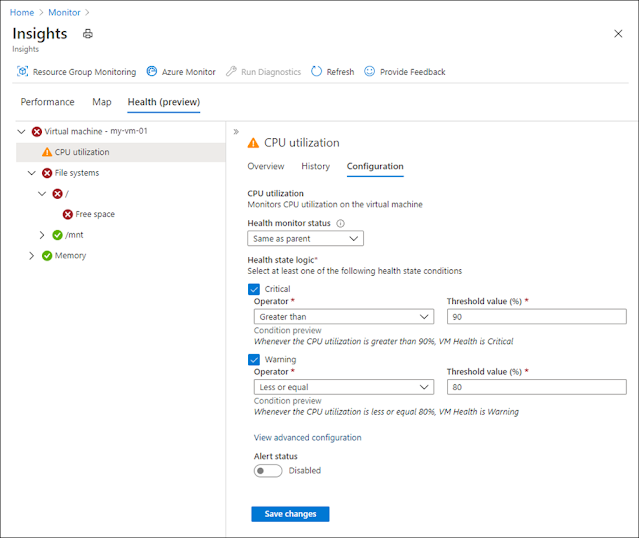

After you provision your resources, the next stage, monitor, is about keeping a close watch on your deployed workload, how it’s being used, and your overall spend. This includes activities like:

◉ Building spending reports based on tags.

◉ Conducting regular cost reviews with your team.

◉ Responding to alerts, for example, when you’re approaching a certain spending limit or have detected an anomaly in spending.
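
To make the tag-based report concrete, here is a minimal sketch in Python. It assumes you have already exported cost data into simple records. The field names (`resource`, `tags`, `cost`), the sample values, and the `cost_by_tag` helper are all hypothetical and are not part of any Azure API.

```python
from collections import defaultdict

# Hypothetical cost records, for example rows exported from a billing report.
# The field names and amounts are illustrative assumptions, not an Azure schema.
cost_records = [
    {"resource": "vm-web-01", "tags": {"team": "storefront", "env": "prod"}, "cost": 120.0},
    {"resource": "vm-web-02", "tags": {"team": "storefront", "env": "dev"}, "cost": 40.0},
    {"resource": "sql-orders", "tags": {"team": "orders", "env": "prod"}, "cost": 200.0},
    {"resource": "disk-orphan", "tags": {}, "cost": 15.0},
]


def cost_by_tag(records, tag_key, untagged_label="(untagged)"):
    """Sum cost per value of one tag key; untagged resources get their own bucket."""
    totals = defaultdict(float)
    for record in records:
        value = record["tags"].get(tag_key, untagged_label)
        totals[value] += record["cost"]
    return dict(totals)


# Print the report with the most expensive tag value first.
report = cost_by_tag(cost_records, "team")
for team, total in sorted(report.items(), key=lambda item: item[1], reverse=True):
    print(f"{team:<12} {total:>8.2f}")
```

Keeping an explicit bucket for untagged resources is a deliberate choice in this sketch: spend that nobody has claimed is often the first thing worth raising in a cost review.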

Finally, you move to the last stage, optimize, where you’ll make your workload more efficient through techniques like:

◉ Resizing underutilized resources.

◉ Using reserved instances for long-running, consistent workloads.

◉ Taking advantage of licensing offers for cost benefits.

◉ Re-evaluating your initial design choices, for example, your storage tier and data store.

The initial design and provision stages generally apply to new workloads you’re planning to develop. The last two, monitor and optimize, contain guidance primarily geared towards workloads you’ve already deployed and are running in the cloud.

If you’re like most workload owners at our customers right now, you’re probably wondering: what can I do that will have the biggest cost impact on my existing workloads?

High-impact techniques to cost optimize your existing workloads

While each cost optimization stage contributes significantly to overall cost efficiency, as a workload owner undertaking a cost optimization initiative, you’ll probably first want to look into the latter two stages of the Azure Well-Architected Framework for your existing workloads: monitor and optimize. We provide several tools to help you with these stages. First, there’s the Microsoft Azure Well-Architected Review, which you can use to assess your workloads across the five pillars of the Well-Architected Framework, including cost optimization. The Well-Architected Review provides a holistic view of cost optimization for your deployed workloads, along with actionable recommendations for improving them. Additionally, tools like Azure Advisor and Azure Cost Management and Billing provide you with cost analysis and optimization guidance to help you achieve the cost efficiency you need.

By using these tools and referencing the framework itself, you’ll find many monitoring and optimization opportunities for your existing workloads. They tend to fall into four high-impact categories: increasing cost awareness, eliminating cloud waste, taking advantage of licensing offers, and modernizing your workload architecture.

Increasing cost awareness

For you as a customer, this means improving your cost visibility and accountability. Increasing cost awareness starts with implementing workload budgets and operational practices to enforce those budgets, such as:

◉ Tags to break down costs by tag value, so you can pull reports easily.

◉ Alerts to notify you (the budget owner) when you’re approaching certain spending thresholds.

◉ Regular reviews to strengthen your team’s culture of cost management.

While it might not immediately reduce your cloud bill, increasing cost awareness is a necessary foundation: it provides critical insights that you’ll rely on later.
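
The alerting practice above can be sketched as a simple threshold check. This is only an illustration of the idea, not how Azure Cost Management evaluates budgets. The `crossed_thresholds` function and the 50/80/100 percent thresholds are assumptions chosen for the example.

```python
def crossed_thresholds(spend_to_date, budget, thresholds=(0.5, 0.8, 1.0)):
    """Return the budget fractions that current spend has reached or passed.

    The default thresholds (50%, 80%, 100%) are illustrative assumptions.
    """
    if budget <= 0:
        raise ValueError("budget must be positive")
    used = spend_to_date / budget
    return [t for t in sorted(thresholds) if used >= t]


# Hypothetical month-to-date spend against a hypothetical monthly budget.
for threshold in crossed_thresholds(spend_to_date=850.0, budget=1000.0):
    print(f"Alert: spend has reached {threshold:.0%} of the budget")
```

In practice, you would notify the budget owner only the first time each threshold is crossed, which requires remembering which alerts have already been sent.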

Eliminating cloud waste

Here is where you really start to see cost efficiencies and cost avoidance, through cost optimization techniques like:

◉ Shutting down your unused resources.

◉ Right-sizing under-utilized resources.

◉ Using autoscaling and auto-shutdown for resource flexibility and scalability.

For many customers, the most immediate financial impact will come from eliminating cloud waste.
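
As a rough sketch of how you might triage resources for waste, the Python example below classifies virtual machines by average CPU utilization. The sample data, the `classify` helper, and the 2 percent and 20 percent cutoffs are hypothetical. A real review would also consider memory, disk, and network usage, and would rely on the recommendations from tools like Azure Advisor.

```python
from statistics import mean

# Hypothetical CPU utilization samples (percent), for example hourly averages.
cpu_samples = {
    "vm-web-01": [62, 71, 58, 66],
    "vm-batch-01": [4, 6, 3, 5],
    "vm-idle-01": [0, 1, 0, 0],
}


def classify(samples, idle_below=2.0, underused_below=20.0):
    """Label a resource from its average CPU; the cutoffs are illustrative assumptions."""
    average = mean(samples)  # raises StatisticsError if there are no samples
    if average < idle_below:
        return "shut down candidate"
    if average < underused_below:
        return "right-size candidate"
    return "keep"


for name, samples in cpu_samples.items():
    print(f"{name:<12} avg CPU {mean(samples):5.1f}%  ->  {classify(samples)}")
```

Treat the output as a list of candidates to investigate rather than a decision: a resource with low average CPU may still be sized for short peaks.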

Taking advantage of licensing offers

Azure has several licensing offers that can provide significant cost benefits for your workloads:

◉ The Azure Hybrid Benefit allows you to bring your on-premises Windows Server and SQL Server licenses with active Software Assurance, as well as your Linux subscriptions, to Azure and save.

◉ Azure Reservations let you pay less than the pay-as-you-go rate when you commit to using Azure resources over a longer period.

Licensing offers can contribute substantially to reducing cloud costs, and often represent a great opportunity for collaborations between central IT and workload teams.
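
To see why reservations suit long-running, consistent workloads, it helps to work through the arithmetic. The sketch below uses made-up hourly rates, not actual Azure prices, and a simplified model in which the reservation is paid for every hour of the term whether or not the resource runs. Both function names are hypothetical.

```python
HOURS_PER_YEAR = 8760  # 365 days * 24 hours


def reservation_savings(hourly_payg, hourly_reserved, hours_used, hours_in_term):
    """Pay-as-you-go cost minus reservation cost; a negative result means the reservation costs more.

    Simplifying assumption: the reservation is billed for the whole term,
    while pay-as-you-go is billed only for the hours actually used.
    """
    payg_cost = hourly_payg * hours_used
    reserved_cost = hourly_reserved * hours_in_term
    return payg_cost - reserved_cost


def break_even_utilization(hourly_payg, hourly_reserved):
    """Fraction of the term a resource must run for the reservation to pay off."""
    return hourly_reserved / hourly_payg


# Hypothetical rates for illustration only.
payg_rate, reserved_rate = 0.20, 0.12

print(f"Break-even utilization: {break_even_utilization(payg_rate, reserved_rate):.0%}")
for hours in (HOURS_PER_YEAR, 4000):
    savings = reservation_savings(payg_rate, reserved_rate, hours, HOURS_PER_YEAR)
    print(f"{hours} hours used: savings of {savings:.2f}")
```

Under these assumed rates, a workload that runs all year benefits from the reservation, while one that runs for less than the break-even share of the term would cost more reserved than on pay-as-you-go.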

Modernizing your workload architecture

While you might be hesitant to revisit your workload’s architecture, modernizing your applications using the latest services and cloud-native design can drastically increase your cost efficiency. For example, you might want to:

◉ Revisit your initial architectural and design decisions. Look for more cost-efficient ways to accomplish your objectives, for example by changing your data store or storage tier.

◉ Assess the types of Azure services you are using. Explore whether other SKUs or other types of services, such as PaaS or serverless, might offer you cost benefits while still meeting your workload needs.

Source: microsoft.com