Starting March 1, 2022, customers will have unrestricted access to a new Azure region in North China. It brings the number of Azure regions in the China market to five and doubles the capacity of Microsoft’s intelligent cloud portfolio in China.

Announced in 2012 and officially launched in March 2014 with two initial regions, Microsoft Azure operated by 21Vianet was the first international public cloud service to become generally available in the China market. Following Azure, Microsoft Office 365, Microsoft Dynamics 365, and Microsoft Power Platform operated by 21Vianet launched in China in 2014, 2019, and 2020, respectively.

“We see fast-growing needs for global public cloud services in the China market from multinational companies coming to China, from Chinese companies seeking a global presence, and from Chinese companies digitally transforming their businesses and processes in the cloud. That’s the strong momentum that has driven us to keep expanding and upgrading our cloud services here in China for almost eight years,” said Dr. Hou Yang, Microsoft Corporate Vice President, Chairman and CEO of Microsoft Greater China Region (GCR). “Microsoft’s intelligent cloud, the most comprehensive approach to security in the world, has been empowering hundreds of thousands of developers, partners, and customers from China and around the world to achieve more through technical innovation and business transformation. The new Azure region will further reinforce Microsoft’s capabilities to enable innovation and growth, and to uncover opportunities across business, the ecosystem, and a sustainable future.”

Cloud for innovation

According to an IDC report, China has become the world’s fastest-growing public cloud market, with a year-on-year growth rate of 49.7 percent, and the China market’s global share is expected to exceed 10.5 percent by 2024. The rapid development of China’s digital economy demands advanced technologies and services such as Microsoft Azure to support emerging digital innovation and industrial digital transformation.

With the availability of the new Azure region, Microsoft will better empower customers and partners from China and around the world to harness the opportunities of China’s digital development, with capabilities spanning hybrid and multi-cloud deployment, IoT, edge computing, data intelligence, and more.

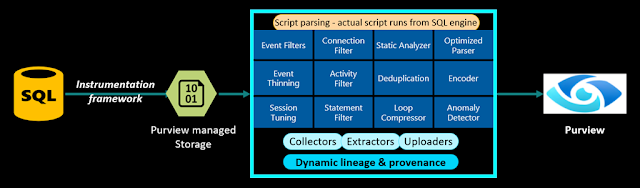

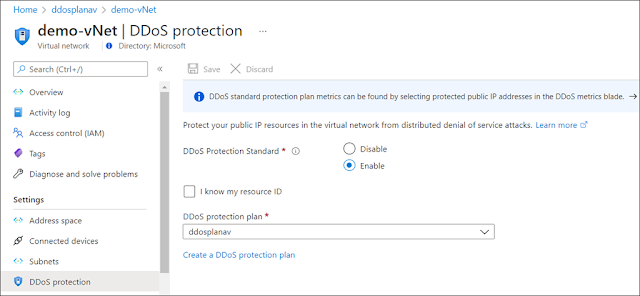

Along with the new Azure region launch, a set of new cloud innovation capabilities will become available in China in 2022, including:

◉ Azure availability zones: customers get an industry-leading 99.99 percent SLA when VMs run in two or more availability zones, along with the most comprehensive resiliency strategy for protecting against large-scale events through failover to separate regions.

◉ Azure Digital Twins: an IoT capability that enables customers to create “digital twins” of physical objects in the cloud.

◉ Azure Arc: helps customers manage data and applications across hybrid and multi-cloud environments.

◉ Flexible Server deployment option for Azure Database for MySQL: provides maximum control over an organization’s databases, high availability options to help ensure zero data loss, built-in capabilities for cost optimization, and increased productivity enabled by the Azure ecosystem.

◉ Azure Purview: a unified data governance solution that helps organizations manage and govern their on-premises, multi-cloud, and software-as-a-service (SaaS) data.

“As Microsoft’s global partner, we’ve been working closely together to develop and deploy digital solutions for industries,” said Jin Jia, Managing Director and Lead of Technology in Accenture Greater China. “With the new Azure region and services coming to China, we will further enable end-to-end transformations by delivering a broad range of Azure services across infrastructure, platform, data, IoT, and cognitive computing.”

Cloud for sustainability

Cloud computing delivers efficiencies at massive scale that reduce the collective carbon footprint required to support the world’s computing needs. As Microsoft Cloud scales its computing power, we’re also pursuing breakthrough technologies to incorporate sustainability into datacenter design and operations. Microsoft Cloud’s latest advanced development initiatives include:

◉ Reducing water use in datacenters: a new approach to datacenter temperature management will further reduce the amount of water used in our evaporatively cooled datacenters.

◉ Research in liquid immersion cooling, toward waterless cooling options: Microsoft became the first cloud provider to run two-phase liquid immersion cooling in a production environment. The power and cooling efficiencies that liquid cooling unlocks open up new potential for datacenter rack design.

◉ Datacenter design to support local ecosystems: Microsoft benchmarked ecosystem performance in terms of water, air, carbon, climate, biodiversity, and more across twelve datacenter regions. The goal is to renew and revitalize the surrounding areas and create a pathway to regenerative value for local communities and the environment.

◉ Cutting carbon footprint in datacenter design and construction: embodied carbon accounts for the emissions associated with materials and construction processes throughout the whole lifecycle of a building or infrastructure. We use a tool called the Embodied Carbon in Construction Calculator (EC3) to select building materials and reduce the embodied carbon of concrete and steel by 30–60 percent.

Some of these initiatives will be gradually adopted in the new Azure region in China.

In addition to these investments in cloud infrastructure, Microsoft Cloud for Sustainability is now available in preview globally. It allows organizations to more effectively record, report, and reduce their carbon emissions on the path to net zero, and provides a common foundation for measuring carbon emissions in an accurate, consistent, and reliable manner worldwide. Both multinational and Chinese companies can now use this offering on global Azure to measure their sustainability efforts.

At the 2021 China International Import Expo (CIIE), SGS China announced S-Carbon, the first carbon management platform to support both global and China standards, built on Microsoft Azure. Dr. Sandy Hao, Managing Director of SGS China, said, “SGS’s expertise in carbon emissions, combined with Microsoft’s world-leading cloud platform, will enable more Chinese companies to accelerate the implementation of their sustainable development strategies.”

“We are pleased to be a Net Zero Technology Partner to Microsoft, jointly enabling customers with a full suite of end-to-end carbon neutrality solutions to support their green transition and ambitions,” said Michael Ding, Global Executive Director of Envision Group, Microsoft’s global net-zero partner.

“We are partnering with Microsoft to conceive, build and manage smart and sustainable buildings in China and worldwide,” said Michael Zhu, Vice President and General Manager, Building Solutions, China, Johnson Controls. “Our OpenBlue digital platform, closely connected with Microsoft’s cloud platform and workplace technologies, represents an unbeatable opportunity to help our customers make modern spaces safer, more agile, and more sustainable.”

“DELTA’s Energy Management System (EMS) is built on the Microsoft Azure platform, complete with IoT, machine learning, and data insights from Power BI,” said Kevin Tan, General Manager of Delta GreenTech (China) Co., Ltd. “By cooperating with Microsoft, we help customers effectively monitor, manage, and reduce energy consumption to achieve a green factory.”

Cloud with trust and compliance

The Microsoft Cloud comprises more than 200 physical datacenters across more than 34 markets. It serves over one billion customers and over 20 million companies worldwide, and 95 percent of Fortune 500 businesses run on Microsoft cloud services. With over 90 compliance certifications globally, Microsoft’s cloud platform meets a broad range of industry and regulatory standards in China, Europe, the US, and many other markets. In China, Microsoft Azure operated by 21Vianet has also obtained a number of local compliance certifications.

Microsoft Azure was the first international public cloud service to launch in the China market in compliance with local regulations. In accordance with Chinese regulatory requirements, Azure regions operated by 21Vianet in China are instances physically separated from Microsoft’s global cloud, but they are built on the same cloud technology as the regions Microsoft operates globally.

The consistent architecture across China and global markets makes it easy, efficient, and secure for multinational companies to roll out their IT systems and business applications to China or vice versa.

Source: microsoft.com