Thursday 30 March 2023

Enhanced Azure Arc integration with Datadog simplifies hybrid and multicloud observability

Wednesday 29 March 2023

Powerful Microsoft MB-910 Exam Preparation Strategies Everyone Should Know

Overview of Microsoft Dynamics 365 Fundamentals (CRM) MB-910 Exam

This certification is designed for people who want to prove that they have expertise in, and practical experience with, the customer engagement applications of Microsoft Dynamics 365.

To improve their chances of earning this certification, candidates should ideally have prior experience in IT, customer engagement, and business operations.

The Microsoft Dynamics 365 Fundamentals (CRM) exam, also known as MB-910, consists of 40 to 60 questions to be answered in 60 minutes. Question types include case studies, short answers, multiple choice, mark review, drag and drop, and more. To pass, you must score at least 700 out of a possible 1000 points. The exam costs $99 USD, plus applicable taxes. It is available only in English, Japanese, Chinese (Simplified), Korean, French, Spanish, Portuguese (Brazil), Russian, Arabic (Saudi Arabia), Chinese (Traditional), Italian, and German.

Target Audience

Individuals at different stages of their careers and in a variety of roles can benefit from this fundamentals-level course. Here are some examples:

How to Prepare for the Microsoft MB-910 Exam?

Preparation is crucial to passing any exam. Even if you have extensive experience, it pays to familiarize yourself with the content, characteristics, and strategies of the specific test you intend to take. If you are planning to take the Microsoft Dynamics 365 Fundamentals (CRM) MB-910 exam, the tips in this article will help you get ready. Let’s take a closer look at them.

Benefits of Taking the MB-910 Practice Test

Taking MB-910 practice tests is like sowing a seed: it eventually lets you reap the benefits of achieving your desired score and earning the Microsoft Certified: Dynamics 365 Fundamentals (CRM) certification. When investing time and effort in something, it is natural to wonder about the benefits. So, what exactly can you gain from taking MB-910 practice tests?

Besides helping you pinpoint your strengths and weaknesses, MB-910 practice tests give you a more accurate sense of what the actual exam questions look like. This only works if you take one or two practice tests regularly, because unless you have a photographic memory, a single attempt is rarely enough to become familiar with the format.

Another advantage of taking MB-910 practice tests is that the results help you build a personalized, effective study schedule aimed at a realistic score. As mentioned earlier, practice tests also give you an idea of the format of the actual exam, so you can approach the real exam more confidently because you already know its structure.

Conclusion

If you follow the MB-910 preparation strategies above, you are well placed to achieve a good result. You can make your preparation even more effective by taking practice tests and using the feedback you receive to keep improving. Keep in mind that knowing the strategies is not enough; you have to put them into practice, and doing so demonstrates your commitment to passing the exam. So start applying these strategies and see how well they work for you!

Tuesday 28 March 2023

Monitor Azure Virtual Network Manager changes with event logging

What attributes are part of this event log category?

| Attribute | Description |
| --- | --- |
| time | Datetime when the event was logged. |
| resourceId | Resource ID of the network manager. |
| location | Location of the virtual network resource. |
| operationName | Operation that resulted in the virtual network being added or removed. Always the “Microsoft.Network/virtualNetworks/networkGroupMembership/write” operation. |
| category | Category of this log. Always “NetworkGroupMembershipChange”. |
| resultType | Indicates whether the operation succeeded or failed. |
| correlationId | GUID that can help relate or debug logs. |
| level | Always “Info”. |
| properties | Collection of properties of the log. |
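To show how the top-level attributes above might be consumed, here is a minimal Python sketch that filters exported log records down to the “NetworkGroupMembershipChange” category and buckets them by correlationId for debugging. The attribute names and the category value come from the table; everything else is an assumption: it assumes records arrive as newline-delimited JSON objects (for example, lines read from an exported log file), and the names `MembershipChangeEvent`, `parse_membership_events`, and `group_by_correlation` are hypothetical helpers, not part of any Azure SDK.

```python
import json
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, Iterable, List

# Literal category value documented in the attribute table.
MEMBERSHIP_CATEGORY = "NetworkGroupMembershipChange"


@dataclass
class MembershipChangeEvent:
    time: str                # when the event was logged
    network_manager_id: str  # resourceId refers to the network manager, not the VNet
    location: str            # location of the virtual network resource
    result_type: str         # success/failure indicator, kept as the raw string
    correlation_id: str      # GUID used to relate entries
    properties: dict = field(default_factory=dict)


def parse_membership_events(lines: Iterable[str]) -> List[MembershipChangeEvent]:
    """Parse newline-delimited JSON records (assumed export layout) and keep
    only network group membership change events."""
    events = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # tolerate blank lines between records
        record = json.loads(line)
        if record.get("category") != MEMBERSHIP_CATEGORY:
            continue  # ignore other log categories
        events.append(
            MembershipChangeEvent(
                time=record["time"],
                network_manager_id=record["resourceId"],
                location=record.get("location", ""),
                result_type=record.get("resultType", ""),
                correlation_id=record.get("correlationId", ""),
                properties=record.get("properties", {}),
            )
        )
    return events


def group_by_correlation(
    events: Iterable[MembershipChangeEvent],
) -> Dict[str, List[MembershipChangeEvent]]:
    """Bucket events by correlationId so related entries can be inspected together."""
    groups: Dict[str, List[MembershipChangeEvent]] = defaultdict(list)
    for event in events:
        groups[event.correlation_id].append(event)
    return dict(groups)
```

The result type is deliberately kept as a raw string rather than mapped to a boolean, since this sketch does not assume the exact success and failure values the service emits.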

| Properties attribute | Description |
| --- | --- |
| Message | Basic success or failure message. |
| MembershipId | Default membership ID of the virtual network. |
| GroupMemberships | Collection of what network groups the virtual network belongs to. There may be multiple “NetworkGroupId” and “Sources” listed within this property since a virtual network can belong to multiple network groups simultaneously. |
| MemberResourceId | Resource ID of the virtual network that was added to or removed from a network group. |

| GroupMemberships attribute | Description |
| --- | --- |
| NetworkGroupId | ID of a network group the virtual network belongs to. |
| Sources | Collection of how the virtual network is a member of the network group. |

Within the Sources attribute are several nested attributes:

| Sources attribute | Description |
| --- | --- |
| Type | Denotes whether the virtual network was added manually (“StaticMembership”) or conditionally via Azure Policy (“Policy”). |
| StaticMemberId | If the “Type” value is “StaticMembership”, this property will appear. |
| PolicyAssignmentId | If the “Type” value is “Policy”, this property will appear. ID of the Azure Policy assignment that associates the Azure Policy definition to the network group. |
| PolicyDefinitionId | If the “Type” value is “Policy”, this property will appear. ID of the Azure Policy definition that contains the conditions for the network group’s membership. |
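Because a virtual network can belong to several network groups, and each membership can have several sources, the nested properties are easiest to work with once flattened. The Python sketch below walks properties → GroupMemberships → Sources and emits one row per source, distinguishing “StaticMembership” from “Policy” entries. The field names come from the tables above; the assumptions are that GroupMemberships and Sources serialize as JSON arrays and that properties may arrive either as an object or as a JSON-encoded string. `MembershipSource`, `list_membership_sources`, and `groups_joined_via_policy` are hypothetical names used only for illustration.

```python
import json
from typing import Any, Dict, List, NamedTuple, Union


class MembershipSource(NamedTuple):
    network_group_id: str
    source_type: str           # "StaticMembership" or "Policy"
    static_member_id: str      # present only for StaticMembership sources
    policy_assignment_id: str  # present only for Policy sources
    policy_definition_id: str  # present only for Policy sources


def list_membership_sources(properties: Union[str, Dict[str, Any]]) -> List[MembershipSource]:
    """Flatten GroupMemberships[*].Sources[*] into one row per source.

    Accepts properties as a dict or as a JSON-encoded string (assumed layouts).
    Missing optional fields become empty strings.
    """
    if isinstance(properties, str):
        properties = json.loads(properties)
    rows = []
    for membership in properties.get("GroupMemberships", []):
        group_id = membership.get("NetworkGroupId", "")
        for source in membership.get("Sources", []):
            rows.append(
                MembershipSource(
                    network_group_id=group_id,
                    source_type=source.get("Type", ""),
                    static_member_id=source.get("StaticMemberId", ""),
                    policy_assignment_id=source.get("PolicyAssignmentId", ""),
                    policy_definition_id=source.get("PolicyDefinitionId", ""),
                )
            )
    return rows


def groups_joined_via_policy(properties: Union[str, Dict[str, Any]]) -> List[str]:
    """Network group IDs where at least one membership source is Azure Policy,
    in first-seen order without duplicates."""
    seen: List[str] = []
    for row in list_membership_sources(properties):
        if row.source_type == "Policy" and row.network_group_id not in seen:
            seen.append(row.network_group_id)
    return seen
```

Flattening into rows keeps a virtual network that is both statically and conditionally included in the same group visible as two separate sources, which matches the note that multiple “NetworkGroupId” and “Sources” entries can appear.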

How do I get started?

Saturday 25 March 2023

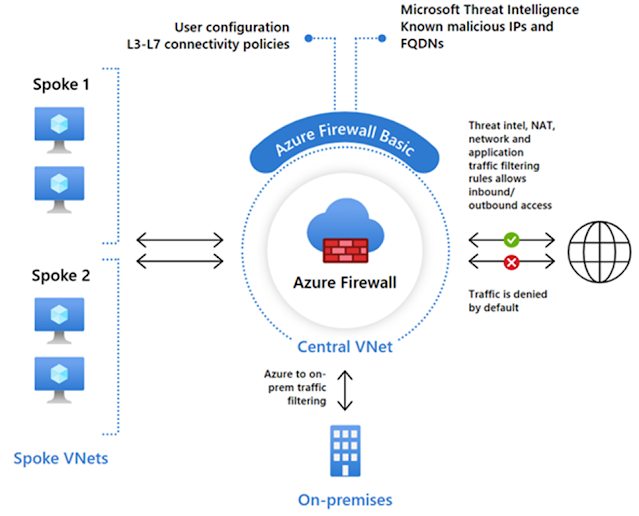

Protect against cyberattacks with the new Azure Firewall Basic

Providing SMBs with a highly available Firewall at an affordable price point

Key features of Azure Firewall Basic

Choose the right Azure Firewall SKU for your business

Azure Firewall Basic pricing

Tuesday 21 March 2023

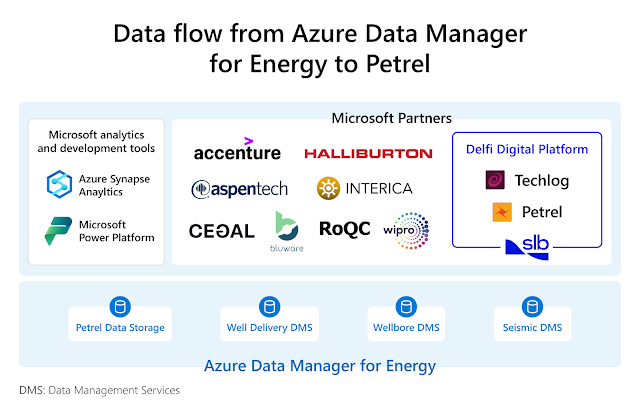

Azure Data Manager for Energy: Achieve interoperability with Petrel

“We all benefit from making the world more open. As an industry, our shared goal is that openness in data will enable a fully liberated and connected data landscape. This is the natural next step towards data-driven workflows that integrate technologies seamlessly and leverage AI for diverse and creative solutions that take business performance to the next level.”—Trygve Randen, Director, Data & Subsurface at Digital & Integration, SLB.

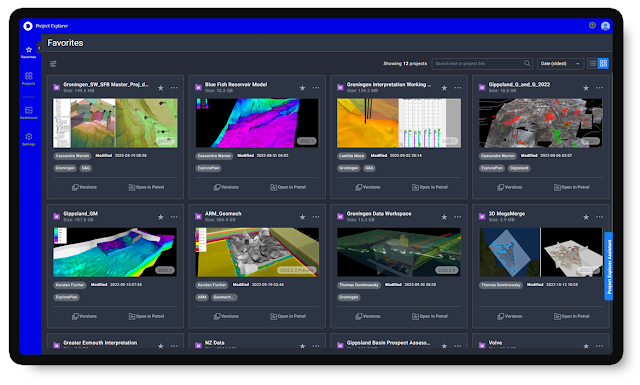

Petrel and Project Explorer on Azure Data Manager for Energy

Interoperable workflows that de-risk and drive efficiency

Thursday 16 March 2023

Microsoft and Rockwell Automation collaborate on digital solutions for manufacturers

Building solutions for both physical and digital operations

How manufacturers are working with Microsoft and Rockwell Automation

Tuesday 14 March 2023

Azure previews powerful and scalable virtual machine series to accelerate generative AI

“Co-designing supercomputers with Azure has been crucial for scaling our demanding AI training needs, making our research and alignment work on systems like ChatGPT possible.”—Greg Brockman, President and Co-Founder of OpenAI.

Azure's most powerful and massively scalable AI virtual machine series

Delivering exascale AI supercomputers to the cloud

"Our focus on conversational AI requires us to develop and train some of the most complex large language models. Azure's AI infrastructure provides us with the necessary performance to efficiently process these models reliably at a huge scale. We are thrilled about the new VMs on Azure and the increased performance they will bring to our AI development efforts."—Mustafa Suleyman, CEO, Inflection.

“NVIDIA and Microsoft Azure have collaborated through multiple generations of products to bring leading AI innovations to enterprises around the world. The NDv5 H100 virtual machines will help power a new era of generative AI applications and services.”—Ian Buck, Vice President of hyperscale and high-performance computing at NVIDIA.