The era of generative AI has arrived, offering new possibilities for every person, business, and industry. At the same time, the speed, scale, and sophistication of cyberattacks, increasing regulations, an ever-expanding data estate, and business demand for data insights are all converging. This convergence puts pressure on business leaders to adopt a modern data governance and security strategy so they can confidently ensure AI readiness.

A modern data governance and security solution unifies data protection and governance capabilities, simplifies actions through business-friendly profiles and terminology, uses AI to drive business efficiency, and enables federated governance across a disparate, multi-cloud data estate.

Microsoft Purview is a comprehensive set of solutions that can help your organization govern, protect, and manage data, wherever it lives. Microsoft Purview provides integrated coverage and helps address the fragmentation of data across organizations, the lack of visibility that hampers data protection and governance, and the blurring of traditional IT management roles.

Today, we are excited to announce a reimagined data governance experience within Microsoft Purview, available in preview starting April 8, 2024. This new software-as-a-service (SaaS) experience offers sophisticated yet simple business-friendly interaction, integration across data sources, AI-enabled business efficiency, and actions and insights to help you put the “practice” into your data governance practice.

Modern data governance with Microsoft Purview

Over the past several years, I led Microsoft through our own modern data governance journey, and this experience exposed the realities, challenges, and key ingredients of a modern data governance journey.

Our new Microsoft Purview data governance solution is grounded in years of applied learning and proven practices from navigating our own data transformation journey, along with the transformation journeys of our enterprise customers. To that end, our vision for a modern data governance solution is based on the following design principles:

Anchored on durable business concepts

The practice of data governance should enable an organization to accelerate the creation of responsible value from their data. By anchoring data governance investments to measurable business objectives and key results (OKRs), organizations can align their data governance practice to business priorities and demonstrate business value outcomes.

A unified, integrated, and extensible experience

A modern data governance solution should offer a single-pane-of-glass experience that integrates across multi-cloud data estate sources for data curation, management, health controls, discovery, and understanding, backed with compliant, self-serve data access. The unified experience reduces the need for laborious and costly custom-built solutions or multiple point solutions. This enables a focus on accelerating data governance practices, activating federated data governance across business units, and ensuring leaders have real-time insights into governance health.

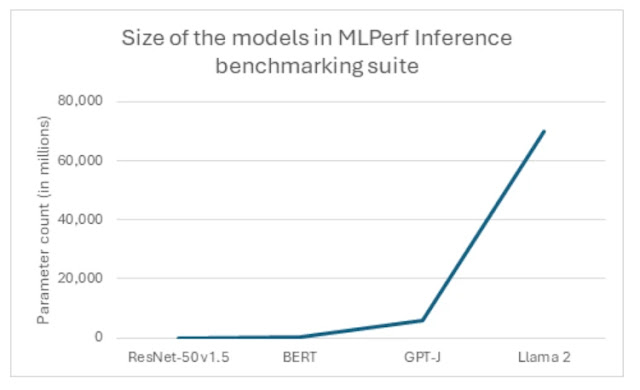

Scale success with AI-enabled experiences

An ever-growing and changing data estate demands simplicity in how it is governed, both to ensure business adoption and to achieve implementation efficiencies. Natural language interactions and machine learning (ML)-based recommendations across governance capabilities are critical to this simplification and to accelerating data governance adoption.

A culture of data governance and protection

Data governance solutions must be built for the practice of federated data governance, which is unique to each organization. Just as adopting cloud solutions requires one to become a cloud company, adopting data governance requires one to become a data governance company. Success with modern data governance requires C-suite alignment and support, and the solution must be simple, efficient, customizable, and flexible enough to activate your unique practice.

Introducing data governance for the business, by the business

We are thrilled to introduce the new Microsoft Purview data governance experience. Our new data governance capabilities will help organizations of any size accelerate business value creation in the era of AI.

A business-friendly approach to govern multi-cloud data estates

Designed with the business in mind, the new governance experience supports different functions across the business with clear role definitions for governance administrators, business domain creators, data health owners, and data health readers.

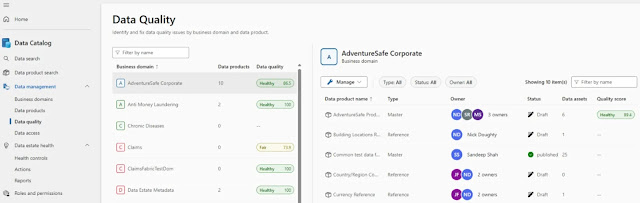

Within Data Management, customers can easily define and assign business-friendly terminology (such as Finance and Claims). Business-friendly language follows the data governance experience through Data Products (a collection of data assets used for a business function), Business Domains (ownership of Data Products), Data Quality (assessment of quality), Data Access, Actions, and Data Estate Health (reports and insights).

This new data governance experience allows you to scan and search data across your data estate assets.

Built-in data quality capabilities and rules which follow the data

The new data quality model enables your organization to set rules top down across business domains, data products, and the data assets themselves. Policies can be set on a term or rule that flows through to the data beneath it, which can save data stewards hours to days of manual work depending on the scale of your estate. Once rules and policies are applied, the data quality model generates data quality scores at the asset, data product, or business domain level, giving you a snapshot of your data quality relative to your business rules.
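To make the idea of scores rolling up from assets to data products to business domains more concrete, here is a minimal sketch in Python. It assumes a hypothetical model in which an asset's score is the fraction of its rule checks that pass, a data product's score is the unweighted mean of its assets' scores, and a business domain's score is the unweighted mean of its data products' scores. Microsoft Purview's actual scoring formula isn't described here, and the class names are illustrative only, not a Purview API.

```python
from dataclasses import dataclass, field
from statistics import mean

# Hypothetical roll-up model for illustration only; not the Microsoft Purview API.


@dataclass
class DataAsset:
    name: str
    rule_results: list[bool]  # True where a data quality rule check passed

    def score(self) -> float:
        # Asset score: share of rule checks that passed (0.0 if no rules ran).
        if not self.rule_results:
            return 0.0
        return sum(self.rule_results) / len(self.rule_results)


@dataclass
class DataProduct:
    name: str
    assets: list[DataAsset] = field(default_factory=list)

    def score(self) -> float:
        # Data product score: unweighted mean of its asset scores (assumption).
        return mean(a.score() for a in self.assets) if self.assets else 0.0


@dataclass
class BusinessDomain:
    name: str
    products: list[DataProduct] = field(default_factory=list)

    def score(self) -> float:
        # Business domain score: unweighted mean of its data product scores (assumption).
        return mean(p.score() for p in self.products) if self.products else 0.0


claims = BusinessDomain("Claims", [
    DataProduct("Claims history", [
        DataAsset("claims_2023", [True, True, True, False]),  # 0.75
        DataAsset("claims_2024", [True, True]),               # 1.0
    ]),
    DataProduct("Fraud signals", [
        DataAsset("fraud_flags", [True, False]),              # 0.5
    ]),
])

print(f"{claims.score():.4f}")  # prints 0.6875
```

An unweighted mean is only one plausible choice; weighting by asset size or by rule criticality would be equally reasonable assumptions.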

Within the data quality model, there are two metadata analysis capabilities: (1) profiling, which provides quick insights from a sample set, and (2) data quality scans, which perform in-depth scans of full data sets. These capabilities use your defined rules or built-in templates to reason over your metadata and give you data quality insights and recommendations.
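The trade-off between profiling a sample and scanning a full data set can be illustrated with a small Python sketch. It assumes a single hypothetical completeness rule (a value passes if it is not None) and synthetic data; the function names are illustrative and do not correspond to any Purview feature or API.

```python
import random

# Hypothetical completeness rule for illustration; not a Purview API.


def completeness(values: list) -> float:
    """Fraction of values that are present (not None)."""
    if not values:
        return 0.0
    return sum(v is not None for v in values) / len(values)


def profile(values: list, sample_size: int, seed: int = 0) -> float:
    """Quick estimate from a random sample, in the spirit of profiling."""
    rng = random.Random(seed)  # seeded so the estimate is reproducible
    sample = rng.sample(values, min(sample_size, len(values)))
    return completeness(sample)


def full_scan(values: list) -> float:
    """Exact result over every row, in the spirit of a full data quality scan."""
    return completeness(values)


# 100,000 synthetic rows where every 20th value is missing (95% complete).
rows = [None if i % 20 == 0 else i for i in range(100_000)]
print(f"profile (1,000 rows): {profile(rows, 1_000):.3f}")
print(f"full scan:            {full_scan(rows):.3f}")  # prints 0.950
```

The sample-based estimate is cheap and usually close to the exact value, while the full scan touches every row and returns the exact figure, which mirrors the quick-versus-in-depth distinction described above.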

Apply industry standard controls in data estate health management

In partnership with EDM Council, new data health controls include a set of 14 standards for cloud data management controls. These standards govern how data should be managed, while the controls provide fidelity into how data assets are used and accessed. Examples include metadata completeness, cataloging, classification, access entitlement, and data quality. A data office can configure rules that determine the score and define what constitutes a red, yellow, or green indicator score, ensuring your rules and indicators reflect the unique standards of your organization.
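As an illustration of how configurable thresholds might map a control score to a red, yellow, or green indicator, here is a small Python sketch. The threshold values, the 0 to 1 score scale, and the names `IndicatorThresholds` and `indicator` are hypothetical choices a data office might make, not Purview defaults or APIs.

```python
from dataclasses import dataclass

# Hypothetical thresholds chosen by a data office; not Purview defaults.


@dataclass(frozen=True)
class IndicatorThresholds:
    green_min: float   # score at or above this is green
    yellow_min: float  # score at or above this (but below green_min) is yellow

    def __post_init__(self) -> None:
        # Guard against misconfigured thresholds.
        if not 0.0 <= self.yellow_min <= self.green_min <= 1.0:
            raise ValueError("expected 0 <= yellow_min <= green_min <= 1")


def indicator(score: float, t: IndicatorThresholds) -> str:
    """Map a control score in [0, 1] to a red/yellow/green indicator."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= t.green_min:
        return "green"
    if score >= t.yellow_min:
        return "yellow"
    return "red"


# Example: a stricter organization might require 90% for a green indicator.
strict = IndicatorThresholds(green_min=0.90, yellow_min=0.70)
scores = {"metadata completeness": 0.93, "classification": 0.78, "access entitlement": 0.55}
for control, score in scores.items():
    print(f"{control}: {indicator(score, strict)}")
# metadata completeness: green
# classification: yellow
# access entitlement: red
```

Keeping the thresholds in a separate configuration object reflects the point above: each organization can tune what counts as green, yellow, or red without changing how scores are computed.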

Summarized insights help activate and sustain your practice

Data governance is a practice which is nurtured over time. Aggregated insights help you put the “practice” into your data governance practice by showcasing the overall health of your governed data estate. Built-in reports surface deep insight across a variety of dimensions: assets, catalog adoption, classifications, data governance, data stewardship, glossary, and sensitivity labels.

The image below shows the Data Governance report, which can be filtered by business domain, data product, and status for deeper insights.

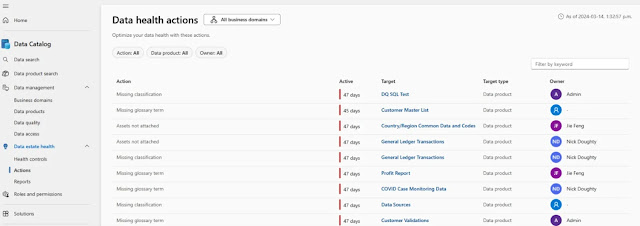

Stay on top of data governance health with aggregated actions

The new Actions center aggregates and summarizes governance-related actions by role, data product, or business domain. Actions arise when usage or implementation is out of alignment with defined controls. This interactive summary makes it easy for teams to manage and track actions: simply click an action to make the required change. Cleaning up outstanding actions helps improve the overall posture of your data governance practice, which is key to making governance a team sport.

Announcing technology partnerships for even greater customer value

We are excited to announce a solution initiative with Ernst & Young LLP (EY US), which will bring its extensive experience in data solutions within financial services to collaborate with Microsoft on producing data governance reports and playbooks purpose-built for US-oriented financial services customers. These reports and playbooks aim to accelerate customers' time to value in activating a governance practice that adheres to the unique regulatory needs of the financial sector. These assets will be made available in Azure Marketplace over the course of the preview, and the learnings will also help inform the future product roadmap.

Additionally, a modern data governance solution integrates and extends across your technology estate. With this new data governance experience, we are also excited to announce technology partnerships that will help seamlessly extend the value of Microsoft Purview to customers through pre-built integrations. Integrations will light up over the course of the preview and be available in Azure Marketplace.

Master Data Management

◉ CluedIn brings native Master Data Management and Data Quality functionality to Microsoft Fabric, Microsoft Purview, and the Azure stack.

◉ Profisee Master Data Management is a complementary and necessary piece of your data governance strategy.

◉ Semarchy combines master data management, data intelligence, and data integration into a single application in any environment.

Data Lineage

◉ Solidatus empowers data-rich enterprises to visualize, understand, and govern data like never before.

Source: microsoft.com