Today, many organizations are undergoing digital transformation and delivering their applications and services in the cloud. At Microsoft Build 2019, we announced the general availability of Azure Quickstart Center and received positive feedback from customers. Azure Quickstart Center brings together the step-by-step guidance you need to easily create cloud workloads. The ability to set up, configure, and manage cloud workloads with guidance based on best practices is now built right into the Azure portal.

How do you access Azure Quickstart Center?

There are two ways to access Azure Quickstart Center in the Azure portal: type Quickstart Center in the global search bar, or select All services in the left navigation pane and type Quickstart Center. Select the star icon to add it to your favorites.

Get started

Azure Quickstart Center is designed with you in mind. It offers setup guides, a Start a project experience, and curated online training for self-paced learning, so that you can manage cloud deployment according to your business needs.

Setup guides

To help you prepare your organization for moving to the cloud, the Azure setup and Azure migration guides in Quickstart Center give you a comprehensive view of best practices for your cloud ecosystem. The guides were created by our FastTrack for Azure team, which has supported customers with cloud deployments and turned those insights into easy-to-use reference guides for you.

The Azure setup guide walks you through how to:

◈ Organize resources: Set up a management hierarchy to consistently apply access control, policy, and compliance to groups of resources and use tagging to track related resources.

◈ Manage access: Use role-based access control to make sure that users have only the permissions they need.

◈ Manage costs: Identify your subscription type, and learn how billing works and how you can control costs.

◈ Governance, security, and compliance: Enforce and automate policies and security settings that help you follow applicable legal requirements.

◈ Monitoring and reporting: Get visibility across resources to help find and fix problems, optimize performance, or gain insight into customer behavior.

◈ Stay current with Azure: Track product updates so you can take a proactive approach to change management.

The Azure migration guide focuses on rehosting, also known as lift and shift, and gives you a detailed view of how to migrate applications and resources from your on-premises environment to Azure. The migration guide covers:

◈ Prerequisites: Work with your internal stakeholders to understand the business reasons for migration, determine which assets (such as infrastructure, apps, and data) are being migrated, and set the migration timeline.

◈ Assess the digital estate: Assess each workload and its related assets, such as infrastructure, apps, and data, to ensure they are compatible with cloud platforms.

◈ Migrate assets: Identify the appropriate tools to reach a "done state," including native tools, third-party tools, and project management tools.

◈ Manage costs: Cost discussion is a critical step in migration. Use the guidance in this step to drive the discussion.

◈ Optimize and transform: After migration, review the solution for possible areas of optimization. This could include reviewing the design of the solution, right-sizing the services, and analyzing costs.

◈ Secure and manage: Set up and enforce policies to manage the environment and ensure operational efficiency and legal compliance.

◈ Assistance: Learn how to get the right support at the right time to continue your cloud journey in Azure.

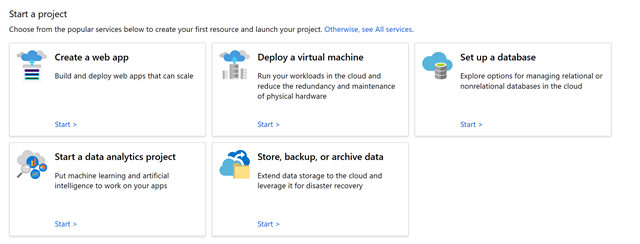

Start a project

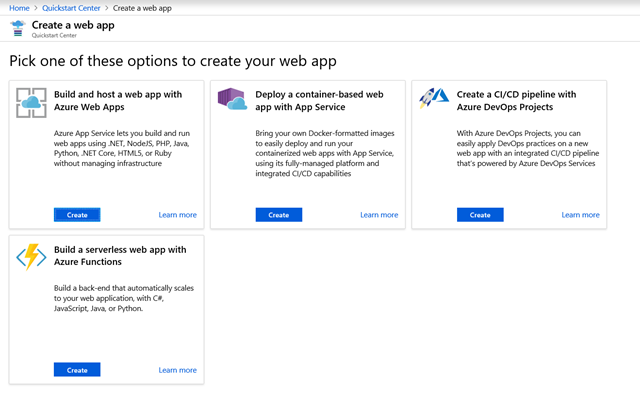

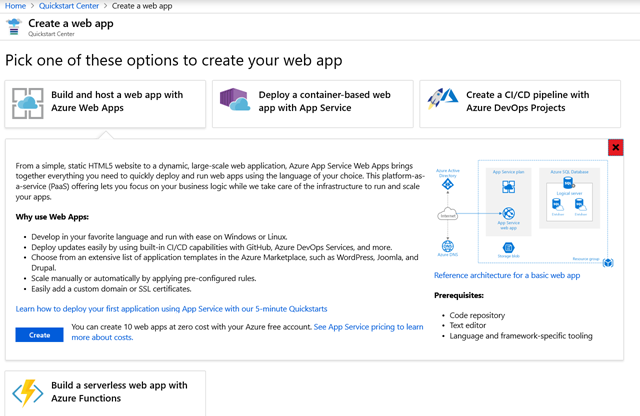

Compare frequently used Azure services available for different solution types, and discover the best fit for your cloud project. We’ll help you quickly launch and create workloads in the cloud. Pick one of the five common scenarios shown below to compare the deployment options and evaluate high-level architecture overviews, prerequisites, and associated costs.

After you select a scenario, choose an option, and review the requirements, select Create.

We’ll take you to the create resource page, where you follow the steps to create the resource.

Take an online course

Our recommended online learning options let you take a hands-on approach to building Azure skills and knowledge.