Security is based on the inherent need for safety. Today, we see that need challenged more than ever. In the past year alone, we’ve witnessed an exponential increase in ransomware, supply chain attacks, phishing, and identity theft. These activities fundamentally threaten our human desire for security in situations such as ransomware attacks on hospitals or supply chain attacks on industrial environments.

This security challenge meets us on a variety of fronts. While attacks like Nobelium demonstrate the level of sophistication of organized nation-state actors, most attacks exploit far simpler vulnerabilities that are often publicly documented, with patches already available. Why is security so much more challenging today?

For defenders, the surface area to protect has never been larger. Security teams are often chronically understaffed. On top of that, they’re overwhelmed by the volume of security signals, much of which is noise, and often spend valuable resources on non-security work, like maintaining infrastructure.

Something needs to change. Security needs to be a foundational principle, permeating every phase of the development process, from the cloud platform itself to the DevOps lifecycle, to the security operations processes.

Azure: The only cloud platform built by a security vendor

Facing these challenges requires you to embed security into every layer of architecture. As the only major cloud platform built by a security vendor, Azure empowers you to do that. Microsoft has deep security expertise, serving 400,000 customers including 90 out of the Fortune 100, and achieving $10 billion in security revenue as a result. Microsoft’s security ecosystem includes products that are leaders in a total of five Gartner Magic Quadrants and seven Forrester Waves, plus:

◉ Microsoft employs more than 3,700 security experts and spends more than $1 billion on security every single year.

◉ The volume of security signals that Microsoft analyzes is staggering—more than 8 trillion signals every 24 hours.

◉ In 2020 alone Microsoft 365 Defender blocked 6 billion malware threats.

In a nutshell, we get security. It’s this extensive experience that informs our approach to Azure security—security that is built-in, modern, and holistic.

Security is not a destination, but a continuous journey. Well-funded nation-state attackers will always continue to innovate. That’s why it’s so important to choose a cloud vendor who is constantly monitoring for security threats, constantly raising the bar on the security of the platform, and constantly assessing best practices.

Built-in: Security integrated into the DevOps lifecycle

Protecting your cloud innovation requires security to be built into every stage of the lifecycle and every level of architecture. If it isn’t, developers struggle to integrate security into the DevOps cycle, and either security teams are forced to slow down innovation or assets go unprotected. That’s why Azure has security built in at every layer of architecture, not only at the runtime level but also when writing code.

GitHub Advanced Security features, for example, empower developers to deliver more secure code with built-in security capabilities like code scanning for vulnerabilities and secret scanning to avoid putting secrets like keys and passwords in code repositories. Another key area of focus is dependency review, so that developers can update vulnerable open-source dependencies before merging code. This is important because 94 percent of projects use open source code in some form (GitHub Octoverse 2020 report).
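To make the idea of secret scanning concrete, here is a minimal Python sketch of pattern-based detection. It is not how GitHub Advanced Security is implemented; the pattern set, the `SECRET_PATTERNS` name, and the `scan_for_secrets` helper are all hypothetical and chosen only for illustration.

```python
import re

# Illustrative patterns only (assumption): real scanners rely on much larger,
# provider-maintained rule sets and additional validation.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    "hardcoded_password": re.compile(r"(?i)\bpassword\s*=\s*['\"][^'\"]+['\"]"),
}


def scan_for_secrets(text):
    """Return (line_number, pattern_name) for every line that looks like a secret."""
    findings = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((line_number, name))
    return findings
```

Running a check like this before code is merged is the general point: a suspected key or password is flagged while it is still cheap to remove and rotate.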

Easily discover and turn on security tools with controls that are built directly into the Azure platform. Controls built into resources like the virtual machine, SQL, storage, and container blades put security within easy reach for users beyond security professionals. Tools like Azure Defender help security operations (SecOps) work at scale and enable protection and monitoring for all cloud resources. Azure also offers broad policy support, automation, and actionable best practices.

Zero Trust principles enforce security at every level of the organization. Azure is built on top of these key principles: verify explicitly and assume breach. Azure has a consistent Azure Resource Manager (ARM) layer to manage resources. This layer combines with our identity capabilities to deliver multi-factor authentication and least privilege access. What’s more, you get an architecture designed for Zero Trust with Azure’s built-in networking capabilities, spanning micro-segmentation to firewalls.
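As a rough illustration of how "verify explicitly" and least privilege access combine, the Python sketch below denies any request that lacks a verified second factor or asks for an action that was never granted. The `AccessRequest` type and `is_allowed` function are hypothetical and do not represent an Azure API.

```python
from dataclasses import dataclass, field


@dataclass
class AccessRequest:
    """Hypothetical request shape used only for this sketch."""
    user: str
    mfa_verified: bool  # did the caller pass multi-factor authentication?
    action: str  # e.g. "read" or "write"
    granted_actions: set = field(default_factory=set)  # least privilege grants


def is_allowed(request):
    """Deny by default: require explicit verification and an explicit grant."""
    if not request.mfa_verified:
        return False
    return request.action in request.granted_actions
```

The design choice worth noting is the default: nothing is trusted because of where the request comes from, so every path that is not explicitly verified and granted ends in a denial.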

Modern: Security fueled by AI and the scale of the cloud

When defenders leverage the power of AI and the scale of the cloud, they can protect, detect, and respond at a pace that lets them get ahead of threats. It’s here that Microsoft’s wholesale commitment to security truly shines. Azure’s security approach is also very modern, especially compared to the tools that customers are using on-premises.

The AI used in Azure security solutions is powered by threat intelligence from across Microsoft’s entire security portfolio, encompassing trillions of signals per day and a large diversity of signals from the Microsoft Cloud. This allows Azure solutions to prioritize the most important incidents to raise to the security team, drastically cutting down on noise and saving SecOps precious time. In addition, cloud-scale means that you always have the capacity you need, without investing in infrastructure setup and maintenance.

Holistic: Secure your entire organization, including Azure, hybrid, and multi-cloud

The attacks we’ve seen in recent years have proven that the age of effective point solutions is long over. Relying on a patchwork of disparate security solutions not only makes it harder for security teams to do their jobs—forcing them to pivot between many different tools—it also introduces far too many gaps for attackers to slip between.

That’s why it’s so important that security is holistic. Azure security solutions don’t just help you protect Azure—they protect your whole organization, including multi-cloud and hybrid environments. This gives you a unified view of your entire environment and enables SecOps to be more efficient with fewer tools.

For example, at the development phase, GitHub Advanced Security helps secure code deployed on any cloud. SecOps can get a bird’s-eye view of your entire organization, including other clouds and your non-Microsoft security ecosystem, with Azure Sentinel, Microsoft’s cloud-native SIEM. Or take it to the next level with integrated SIEM and XDR, combining Azure Sentinel, Azure Defender, and Microsoft 365 Defender for comprehensive coverage and a view of the most important incidents that need attention immediately.

Plus, manage your cloud security posture across Azure, AWS, Google Cloud, and on-premises within one user experience in Azure Defender.

Where do you start?

Azure’s built-in, modern, and holistic solutions drastically simplify the process of securing your estate. But where do you start? Security is a shared responsibility. Whether you’re a new customer or an existing user, here are five steps that we advise you to take now:

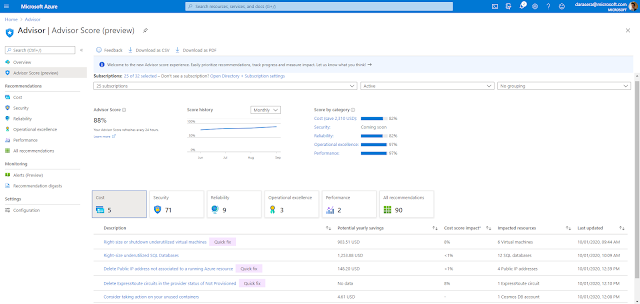

1. Turn on Azure Secure Score. Azure Secure Score, located in Azure Security Center, gives a numeric view of your Azure security posture.

2. Turn on multi-factor authentication. Identity is such an important threat vector, and multi-factor authentication significantly reduces risk.

3. Turn on Azure Defender for all cloud workloads. Azure Defender protects against threats like remote desktop protocol (RDP) brute-force attacks, SQL injections, attacks on storage accounts, and much more. You can turn on Azure Defender with just a few clicks.

4. Turn on Azure WAF and DDoS protection for every website. This will ensure your web applications are protected from malicious attacks and common web vulnerabilities.

5. Turn on Azure Firewall for every subscription to protect Azure virtual networks.

On an ongoing basis, it’s important that you assign a team member or partner to raise your Azure Secure Score percentage and engage your security operations team to act on important incidents. This goes a long way towards improving your cloud security posture and lowering security risk.
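For anyone tracking that percentage over time, the arithmetic can be sketched in a few lines of Python. This assumes the percentage is simply points earned divided by the maximum points available across security controls; the `secure_score_percentage` helper and the sample point values are hypothetical, and the real figure should be read from Azure Security Center.

```python
def secure_score_percentage(controls):
    """Compute a score percentage from (current_points, max_points) pairs.

    Assumption for this sketch: percentage = earned / maximum * 100,
    summed over all security controls.
    """
    earned = sum(current for current, _ in controls)
    maximum = sum(max_points for _, max_points in controls)
    if maximum == 0:
        return 0.0  # no scorable controls, so there is nothing to report
    return round(100 * earned / maximum, 1)


# Hypothetical controls: (points earned, maximum points)
example_controls = [(6, 10), (4, 4), (0, 6)]
print(secure_score_percentage(example_controls))  # prints 50.0
```

A number like this is most useful as a trend: the assigned team member can see which controls still have points outstanding and prioritize those first.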

Source: microsoft.com