Companies have long collected data from various sources, which led to the development of data lakes for storing data at scale. However, data lakes lacked critical features such as data quality. The Lakehouse architecture emerged to address the limitations of both data warehouses and data lakes. The Lakehouse is a robust framework for enterprise data infrastructure, and Delta Lake, its storage layer, has gained wide popularity. Databricks, a pioneer of the Data Lakehouse, makes it an integral component of its Data Intelligence Platform, which is available on Microsoft Azure as Azure Databricks, a fully managed, first-party Data and AI solution. This makes Azure the optimal cloud for running Databricks workloads. This blog post discusses the key advantages of Azure Databricks in detail:

1. Seamless integration with Azure.

2. Regional availability and performance.

3. Security and compliance.

4. Unique partnership: Microsoft and Databricks.

1. Seamless integration with Azure

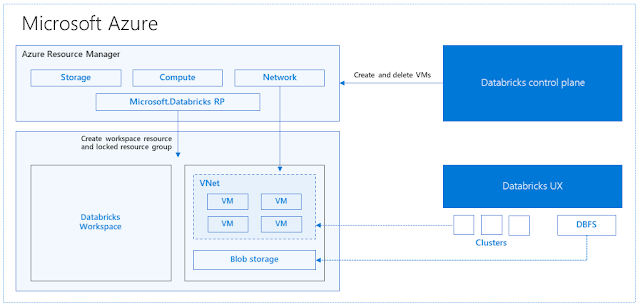

Azure Databricks is a first-party service on Microsoft Azure. It integrates natively with vital Azure services and with workloads that add value, and onboarding onto a Databricks workspace takes just a few clicks.

Native integration as a first-party service

◉ Microsoft Entra ID (formerly Azure Active Directory): Azure Databricks integrates with Microsoft Entra ID for authentication and managed access control. Engineering teams at Microsoft and Databricks jointly built this integration into Azure Databricks, so it works out of the box and customers don't have to build it themselves.

◉ Azure Data Lake Storage (ADLS Gen2): Azure Databricks can read and write data directly in ADLS Gen2, and the two have been jointly optimized for the fastest possible data access, enabling efficient data processing and analytics. Integration with Azure Storage services such as ADLS Gen2 and Blob Storage also streamlines the experience of running data workloads.

◉ Azure Monitor and Log Analytics: You can monitor Azure Databricks clusters and jobs with Azure Monitor and gain insights from their logs through Log Analytics.

◉ Databricks extension for Visual Studio Code: The Databricks extension for Visual Studio Code works with Azure Databricks, providing a direct connection between the local development environment and an Azure Databricks workspace.
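To make the ADLS Gen2 integration above more concrete: Spark on Azure Databricks typically addresses ADLS Gen2 data with `abfss://` URIs of the form `abfss://<container>@<storage-account>.dfs.core.windows.net/<path>`. The minimal Python sketch below builds such a URI. The function name `adls_uri` and the example container (`raw`) and storage account (`contosodata`) are hypothetical, and the sketch assumes the workspace already has access to the storage account (for example, through a Unity Catalog external location); it does not configure authentication.

```python
import re

# Azure storage account names are 3-24 characters: lowercase letters and digits.
_ACCOUNT_NAME = re.compile(r"[a-z0-9]{3,24}")


def adls_uri(container: str, account: str, path: str = "") -> str:
    """Return the abfss:// URI for `path` inside an ADLS Gen2 container (hypothetical helper)."""
    if not _ACCOUNT_NAME.fullmatch(account):
        raise ValueError(f"invalid storage account name: {account!r}")
    if not container:
        raise ValueError("container name must not be empty")
    # Strip leading slashes so the path joins cleanly after the host name.
    return f"abfss://{container}@{account}.dfs.core.windows.net/{path.lstrip('/')}"


# In an Azure Databricks notebook, where `spark` is predefined, the URI would be
# passed to Spark, for example:
#   df = spark.read.format("delta").load(adls_uri("raw", "contosodata", "sales"))
print(adls_uri("raw", "contosodata", "sales/2024"))
```

Validating the account name up front catches typos before Spark attempts a network call against a host that does not exist.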

Integrated services that deliver value

◉ Power BI: Power BI is a business analytics service that provides interactive visualizations with self-service business intelligence capabilities. Using Azure Databricks as a data source for Power BI extends the performance and technology of Azure Databricks beyond data scientists and data engineers to all business users. Power BI Desktop can connect to Azure Databricks clusters and Databricks SQL warehouses. Power BI's strong enterprise semantic modeling and calculation capabilities allow you to define calculations, hierarchies, and other business logic that is meaningful to customers, while the Azure Databricks Lakehouse orchestrates the data flowing into the model. You can publish Power BI reports to the Power BI service and let users access the underlying Azure Databricks data through single sign-on (SSO), passing along the same Microsoft Entra ID credentials they use to access the report. With a Power BI Premium license, you can publish directly from Azure Databricks, creating Power BI datasets from Unity Catalog tables and schemas without leaving the Azure Databricks UI. Direct Lake mode, a unique feature currently available in Power BI Premium and Microsoft Fabric capacities (F SKUs), also works with Azure Databricks. It enables the analysis of very large data volumes by loading Parquet-formatted files directly from a data lake, which makes it particularly useful for very large models that need low latency and for models whose source data is updated frequently.

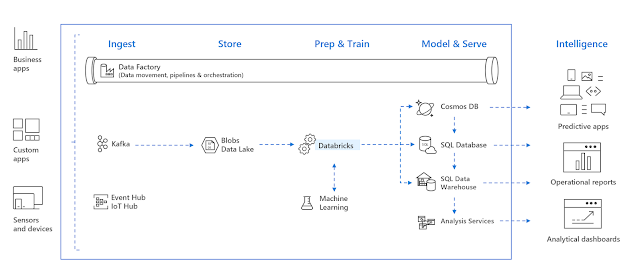

◉ Azure Data Factory (ADF): ADF can natively ingest data into the Azure cloud from more than 100 different data sources. It also provides graphical data orchestration and monitoring, making pipelines easy to build, configure, deploy, and monitor in production. ADF integrates natively with Azure Databricks through the Azure Databricks linked service and can run notebook, Java Archive (JAR), and Python activities. This lets organizations build scalable data orchestration pipelines that ingest data from various sources and curate it in the Lakehouse.

◉ Azure OpenAI: Azure Databricks includes built-in tools to support ML workflows, including AI Functions, built-in Databricks SQL functions that let you access Large Language Models (LLMs) directly from SQL. With AI Functions, customers can quickly experiment with LLMs on their company's data from within a familiar SQL interface. Once the right LLM prompt has been developed, it can quickly be turned into a production pipeline using existing Databricks tools such as Delta Live Tables or scheduled Jobs.

◉ Microsoft Purview: Microsoft Purview, Microsoft Azure's data governance solution, integrates with the catalog, lineage, and policy Application Programming Interfaces (APIs) of Azure Databricks Unity Catalog. This allows discovery and access requests within Microsoft Purview, while Unity Catalog remains the operational catalog on Azure Databricks. Microsoft Purview supports metadata sync with Azure Databricks Unity Catalog, covering metastore catalogs, schemas, tables, and views, including their columns. The integration also discovers Lakehouse data and brings its metadata into the Microsoft Purview Data Map, where you can scan the entire Unity Catalog metastore or only selected catalogs. Integrating the data governance policies of Microsoft Purview and Databricks Unity Catalog provides a single-pane experience for data and analytics governance in Microsoft Purview.
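For the ADF integration described above, a pipeline runs an Azure Databricks notebook through a `DatabricksNotebook` activity that references the Azure Databricks linked service. The Python sketch below produces that activity definition as a dictionary and prints it as JSON. The activity name (`CurateSales`), linked service name (`AzureDatabricksLS`), notebook path, and `run_date` parameter are hypothetical placeholders, and the helper function is illustrative rather than part of any SDK.

```python
import json


def databricks_notebook_activity(name, linked_service, notebook_path, parameters=None):
    """Return an ADF activity definition that runs an Azure Databricks notebook (hypothetical helper)."""
    return {
        "name": name,
        "type": "DatabricksNotebook",
        "linkedServiceName": {
            # Must match the name of an existing Azure Databricks linked service.
            "referenceName": linked_service,
            "type": "LinkedServiceReference",
        },
        "typeProperties": {
            "notebookPath": notebook_path,
            # baseParameters reach the notebook as widget values (dbutils.widgets.get).
            "baseParameters": dict(parameters or {}),
        },
    }


activity = databricks_notebook_activity(
    "CurateSales", "AzureDatabricksLS", "/Shared/curate_sales", {"run_date": "2024-06-01"}
)
print(json.dumps(activity, indent=2))
```

In a real pipeline, an object like this would sit inside the pipeline's `activities` array, whether the pipeline is authored in the ADF UI, an ARM template, or an SDK.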

Best of both worlds with Azure Databricks and Microsoft Fabric

Microsoft Fabric is a unified analytics platform that includes all the data and analytics tools that organizations need. It brings together experiences such as Data Engineering, Data Factory, Data Science, Data Warehouse, Real-Time Intelligence, and Power BI on a shared SaaS foundation, all seamlessly integrated into a single service. Microsoft Fabric comes with OneLake, an open, governed, unified SaaS data lake that serves as a single place to store organizational data. Microsoft Fabric simplifies data access with shortcuts, which make files, folders, and tables stored elsewhere available in OneLake in its native open format, Delta Parquet. Shortcuts let all Microsoft Fabric engines operate on the data without moving or copying it and without disrupting existing use by the host engines.

For instance, creating a shortcut to Delta Lake tables generated by Azure Databricks lets customers easily serve Lakehouse data to Power BI through Direct Lake mode. Power BI Premium, a core component of Microsoft Fabric, offers Direct Lake mode to serve data directly from OneLake without querying an Azure Databricks Lakehouse or warehouse endpoint. This eliminates the need to duplicate data or import it into a Power BI model, enabling blazing-fast performance directly over data in OneLake as an alternative to serving Power BI via ADLS Gen2. Because both Azure Databricks and Microsoft Fabric are built on the Lakehouse architecture, Microsoft Azure customers, unlike customers of other public clouds, can choose to work with either or both of these powerful, open, governed Data and AI solutions to get the most from their data. Together, Azure Databricks and Microsoft Fabric can simplify an organization's overall data journey through deeper integration in the development pipeline.
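Once a Fabric lakehouse contains a shortcut to a Delta Lake table written by Azure Databricks, Fabric engines can address it through OneLake's ADLS-compatible endpoint, using paths of the form `abfss://<workspace>@onelake.dfs.fabric.microsoft.com/<item>.<itemtype>/<path>`. The Python sketch below builds such a path for a table shortcut. It assumes the shortcut sits directly under the lakehouse's `Tables` folder (no lakehouse schemas), and the function name and the workspace, lakehouse, and table names are hypothetical. Creating the shortcut itself is not shown.

```python
def onelake_table_uri(workspace: str, lakehouse: str, table: str) -> str:
    """Return the OneLake abfss:// URI of a table or table shortcut (hypothetical helper)."""
    for label, value in (("workspace", workspace), ("lakehouse", lakehouse), ("table", table)):
        if not value or "/" in value:
            raise ValueError(f"invalid {label} name: {value!r}")
    # Lakehouse items are addressed as <name>.Lakehouse; tables and table
    # shortcuts live under its Tables folder (assumes no lakehouse schemas).
    return (
        f"abfss://{workspace}@onelake.dfs.fabric.microsoft.com/"
        f"{lakehouse}.Lakehouse/Tables/{table}"
    )


# In a Fabric notebook, Spark could then read the shortcut in place, with no copy:
#   df = spark.read.format("delta").load(onelake_table_uri("SalesWorkspace", "SalesLakehouse", "orders"))
print(onelake_table_uri("SalesWorkspace", "SalesLakehouse", "orders"))
```

Because the shortcut only points at the Delta files Azure Databricks already wrote, the same table is readable from both platforms without duplicating storage.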

2. Regional availability and performance

Azure provides robust scalability and performance capabilities for Azure Databricks:

- Azure compute optimization for Azure Databricks: Azure offers a variety of compute options, including GPU-enabled instances that accelerate machine learning and deep learning workloads, optimized in collaboration with Databricks engineering. Azure Databricks spins up more than 10 million virtual machines (VMs) globally every day.

- Availability: Azure Databricks is currently available in 43 Azure regions worldwide, and that number is growing.

3. Security and compliance

All of Azure's enterprise-grade security and compliance measures apply to Azure Databricks, helping it meet customer requirements:

- Azure Security Center: Azure Security Center (now Microsoft Defender for Cloud) monitors the Azure Databricks environment and protects it against threats. It automatically collects, analyzes, and integrates log data from a variety of Azure resources. Security Center shows a list of prioritized security alerts, together with the information needed to investigate each problem quickly and recommendations for remediating an attack. Azure Databricks also provides encryption features for additional control over data.

- Azure Compliance Certifications: Azure holds industry-leading compliance certifications, ensuring Azure Databricks workloads meet regulatory standards. Azure Databricks is certified under PCI-DSS (Classic) and HIPAA (Databricks SQL Serverless, Model Serving).

- Azure confidential computing (ACC): Using Azure confidential computing, which is available only on Azure, with Azure Databricks enables end-to-end data encryption. Azure offers hardware-based Trusted Execution Environments (TEEs) that provide a higher level of security by encrypting data in use, as well as AMD-based Azure confidential virtual machines (VMs) that provide full VM encryption while minimizing performance impact.

- Encryption: Azure Databricks natively supports customer-managed keys from Azure Key Vault and Azure Key Vault Managed HSM (hardware security module). This feature provides an additional layer of security and control over encrypted data.
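When customer-managed keys are configured for Azure Databricks, the key is commonly supplied as a vault URI, a key name, and a key version, while Azure Key Vault and Managed HSM display a single key identifier such as `https://<vault-name>.vault.azure.net/keys/<key-name>/<key-version>`. The Python sketch below splits such an identifier into those three parts. The function and type names are hypothetical, the host suffixes cover only the public Azure cloud, and the sketch checks only the URL shape; it does not contact Key Vault or verify that the key exists.

```python
from typing import NamedTuple
from urllib.parse import urlparse


class KeyReference(NamedTuple):
    vault_uri: str
    key_name: str
    key_version: str


# Host suffixes for Azure Key Vault and Azure Key Vault Managed HSM (public cloud only).
_KEY_HOST_SUFFIXES = (".vault.azure.net", ".managedhsm.azure.net")


def parse_key_id(key_id: str) -> KeyReference:
    """Split a versioned key identifier into vault URI, key name, and key version."""
    parsed = urlparse(key_id)
    host = parsed.hostname or ""
    if parsed.scheme != "https" or not host.endswith(_KEY_HOST_SUFFIXES):
        raise ValueError(f"not a Key Vault or Managed HSM key identifier: {key_id!r}")
    # Expect exactly /keys/<name>/<version>; empty segments are ignored.
    parts = [p for p in parsed.path.split("/") if p]
    if len(parts) != 3 or parts[0] != "keys":
        raise ValueError(f"expected /keys/<name>/<version>, got {parsed.path!r}")
    return KeyReference(f"https://{host}/", parts[1], parts[2])


print(parse_key_id("https://contoso-kv.vault.azure.net/keys/dbx-cmk/0123abcd"))
```

Requiring an explicit version reflects that a versioned key identifier pins one specific key version rather than whatever version is current.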

4. Unique partnership: Databricks and Microsoft

One of the standout attributes of Azure Databricks is the unique partnership between Databricks and Microsoft. Here’s why it’s special:

- Joint engineering: Databricks and Microsoft collaborate on product development, ensuring tight integration and optimized performance. This includes dedicated Microsoft engineering resources that develop the Azure Databricks resource provider, workspace, and Azure infrastructure integrations and that manage customer support escalations, in addition to growing engineering investment in Azure Databricks.

- Service operation and support: As a first-party offering, Azure Databricks is available exclusively through the Azure portal, simplifying deployment and management for customers. Microsoft manages Azure Databricks and covers it under Microsoft support contracts, subject to the same SLAs and security policies as other Azure services, and works with Databricks support teams as needed to resolve support tickets quickly.

- Unified billing: Azure provides a unified billing experience, allowing customers to manage Azure Databricks costs transparently alongside other Azure services.

- Go-to-market and marketing: Co-marketing, go-to-market (GTM) collaboration, and co-selling between the two organizations, including events, funding programs, marketing campaigns, joint customer testimonials, account planning, and much more, provide elevated customer care and support throughout the customer's data journey.

- Commercial: Large strategic enterprises generally prefer to deal directly with Microsoft for sales offers, technical support, and partner enablement for Azure Databricks. In addition to Databricks sales teams, Microsoft has a global footprint of dedicated sales, business development, and planning coverage for Azure Databricks to meet the unique needs of every customer.

Let Azure Databricks help boost your productivity

Choosing the right data analytics platform is crucial. Azure Databricks, a powerful data analytics and AI platform, offers a well-integrated, managed, and secure environment for data professionals, resulting in increased productivity, cost savings, and ROI. With Azure's global presence, workload integration, security, compliance, and the unique partnership between Microsoft and Databricks, Azure Databricks is a compelling choice for organizations seeking efficiency, innovation, and intelligence from their data estate.

Source: microsoft.com