This blog discusses how the IT team at Tailwind Traders used enterprise-scale reference implementations for the cloud environment they are building.

Enterprise-scale landing zone architecture provides a strategic design path and target technical state for your Azure environment. It covers enterprise enrollment, identity, network topology, resource organization, governance, operations, business continuity and disaster recovery (BCDR), and deployment options. These landing zones follow design principles across the critical design areas of an organization's Azure environment and align with Azure platform roadmaps, so that new capabilities can be integrated.


By using the enterprise-scale architecture, Tailwind Traders takes advantage of prescriptive guidance and best practices for its Azure control plane.

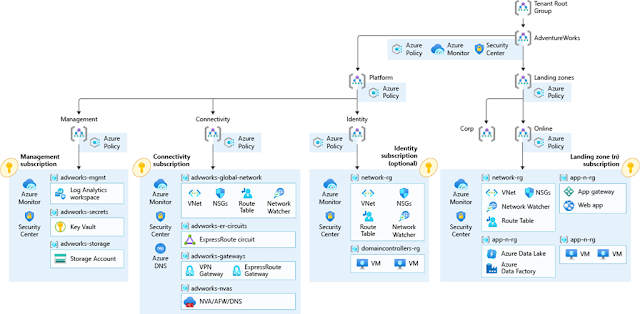

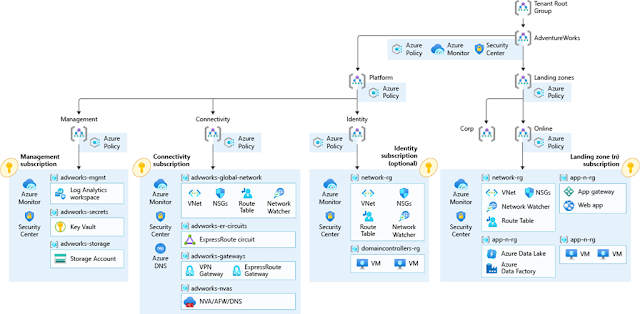

Cloud Adoption Framework enterprise-scale landing zone architecture

The enterprise-scale landing zone architecture has a modular design. This makes it simple to deploy both existing and new applications, and it lets Tailwind Traders start with a lighter deployment and scale it as their business needs grow.

The architecture addresses eight critical design areas:

◉ Enterprise Agreement (EA) enrollment and Azure Active Directory tenants

◉ Identity and access management

◉ Management group and subscription organization

◉ Network topology and connectivity

◉ Management and monitoring

◉ Business continuity and disaster recovery

◉ Security, governance, and compliance

◉ Platform automation and DevOps

To make implementing the enterprise-scale landing zone architecture straightforward, enterprise-scale offers reference implementations. Organizations can deploy them through the Azure portal or with infrastructure as code (IaC), using Azure Resource Manager (ARM) templates or Terraform to set up, configure, and manage the environment in an automated, repeatable way.
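To make the IaC option concrete, here is a minimal Python sketch that emits a tenant-scope ARM template for a management group hierarchy. It is not the template shipped with the reference implementations. The resource type `Microsoft.Management/managementGroups` and the `tenantResourceId()` template function come from ARM; the API version, the helper name `management_group_template`, its input format, and the `tailwind-*` group names are assumptions for illustration, so check them against the current ARM template reference before deploying anything.

```python
import json
from typing import Optional

# Schema for templates deployed at tenant scope, where management groups live.
TENANT_SCHEMA = "https://schema.management.azure.com/schemas/2019-08-01/tenantDeploymentTemplate.json#"
MG_TYPE = "Microsoft.Management/managementGroups"
MG_API_VERSION = "2021-04-01"  # assumption: pin whichever API version your tooling supports


def management_group_template(hierarchy: dict[str, Optional[str]]) -> dict:
    """Build an ARM template that creates the given management groups.

    `hierarchy` (a hypothetical input format) maps each management group name
    to its parent's name, or to None to leave it under the tenant root group.
    """
    resources = []
    for name, parent in hierarchy.items():
        resource = {
            "type": MG_TYPE,
            "apiVersion": MG_API_VERSION,
            "name": name,
            "properties": {"displayName": name},
        }
        if parent is not None:
            parent_id = f"[tenantResourceId('{MG_TYPE}', '{parent}')]"
            resource["properties"]["details"] = {"parent": {"id": parent_id}}
            # Only declare a dependency when the parent is created by this same template.
            if parent in hierarchy:
                resource["dependsOn"] = [parent_id]
        resources.append(resource)
    return {"$schema": TENANT_SCHEMA, "contentVersion": "1.0.0.0", "resources": resources}


# Hypothetical hierarchy: a top-level group with platform and landing zone children.
template = management_group_template({
    "tailwind": None,
    "tailwind-platform": "tailwind",
    "tailwind-landing-zones": "tailwind",
})
print(json.dumps(template, indent=2))
```

The generated file would then be deployed at tenant scope, for example with `az deployment tenant create`; Terraform users would express the same hierarchy with the provider's own management group resources instead.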

Currently, enterprise-scale offers three reference implementations, all of which can be scaled without refactoring as requirements change.
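One way to read "without refactoring" is that each larger implementation is a superset of the foundation: the component lists later in this post describe both hub and spoke and Virtual WAN as the foundation plus additional pieces. The sketch below models that with Python sets. The component labels and the `upgrade_plan` helper are shorthand we chose for illustration, not resource names from the reference implementations.

```python
# Shorthand component labels taken from the lists in this post (illustrative only).
FOUNDATION = frozenset({
    "management-group-hierarchy",
    "platform-and-landing-zone-policies",
    "management-subscription",
    "online-landing-zone-subscription",
})

# Both hybrid implementations are described as "the foundation, and in addition...".
HUB_AND_SPOKE = FOUNDATION | {
    "connectivity-subscription-hub-vnet",
    "identity-subscription",
    "corp-management-group",
    "online-management-group",
}
VIRTUAL_WAN = FOUNDATION | {
    "connectivity-subscription-virtual-wan",
    "identity-subscription",
    "corp-management-group",
    "online-management-group",
}


def upgrade_plan(current: frozenset[str], target: frozenset[str]) -> tuple[set[str], set[str]]:
    """Return (components to add, existing components that would have to change)."""
    return set(target - current), set(current - target)


to_add, to_rework = upgrade_plan(FOUNDATION, HUB_AND_SPOKE)
print(sorted(to_add))
print(to_rework)  # set(): moving up from the foundation is purely additive
```

In this model, comparing hub and spoke with Virtual WAN the same way shows that only the connectivity subscription differs between the two.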

Enterprise-scale foundation

The enterprise-scale foundation reference implementation gives organizations a starting point for Azure landing zones. It lets organizations such as Tailwind Traders start with what they need and scale later as business requirements evolve. It suits organizations that want to adopt landing zones in Azure but don't need hybrid connectivity to their on-premises infrastructure at first. Because enterprise-scale is modular, hybrid connectivity can be added later, when business requirements change, without refactoring the Azure environment design.

Figure 1: Enterprise-scale foundation architecture

This architecture includes and deploys:

◉ A scalable management group hierarchy aligned to core platform capabilities, so you can operate at scale with centrally managed Azure role-based access control (RBAC) and Azure Policy while keeping the platform and workloads clearly separated.

◉ Azure Policies to enable autonomy for the platform and the landing zones.

◉ An Azure subscription dedicated to management, which uses Azure Policy to enable core platform capabilities at scale, such as Log Analytics, an Automation account, Azure Security Center, and Azure Sentinel.

◉ A landing zone subscription for Azure-native, internet-facing applications and resources, with workload-specific Azure Policy assignments.
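The foundation relies on the fact that Azure Policy and RBAC assignments made at a management group are inherited by every management group and subscription beneath it. The Python model below shows how that inheritance keeps platform and workload settings apart. It is a hypothetical sketch, not an Azure SDK: the `Scope` class, the group names, and the policy and role labels are all invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Scope:
    """A management group or subscription in a hypothetical hierarchy model."""
    name: str
    parent: Optional["Scope"] = None
    policies: set[str] = field(default_factory=set)
    role_assignments: set[str] = field(default_factory=set)

    def effective(self, attribute: str) -> set[str]:
        """Union of `attribute` assignments on this scope and all of its ancestors."""
        node, result = self, set()
        while node is not None:
            result |= getattr(node, attribute)
            node = node.parent
        return result


root = Scope("tailwind", policies={"allowed-locations"},
             role_assignments={"platform-team:Owner"})
platform = Scope("platform", parent=root, policies={"deploy-log-analytics-agent"})
management = Scope("management-subscription", parent=platform)
landing_zones = Scope("landing-zones", parent=root, policies={"require-workload-tags"})
online = Scope("online-subscription", parent=landing_zones,
               role_assignments={"app-team:Contributor"})

print(sorted(management.effective("policies")))
print(sorted(online.effective("policies")))
print(sorted(online.effective("role_assignments")))
```

Settings assigned at the top-level group reach every subscription, while the platform-only policy never reaches the online landing zone: that is the "clear separation" the hierarchy is meant to provide.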

Enterprise-scale hub and spoke

The enterprise-scale hub and spoke reference implementation includes the enterprise-scale foundation and adds hybrid connectivity with Azure ExpressRoute or a virtual private network (VPN), along with a network architecture based on the traditional hub and spoke topology. This allows Tailwind Traders to build on the foundational landing zone and connect their on-premises datacenters and branch offices.
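Hybrid connectivity and VNet peering both depend on address spaces that don't overlap: peered virtual networks cannot share address ranges, and overlapping on-premises and Azure ranges lead to routing conflicts. A quick pre-deployment check could look like the following Python sketch, which uses only the standard `ipaddress` module. The `find_overlaps` helper, the network names, and the CIDR ranges are a hypothetical address plan, not values from the reference implementation.

```python
import ipaddress
from itertools import combinations


def find_overlaps(networks: dict[str, str]) -> list[tuple[str, str]]:
    """Return every pair of named networks whose CIDR ranges overlap."""
    parsed = {name: ipaddress.ip_network(cidr) for name, cidr in networks.items()}
    return [
        (a, b)
        for (a, net_a), (b, net_b) in combinations(parsed.items(), 2)
        if net_a.overlaps(net_b)
    ]


# Hypothetical address plan for Tailwind Traders.
plan = {
    "on-premises-datacenter": "10.0.0.0/16",
    "branch-office": "10.1.0.0/24",
    "hub-vnet": "10.100.0.0/22",
    "corp-spoke-1": "10.101.0.0/24",
    "corp-spoke-2": "10.101.0.128/25",  # planning mistake: falls inside corp-spoke-1
}
print(find_overlaps(plan))  # [('corp-spoke-1', 'corp-spoke-2')]
```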

Figure 2: Enterprise-scale with hub and spoke architecture

This architecture includes the enterprise-scale foundation, and in addition, deploys:

◉ An Azure subscription dedicated to connectivity, which deploys core Azure networking resources such as a hub virtual network, Azure Firewall (optional), an Azure ExpressRoute gateway (optional), a VPN gateway (optional), and Azure Private DNS zones for Private Link.

◉ An Azure subscription dedicated to identity, for organizations that need to run Active Directory domain controllers in a dedicated subscription (optional).

◉ A landing zone management group for corp-connected applications that require connectivity to on-premises networks, to other landing zones, or to the internet through shared services in the hub virtual network.

◉ A landing zone management group for internet-facing online applications, where a virtual network is optional and hybrid connectivity is not required.

◉ Landing zone subscriptions for Azure-native, internet-facing online applications and resources.

◉ Landing zone subscriptions for corp-connected applications and resources, including a virtual network that will be connected to the hub through VNet peering.

◉ Azure Policies for online and corp-connected landing zones.
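VNet peering is not transitive: two spokes that are each peered with the hub cannot reach each other through the hub unless something in the hub forwards their traffic, typically Azure Firewall or a network virtual appliance combined with user-defined routes. The simplified Python model below captures only that rule. The `peer` and `can_reach` helpers and the VNet names are hypothetical, and the model ignores gateway transit to on-premises networks.

```python
HUB = "hub-vnet"


def peer(a: str, b: str) -> frozenset[str]:
    """A peering is symmetric, so store it as an unordered pair."""
    return frozenset((a, b))


def can_reach(src: str, dst: str, peerings: set[frozenset[str]], hub_forwards: bool) -> bool:
    """Decide whether src can reach dst in a simplified hub-and-spoke model."""
    if peer(src, dst) in peerings:
        return True  # directly peered VNets can always talk
    # Peering is non-transitive: spokes peered only with the hub need a
    # forwarder in the hub (for example Azure Firewall plus user-defined routes).
    via_hub = peer(src, HUB) in peerings and peer(HUB, dst) in peerings
    return hub_forwards and via_hub


peerings = {peer(HUB, "corp-spoke-1"), peer(HUB, "corp-spoke-2")}
print(can_reach("corp-spoke-1", HUB, peerings, hub_forwards=False))             # True
print(can_reach("corp-spoke-1", "corp-spoke-2", peerings, hub_forwards=False))  # False
print(can_reach("corp-spoke-1", "corp-spoke-2", peerings, hub_forwards=True))   # True
```

In this model, the `hub_forwards` flag plays the role of the optional Azure Firewall deployed in the connectivity subscription.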

Enterprise-scale Virtual WAN

The enterprise-scale Virtual WAN (wide-area network) reference implementation includes the foundation and adds Azure Virtual WAN, Azure ExpressRoute, and VPN. This allows Tailwind Traders and other organizations to connect on-premises datacenters, branch offices, factories, retail stores, and other edge locations, and to take advantage of a global transit network.

Figure 3: Enterprise-scale Virtual WAN architecture

This architecture includes the enterprise-scale foundation, and in addition, deploys:

◉ An Azure subscription dedicated to connectivity, which deploys core networking resources such as Azure Virtual WAN, Azure Firewall, and Azure Firewall policies.

◉ An Azure subscription dedicated to identity, where customers can deploy the Active Directory domain controllers their environment requires.

◉ A landing zone management group for corp-connected applications that require hybrid connectivity. This is where you create the subscriptions that host your corp-connected workloads.

◉ A landing zone management group for internet-facing online applications that don't require hybrid connectivity. This is where you create the subscriptions that host your online workloads.
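The global transit network in the Virtual WAN implementation comes from virtual hubs that, in a Standard virtual WAN, are connected to each other in a full mesh, so by default any VNet or branch attached to any hub can reach any other. The Python sketch below models that as a simple connectivity graph searched breadth-first. It is not a routing simulation, and the `build_vwan` and `reachable` helpers, hub names, and site names are hypothetical.

```python
from collections import defaultdict, deque
from itertools import combinations


def build_vwan(attachments: dict[str, str]) -> dict[str, set[str]]:
    """Build an undirected graph; `attachments` maps each VNet or branch to its virtual hub."""
    graph: dict[str, set[str]] = defaultdict(set)
    for endpoint, hub in attachments.items():
        graph[endpoint].add(hub)
        graph[hub].add(endpoint)
    # Assumption: Standard virtual WAN, where every pair of hubs is interconnected.
    for a, b in combinations(sorted(set(attachments.values())), 2):
        graph[a].add(b)
        graph[b].add(a)
    return graph


def reachable(graph: dict[str, set[str]], src: str, dst: str) -> bool:
    """Breadth-first search over the connectivity graph."""
    seen, queue = {src}, deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            return True
        for neighbor in graph.get(node, set()) - seen:
            seen.add(neighbor)
            queue.append(neighbor)
    return False


attachments = {
    "corp-vnet-west-europe": "hub-west-europe",
    "retail-store-amsterdam": "hub-west-europe",
    "corp-vnet-east-us": "hub-east-us",
    "factory-ohio": "hub-east-us",
}
wan = build_vwan(attachments)
sites = list(attachments)
print(all(reachable(wan, a, b) for a in sites for b in sites))  # True
```

Compared with the hub and spoke sketch, no extra forwarding flag is needed here: in this model, transit between sites on different hubs follows directly from the hub mesh.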

Source: microsoft.com