Saturday 29 April 2023

Managing IP with Microsoft Azure and Cliosoft

Thursday 27 April 2023

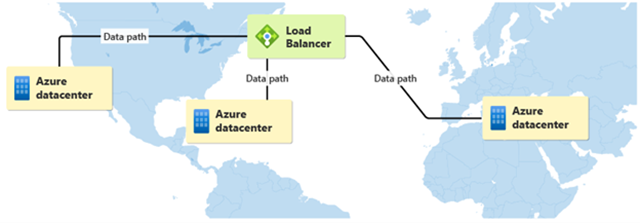

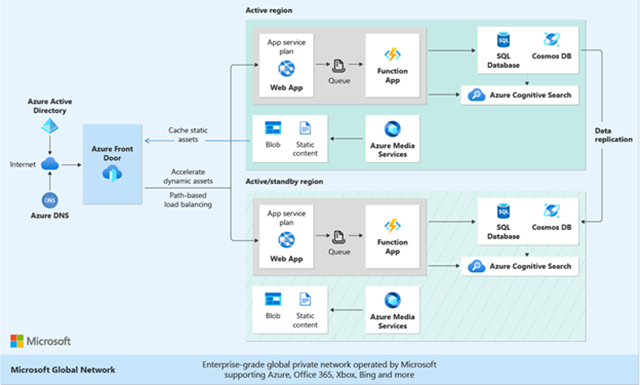

Choose the best global distribution solution for your applications with Azure

Choosing the right global traffic distribution solution

Tuesday 25 April 2023

Isovalent Cilium Enterprise in Azure Marketplace

Key capabilities for Azure Kubernetes Services customers

Start your journey with Isovalent Cilium Enterprise on Azure

The benefits of Isovalent Cilium Enterprise through Azure Marketplace

Saturday 22 April 2023

4 cloud cost optimization strategies with Microsoft Azure

1. Right sizing

2. Clean-up

3. Azure reservations and savings plans

4. Database and application tuning

Prove Your Azure Expertise with Microsoft DP-420 Certification

What Is the Microsoft DP-420 Certification Exam?

The DP-420 exam, Designing and Implementing Cloud-Native Applications Using Microsoft Azure Cosmos DB, is a professional certification exam that tests an individual's proficiency in designing, building, and maintaining applications backed by Azure Cosmos DB. Microsoft offers the certification, and it is intended for individuals who want to demonstrate their knowledge and expertise in using Azure Cosmos DB. The exam assesses the candidate's ability to design and implement data models and data distribution, integrate Azure Cosmos DB with other Azure services, and optimize and maintain an Azure Cosmos DB solution.

Who Should Take the Microsoft DP-420 Certification Exam?

To take this exam, the candidate is expected to have a strong understanding and hands-on experience in developing applications for Azure and working with Azure Cosmos DB database technologies. Specifically, the candidate should have the following knowledge and skills.

1. Proficiency in Developing Applications for Azure

The candidate should be well-versed in developing cloud-based applications using Azure services and tools such as Azure Functions, Azure App Service, Azure Storage, and Azure Event Grid.

2. Solid Understanding of Azure Cosmos DB Database Technologies

The candidate should have a thorough knowledge of the different data models supported by Cosmos DB, such as documents, graphs, and key-value. They should also be familiar with the features and capabilities of Cosmos DB, such as global distribution, partitioning, and indexing.

3. Experience Working with Azure Cosmos DB for NoSQL API

The candidate should have hands-on experience developing applications that use Azure Cosmos DB for NoSQL. This includes creating and managing databases and containers, performing CRUD (Create, Read, Update, Delete) operations, and executing queries with the SQL-like query language of the API for NoSQL (formerly called the SQL API).
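As a rough illustration of the operations listed above, the following Python sketch runs one create, read, update, query, and delete cycle against a container. It assumes the `azure-cosmos` Python SDK; the database name `store`, the container name `products`, the partition key path `/category`, and the sample item are hypothetical and chosen only for this example.

```python
def open_container(endpoint, key):
    """Connect and return a container (requires the azure-cosmos package)."""
    # Imported here so the rest of the sketch can be exercised without the SDK.
    from azure.cosmos import CosmosClient, PartitionKey

    client = CosmosClient(endpoint, credential=key)
    database = client.create_database_if_not_exists(id="store")  # hypothetical name
    return database.create_container_if_not_exists(
        id="products",                                # hypothetical name
        partition_key=PartitionKey(path="/category"),  # hypothetical key path
    )


def crud_round_trip(container):
    """Create, read, update, query, and delete one hypothetical item."""
    item = {"id": "p1", "category": "books", "name": "Cosmos DB primer", "price": 30}

    # Create: upsert inserts the item when the id does not exist yet.
    container.upsert_item(item)

    # Read: a point read needs both the item id and its partition key value.
    fetched = container.read_item(item="p1", partition_key="books")

    # Update: change a field and upsert the whole document again.
    fetched["price"] = 25
    container.upsert_item(fetched)

    # Query: parameterized SQL-like query scoped to a single partition.
    cheap_books = list(
        container.query_items(
            query="SELECT * FROM c WHERE c.category = @cat AND c.price < @max",
            parameters=[
                {"name": "@cat", "value": "books"},
                {"name": "@max", "value": 28},
            ],
            partition_key="books",
        )
    )

    # Delete: again addressed by id plus partition key value.
    container.delete_item(item="p1", partition_key="books")
    return cheap_books
```

To try it against a real account, pass the result of `open_container(endpoint, key)` to `crud_round_trip`; the endpoint and key come from your own Azure Cosmos DB account.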

With these skills and knowledge, the candidate can prepare effectively for the exam and increase their chances of passing it. Building them also helps professionally by demonstrating expertise in developing Azure Cosmos DB applications.

Microsoft DP-420 Exam Format

The certification is called Microsoft Certified: Azure Cosmos DB Developer Specialty, and the exam code is DP-420. The exam costs $165 (USD), and the duration is 120 minutes. There will be between 40 and 60 questions on the exam, and the passing score is 700 out of 1000 points. This exam is designed for individuals who want to demonstrate their expertise in developing solutions using Azure Cosmos DB.
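The duration and question count above imply a per-question time budget. The short Python sketch below works it out; the helper name `minutes_per_question` and the 10-minute review reserve are assumptions made for illustration, not part of the exam rules.

```python
def minutes_per_question(duration_min, questions, review_reserve_min=0):
    """Average minutes available per question after setting aside review time."""
    if questions <= 0:
        raise ValueError("questions must be positive")
    return (duration_min - review_reserve_min) / questions


DURATION_MIN = 120  # exam duration stated above

# The question count is given as a range, so check both ends of it.
for count in (40, 60):
    pace = minutes_per_question(DURATION_MIN, count, review_reserve_min=10)
    print(f"{count} questions: about {pace:.2f} minutes each")
```

With no review reserve, the budget ranges from 2 minutes per question (60 questions) to 3 minutes per question (40 questions).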

Exam Topics

The Microsoft Certified: Azure Cosmos DB Developer Specialty exam (DP-420) covers various topics related to developing solutions using Azure Cosmos DB. The skill areas and their approximate weights are:

- Design and Implement Data Models (35-40%)

- Design and Implement Data Distribution (5-10%)

- Integrate an Azure Cosmos DB Solution (5-10%)

- Optimize an Azure Cosmos DB Solution (15-20%)

- Maintain an Azure Cosmos DB Solution (25-30%)

These are the broad skill areas the exam covers. Review the full list of exam objectives and the study materials provided by Microsoft to make sure you are fully prepared.
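One way to turn the weights listed above into a study plan is to split your available hours in proportion to them. The Python sketch below uses the midpoint of each percentage range; the 40-hour total and the function name `allocate_hours` are assumptions for illustration only.

```python
# Weight ranges (percent) as listed for the exam's skill areas.
EXAM_WEIGHTS = {
    "Design and implement data models": (35, 40),
    "Design and implement data distribution": (5, 10),
    "Integrate an Azure Cosmos DB solution": (5, 10),
    "Optimize an Azure Cosmos DB solution": (15, 20),
    "Maintain an Azure Cosmos DB solution": (25, 30),
}


def allocate_hours(total_hours, weights=EXAM_WEIGHTS):
    """Split total_hours across topics in proportion to each range's midpoint."""
    midpoints = {topic: (low + high) / 2 for topic, (low, high) in weights.items()}
    # Midpoints need not sum to 100, so normalize by their actual total.
    scale = total_hours / sum(midpoints.values())
    return {topic: mid * scale for topic, mid in midpoints.items()}


plan = allocate_hours(40)  # hypothetical study budget
for topic, hours in plan.items():
    print(f"{topic}: {hours:.1f} h")
```

A proportional split is only a starting point; shifting hours toward the areas where you have the least hands-on experience is usually worthwhile.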

Why is Microsoft DP-420 Certification Necessary?

The DP-420 exam evaluates your proficiency in several technical areas of Azure Cosmos DB, a globally distributed, multi-model database service offered by Microsoft Azure. It measures your ability to perform the following tasks.

1. Design and Implement Data Models

You should be able to create and optimize data models to store and manage data in Azure Cosmos DB. This includes understanding the different data models supported by Cosmos DB, such as documents, graphs, and key-value.

2. Design and Implement Data Distribution

You should be able to configure and manage the replication and partitioning of data across multiple regions and availability zones to ensure high availability and disaster recovery.

3. Integrate an Azure Cosmos DB Solution

You should be able to integrate Cosmos DB with other Azure services and tools to create a complete end-to-end solution for your application.

4. Optimize an Azure Cosmos DB Solution

You should be able to fine-tune and optimize the performance of your Cosmos DB solution by selecting appropriate configurations and using features such as indexing and query optimization.

5. Maintain an Azure Cosmos DB Solution

You should be able to monitor and manage the health and performance of your Cosmos DB solution, troubleshoot issues, and implement backup and restore procedures.

By passing this exam, you can show your expertise in designing, implementing, and maintaining robust and scalable data solutions using Azure Cosmos DB. This can lead to career advancement opportunities and increased recognition in the industry.
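The data distribution task above depends heavily on the choice of partition key. The Python sketch below is a toy model, not Cosmos DB's actual algorithm: it hashes partition key values into a fixed number of buckets with MD5 (an assumed stand-in for the service's internal hashing) to show how a high-cardinality key spreads items while a low-cardinality key concentrates them. The `orders` data and the field names `userId` and `country` are hypothetical.

```python
import hashlib
from collections import Counter


def bucket_for(partition_key_value, bucket_count):
    """Map a partition key value to a bucket via a stable hash (toy stand-in)."""
    digest = hashlib.md5(str(partition_key_value).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % bucket_count


def distribution(items, key_field, bucket_count=4):
    """Count how many items land in each bucket for the chosen key field."""
    counts = Counter(bucket_for(item[key_field], bucket_count) for item in items)
    return [counts.get(bucket, 0) for bucket in range(bucket_count)]


# Hypothetical data: 1000 orders, each from a distinct user, 90% from one country.
orders = [
    {"id": str(i), "userId": f"user-{i}", "country": "US" if i % 10 else "DE"}
    for i in range(1000)
]

by_user = distribution(orders, "userId")      # many distinct key values
by_country = distribution(orders, "country")  # only two distinct key values

print("userId :", by_user)
print("country:", by_country)
```

Because `country` has only two distinct values, its items can occupy at most two buckets no matter how many exist, which is the kind of skew a well-chosen partition key is meant to avoid.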

Microsoft DP-420 Exam Preparation

1. DP-420 Exam Study Materials

To prepare for the DP-420 certification exam, candidates can use various study materials, including Microsoft documentation, Azure Cosmos DB samples, whitepapers, and Microsoft Official Courseware (MOC).

2. Microsoft DP-420 Exam Practice Tests and Sample Questions

Practice tests and sample questions are essential for preparing for the DP-420 certification exam. Candidates can find sample questions and practice tests on the Microsoft Learn website or from third-party providers.

3. Microsoft DP-420 Exam Study Tips

Here are some study tips to help candidates prepare for the DP-420 certification exam.

- Review the exam objectives and topics thoroughly.

- Create a study plan and allocate time for each topic.

- Practice hands-on exercises using Azure Cosmos DB.

- Collaborate with other professionals or join study groups to discuss exam topics and share knowledge.

- Take regular breaks to avoid burnout.

4. Recommended DP-420 Exam Study Resources

Here are some recommended study resources for the DP-420 certification exam:

- Microsoft Azure Cosmos DB documentation

- Azure Cosmos DB samples and tutorials

- Microsoft Official Courseware (MOC)

- Online learning platforms such as Udemy, Coursera, and edX

- Microsoft DP-420 Exam Study Guide from Microsoft Press

Exam Tips and Tricks

1. Time Management

Time management is crucial when taking the DP-420 certification exam. Candidates should allocate their time wisely and ensure enough time to answer all the questions. They should also monitor the clock and pace themselves throughout the exam.

2. Answering Strategies

When answering exam questions, candidates should read each question carefully and understand what is being asked before attempting to answer. They should also eliminate incorrect answers to increase their chances of selecting the correct answer. If unsure, they should use their best judgment to choose the most suitable option.

3. Common Mistakes to Avoid

Here are some common mistakes that candidates should avoid when taking the DP-420 certification exam.

- Not understanding the exam objectives and topics.

- Rushing through the exam without reading questions carefully.

- Not managing time effectively.

- Overthinking questions and second-guessing answers.

- Not reviewing answers before submitting the exam.

4. Best Practices for DP-420 Exam Preparation

Here are some best practices to help candidates prepare for the DP-420 certification exam.

- Use a variety of study materials, including practice tests and sample questions.

- Create a study schedule and stick to it.

- Practice hands-on exercises using Azure Cosmos DB.

- Take breaks and avoid burnout.

- Join study groups or collaborate with other professionals to share knowledge and insights.

Conclusion

In conclusion, the DP-420 certification exam is valuable for professionals seeking to demonstrate their proficiency in designing and building cloud-native applications with Azure Cosmos DB. By passing this exam, candidates can validate their knowledge and skills, enhance their career prospects, and differentiate themselves from other job candidates. However, passing the exam requires thorough preparation, including reviewing exam objectives and topics, practicing with sample questions and exercises, and developing effective time management and answering strategies. With dedication and effort, candidates can succeed in the DP-420 certification exam and take the next step in their career as Azure Cosmos DB developers.

Thursday 20 April 2023

Unleash the power of APIs: Strategies for innovation

API-first business transformation

Market trends and API-driven innovation

Enterprise scale API management with Azure

API-first approach in the mortgage industry

Comprehensive defense-in-depth security with Azure API Management

Customer-centric healthcare with APIs

Line of business innovation with Azure API Management

Saturday 15 April 2023

How 5G and wireless edge infrastructure power digital operations with Microsoft

What can 5G infrastructure deliver?

How is 5G being used by enterprises today?

How are Microsoft and Intel empowering 5G solutions?

Thursday 13 April 2023

Azure Space technologies advance digital transformation across government agencies

Viasat Real-Time Earth general availability on Azure Orbital Ground Station

“Viasat Real-Time Earth is enabling remote sensing satellite operators who are pushing the envelope of high-rate downlinks. Our strong relationship with Azure Orbital enables those same customers, through increased access to our ground service over the Azure Orbital marketplace and a dependable, high-speed terrestrial network, to reduce the time it takes to downlink and deliver massive amounts of data.”—John Williams, Vice President Viasat Real-Time Earth.

True Anomaly

"Together, True Anomaly, Viasat, and Microsoft will employ cutting-edge modeling, simulation, and visualization tools available to train Space Force guardians and other operators. Our partnership will extend to satellite control, leveraging Microsoft Azure Orbital to provide seamless and efficient satellite management solutions for our fleet of Autonomous Orbital Vehicles. By joining forces, we unlock a path to disrupt space operations and training for years to come."— Even Rogers, Co-founder and CEO of True Anomaly.
