The exponential growth of datasets has brought growing scrutiny of how data is exposed, from both a consumer data privacy and a compliance perspective. In this context, confidential computing becomes an important tool to help organizations meet their privacy and security needs surrounding business and consumer data.

Confidential computing technology encrypts data in memory and only processes it once the cloud environment is verified, preventing access to the data by cloud operators, malicious admins, and privileged software such as the hypervisor. It helps keep data protected throughout its lifecycle: in addition to existing solutions that protect data at rest and in transit, data is now protected while in use.

Thanks to confidential computing, organizations across the world can now unlock opportunities that were not possible before. For example, they can now benefit from multi-party data analytics and machine learning that combine datasets from parties that would have been unwilling or unable to share them, keeping data private across participants. For instance, RBC created a platform for privacy-preserving analytics that lets customers opt in to better-optimized discounts. The platform generates insights into consumer purchasing preferences by confidentially combining RBC’s credit and debit card transactions with retailer data on the specific items consumers purchased.
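To make the multi-party idea concrete, here is a minimal Python sketch of the kind of logic that could run inside a trusted environment: two datasets are joined, and only aggregate counts leave the function. It is an illustration under stated assumptions, not a description of how RBC's platform works. The function name, the `txn_id`, `customer_id`, and `item` fields, and the `min_group_size` threshold are all hypothetical.

```python
from collections import defaultdict


def combined_insights(bank_rows, retailer_rows, min_group_size=2):
    """Join two private datasets and return only aggregate counts per item.

    Hypothetical sketch: in a real deployment this logic would run inside
    an attested trusted execution environment, and neither party would see
    the other's raw rows.
    """
    # Index retailer purchases by the shared (hypothetical) transaction id.
    items_by_txn = {row["txn_id"]: row["item"] for row in retailer_rows}

    # Collect the distinct customers who bought each item.
    customers_per_item = defaultdict(set)
    for row in bank_rows:
        item = items_by_txn.get(row["txn_id"])
        if item is not None:
            customers_per_item[item].add(row["customer_id"])

    # Release only counts, and suppress groups too small to hide an individual.
    return {
        item: len(customers)
        for item, customers in customers_per_item.items()
        if len(customers) >= min_group_size
    }
```

Returning only thresholded counts is one simple way to keep individual rows private across participants; a production system would pair this with the attestation and key-release controls described later in this article.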

Industry leadership and standardization

Microsoft has long been a thought leader in the field of confidential computing. Azure introduced “confidential computing” in the cloud when we became the first cloud provider to offer confidential computing virtual machines and confidential containers support in Kubernetes for customers to run their most sensitive workloads inside Trusted Execution Environments (TEEs). Microsoft is also a founding member of the Confidential Computing Consortium (CCC), a group that brings together hardware manufacturers, cloud providers, and solution vendors to jointly work on ways to improve and standardize data protection across the tech industry.

Confidential computing foundations

Our bar for confidentiality aligns with and extends the bar set by the CCC to provide a comprehensive foundation for confidential computing. We strive to provide customers with the technical controls to isolate data from Microsoft operators, their own operators, or both. In Azure, we have confidential computing offerings that go beyond hypervisor isolation between customer tenants to help protect customer data from access by Microsoft operators. We also have confidential computing with secure enclaves to additionally help prevent access from customer operators.

Our foundation for confidential computing includes:

◉ Hardware root-of-trust to ensure data is protected and anchored into the silicon. Trust is rooted to the hardware manufacturer, so even Microsoft operators cannot modify the hardware configurations.

◉ Remote attestation for customers to directly verify the integrity of the environment. Customers can verify that both the hardware and software on which their workloads run are approved versions and secured before allowing them to access data.

◉ Trusted launch to ensure virtual machines boot with authorized software, using remote attestation so that customers can verify this. It is available for all VMs, including confidential VMs, and brings Secure Boot and vTPMs to add defense against rootkits, bootkits, and malicious firmware.

◉ Memory isolation and encryption to ensure data is protected while processing. Azure offers memory isolation by VM, container, or application to meet the various needs of customers, and hardware-based encryption to prevent unauthorized viewing of data, even with physical access in the datacenter.

◉ Secure key management to ensure that keys stay encrypted during their lifecycle and are released only to authorized code.

The above components together form the foundations for what we consider to be confidential computing. And today, Azure has more confidential compute options spanning hardware and software than any other cloud vendor.
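The interplay between remote attestation and secure key release can be sketched in a few lines of Python. This is a toy model under loud assumptions, not Azure's implementation or API: `HARDWARE_KEY`, `measure`, `make_report`, and `release_key` are hypothetical names, and a shared-secret HMAC stands in for the asymmetric signature that real hardware produces with a key that never leaves the silicon.

```python
import hashlib
import hmac
import json
from typing import Optional, Set

# Hypothetical stand-in for a hardware-rooted signing key (assumption:
# real hardware signs reports asymmetrically and never exposes the key).
HARDWARE_KEY = b"demo-hardware-root-key"


def measure(code: bytes) -> str:
    """Return a SHA-256 measurement of the code loaded into the environment."""
    return hashlib.sha256(code).hexdigest()


def make_report(code: bytes) -> dict:
    """Simulate the hardware producing a signed attestation report."""
    body = json.dumps({"measurement": measure(code)}, sort_keys=True).encode()
    signature = hmac.new(HARDWARE_KEY, body, hashlib.sha256).hexdigest()
    return {"body": body, "signature": signature}


def release_key(report: dict, approved: Set[str], secret_key: bytes) -> Optional[bytes]:
    """Release the key only if the report is authentic and the code is approved."""
    expected = hmac.new(HARDWARE_KEY, report["body"], hashlib.sha256).hexdigest()
    # Reject reports whose signature does not match (tampered or forged).
    if not hmac.compare_digest(expected, report["signature"]):
        return None
    # Reject authentic reports for code that is not on the approved list.
    measurement = json.loads(report["body"])["measurement"]
    if measurement not in approved:
        return None
    return secret_key
```

In this sketch, a caller would build the `approved` set from the measurements of the workloads it trusts. `release_key` then returns the key for a report generated from one of those workloads and `None` for anything else, which mirrors the idea that keys are released only to authorized code.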

Innovative new hardware

Our new Intel-based DCsv3 confidential VMs include Intel SGX, which implements hardware-protected application enclaves. Developers can use SGX enclaves to reduce the amount of code that has access to sensitive data to a minimum. Additionally, we will enable Total Memory Encryption-Multi-Key (TME-MK) so that each VM can be secured with a unique hardware key.

Our new AMD-based DCasv5/ECasv5 confidential VMs use Secure Encrypted Virtualization-Secure Nested Paging (SEV-SNP) to provide hardware-isolated virtual machines that protect data from other virtual machines, the hypervisor, and host management code. Customers can lift and shift existing virtual machines without changing code and optionally leverage enhanced disk encryption with keys they manage or that Microsoft manages.

To support containerized workloads, we are making all of our confidential VMs available in Azure Kubernetes Service (AKS) as a worker node option. Customers can now protect their containers with Intel SGX or AMD SEV-SNP technologies.

Azure’s memory encryption and isolation options provide stronger and more comprehensive protections for customer data than any other cloud.

Customer successes across industries

Many organizations are already leveraging the great data privacy and security benefits of Azure confidential computing.

Secure AI Labs have been using a private preview of our AMD-based virtual machines to create a platform where healthcare researchers can more easily collaborate with healthcare providers to advance research. Luis Huapaya, VP of Engineering at Secure AI Labs Inc, mentions, “Because of Azure confidential computing, Secure AI Labs can realize all of the benefits of running in Azure without ever sacrificing on security. One could argue that running a virtual payload within Azure confidential computing might be more secure than running in a private server on-premise. It also offers remote attestation, a pivotal security feature because it provides a virtual payload the ability to give cryptographic proof of its identity and verify it’s running inside an enclave. Azure confidential computing with AMD SEV-SNP makes our job a lot easier.”

While regulated industries have been the early adopters due to compliance needs and highly sensitive data, we are seeing growing interest across industries, from manufacturing to retail and energy.

Signal Messenger, a worldwide messaging app known for high security and privacy, leverages Azure confidential computing with Intel SGX to protect customer data, such as contact info. Jim O’Leary, VP of Engineering at Signal says, “To meet the security and privacy expectations of millions of people every day, we utilize Azure confidential computing to provide scalable, secure environments for our services. Signal puts users first, and Azure helps us stay at the forefront of data protection with confidential computing.”

We are excited to see organizations bring more workloads to Azure with confidence in the data protection of Azure confidential computing to meet the privacy needs of their customers.

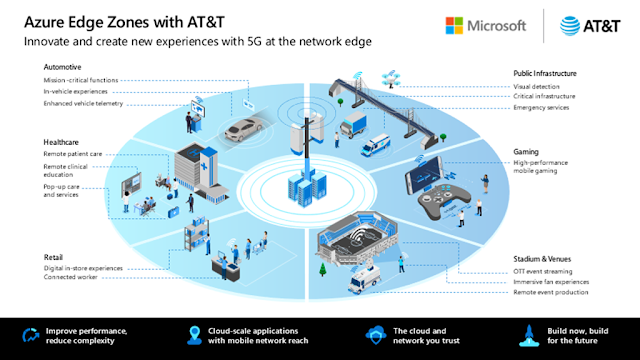

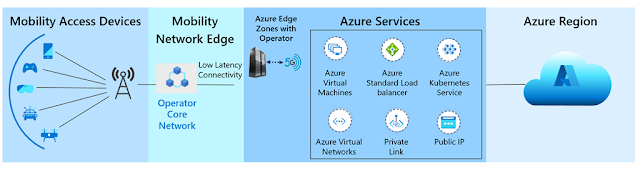

Azure confidential cloud

Azure is the world’s computer from cloud to edge. Customers of all sizes, across all industries, want to innovate, build, and securely operate their applications across multi-cloud, on-premises, and edge. Just as HTTPS has become pervasive for protecting data during internet web browsing, here at Azure, we believe that confidential computing will be a necessary ingredient for all computing infrastructure.

Our vision is to transform the Azure cloud into the Azure confidential cloud, moving from computing in the clear to computing confidentially across the cloud and edge. We want to empower customers to achieve the highest levels of privacy and security for all their workloads.

Alongside our $20 billion investment over the next five years in advancing our security solutions, we will partner with hardware vendors and innovate within Microsoft to bring the highest levels of data security and privacy to our customers. In our journey to become the world’s leading confidential cloud, we will drive confidential computing innovations horizontally across our Azure infrastructure and vertically through all the Microsoft services that run on Azure.

Source: microsoft.com