- Accelerated cloud savings: As the leading provider for SAP workloads, let us manage your infrastructure as you streamline your cloud spending.

- AI intelligence built-in: Harness the power of AI-powered insights to make data-driven decisions that drive your business forward.

- Boost productivity and innovation: Integrated apps streamline your team’s workflow and automate repetitive business processes.

- Enhanced protection: Our multi-layered cloud security ensures your SAP workloads run smoothly, backed by integrated Azure recovery services.

Thursday, 13 June 2024

Unlock new potential for your SAP workloads on Azure with these learning paths

Thursday, 8 February 2024

Reflecting on 2023—Azure Storage

- The storage platform now processes more than 1 quadrillion (that’s 1000 trillion!) transactions a month with over 100 exabytes of data read and written each month. Both numbers were sharply higher compared to the beginning of 2023.

- Premium SSD v2 disks, our new general-purpose block storage tailored for SQL, NoSQL databases, and SAP, grew capacity by 100 times.

- The total transactions of Premium Files, our file offering for Azure Virtual Desktop (AVD) and SAP, grew by more than 100% year over year (YoY).
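
For a sense of scale, the monthly transaction figure above can be converted to a per-second rate. This is a rough sketch that assumes a 30-day month; the constant and function names are illustrative and do not come from the original post.

```python
# Back-of-the-envelope rate implied by "1 quadrillion transactions a month".
TRANSACTIONS_PER_MONTH = 10**15          # 1 quadrillion = 1,000 trillion
SECONDS_PER_MONTH = 30 * 24 * 60 * 60    # assumption: a 30-day month


def transactions_per_second(per_month: int = TRANSACTIONS_PER_MONTH) -> float:
    """Average transactions per second for a given monthly total."""
    return per_month / SECONDS_PER_MONTH


if __name__ == "__main__":
    print(f"{transactions_per_second():,.0f} transactions per second")
```

Under that assumption the average works out to roughly 386 million transactions every second.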

Focused innovations for new workloads

Optimizations for mission critical workloads

Expanding partner ecosystem

Industry contributions

Unparalleled commitment to quality

Saturday, 12 August 2023

Efficiently store data with Azure Blob Storage Cold Tier — now generally available

Cost effectiveness with cold tier

Seamless experience with cold tier

Empowering customers and partners to maximize savings

Thursday, 22 June 2023

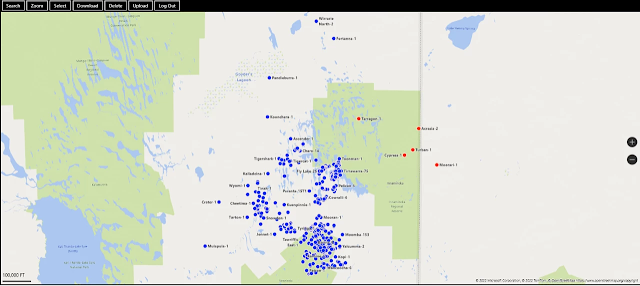

Azure Data Manager for Energy and LogScope: Enabling data integration within minutes

Revolutionizing access to OSDU Log Data with LogScope

Search and ingest data in minutes

How to work with HRP solutions on Azure Data Manager for Energy

Saturday, 20 May 2023

Transforming containerized applications with Azure Container Storage—now in preview

Why Azure Container Storage?

Leveraging Azure Container Storage

Saturday, 10 December 2022

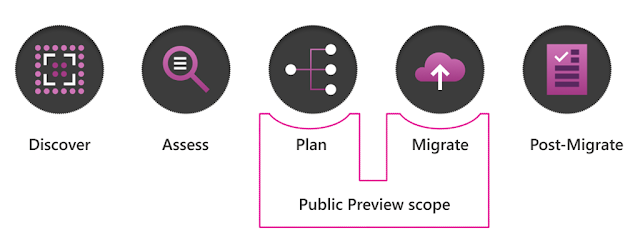

Azure Storage Mover–A managed migration service for Azure Storage

NFS share to Azure blob container

Cloud-driven migrations

Scale and performance

What’s next for Storage Mover?

Thursday, 10 March 2022

The anatomy of a datacenter—how Microsoft's datacenter hardware powers the Microsoft Cloud

Leading hardware engineering at a company known for its vast portfolio of software applications and systems is not as strange as it sounds, as the Microsoft Cloud depends on hardware as the foundation of trust, reliability, capacity, and performance, to make it possible for Microsoft and our customers to achieve more. The underlying infrastructure that powers our 60-plus datacenter regions across 140 countries consists of hardware and systems that sit within the physical buildings of datacenters, enabling millions of customers to execute critical and advanced workloads, such as AI and quantum computing, as well as unleashing future innovations.

Datacenter hardware development is imperative to the evolution of the Microsoft Cloud

As the Microsoft Cloud offers services and products to meet the world’s ever-growing computing demands, it is critical that we continuously design and advance hardware systems and infrastructure to deliver greater performance, higher efficiency, and more resiliency to customers—all with security and sustainability in mind. Today, our hardware engineering efforts and investments focus heavily on roadmap and lifecycle planning, sourcing and provisioning of servers, and innovating to deliver next-generation infrastructure for datacenters. In our new Hardware Innovation blog series, I’ll be sharing some of the hardware development and investments that are driving the most impact for the Microsoft Cloud and making Azure the trusted cloud that delivers innovative, reliable, and sustainable hybrid cloud solutions. But first, let’s look “under the hood” of a Microsoft datacenter:

From server to cloud: the end-to-end cloud hardware lifecycle

Our hardware planning starts with what customers want: capacity, differentiated services, cost-savings, and ultimately the ability to solve harder problems with the help of the Microsoft Cloud. We integrate key considerations such as customer feedback, operational analysis, technology vetting, and evaluation of disruptive innovations into our strategy and roadmap planning. This lets us improve existing hardware in our datacenters for compute, network architecture, and storage, while future-proofing innovative workloads for scale. Our engineers then design, build, test, and integrate software and firmware into hardware fleets that meet a stringent set of quality, security, and compliance requirements before deploying them into Microsoft’s datacenters across the globe.

Sourcing and provisioning cloud hardware, sustainably and securely

With Microsoft’s scale, the ways in which we provision, deploy, and decommission hardware parts have the potential to drive massive planetary impact. While we work with suppliers to reimagine a more resilient and efficient supply chain using technologies such as blockchain and digital twins, we also aim to have sustainability built into every step of the way. An example of our sustainability leadership is the execution of Microsoft Circular Centers, where servers and hardware that are being decommissioned are repurposed—efforts that are expected to increase the reuse of servers and components by up to 90 percent by 2025. I will be sharing more on our Circular Centers progress this year. We also have in place the Azure Security and Resiliency Architecture (ASRA) as an approach to drive security and resiliency consistently and comprehensively across the Microsoft Cloud infrastructure supply chain.

Innovating to deliver next-generation datacenter infrastructure

We are investigating and developing technology that would allow datacenters to be more agile, efficient, and sustainable to operate while meeting the computing demands of the future. We showcased development in datacenter energy efficiency, such as our two-phase liquid immersion cooling, allowing more densely packed servers to fit in smaller spaces, and addressing processor overclocking for higher computing efficiency with a lower carbon footprint. We also continue to invest in and develop workload-optimized infrastructure—from servers, racks, systems, to datacenter designs—for more custom general-purpose offerings as well as specialized compute such as AI, high-performance computing, quantum, and beyond.

Building the most advanced and innovative hardware for the intelligent cloud and the intelligent edge

The journey of building Microsoft Cloud’s hardware infrastructure is an exciting and humbling one as we see continual advancement in technology to meet the needs of the moment. I have been in the hardware industry for more than thirty years—yet, I’m more excited each day as I work alongside leaders and experts on our team, with our partners across the industry, and with the open source community. Like many of the cloud services that sit on top of it, Microsoft’s hardware engine runs on consistency in quality, reliability, and scalability. Stay tuned as we continue to share more deep dives and updates of our cloud hardware development, progress, and results—and work to drive forward technology advancement, enable new capabilities, and push the limits of what we can achieve in the intelligent cloud and the intelligent edge.

Source: azure.microsoft.com

Sunday, 12 September 2021

Improve availability with zone-redundant storage for Azure Disk Storage

As organizations continue to accelerate their cloud adoption, the need for reliable infrastructure is critical to ensure business continuity and avoid costly disruptions. Azure Disk Storage provides maximum resiliency for all workloads with an industry-leading zero percent annual failure rate and single-instance service-level agreements (SLA) for all disk types, including a best-in-class single-instance SLA of 99.9 percent availability for Azure Virtual Machines using Azure Premium SSD or Azure Ultra Disk Storage.

Today, we continue our investments to further improve the reliability of our infrastructure with the general availability of zone-redundant storage (ZRS) for Azure Disk Storage. ZRS enables you to increase availability for your critical workloads by providing the industry’s only synchronous replication of block storage across three zones in a region, enabling your disks to tolerate zonal failures which may occur due to natural disasters or hardware issues. ZRS is currently supported for Azure Premium SSDs and Azure Standard SSDs.

We have seen strong interest and great feedback from many enterprise customers from various industries during our preview. These customers are planning to use ZRS for disks to provide higher availability for a wide range of scenarios such as clustering for SAP and SQL Server workloads, container applications, and legacy applications.

Increase availability for your clustered or distributed applications

Last year, we announced the general availability of shared disks for Azure Disk Storage, which is the only shared block storage in the cloud that supports both Windows and Linux-based clustered and distributed applications. This unique offering allows a single disk to be simultaneously attached and used from multiple virtual machines (VMs), enabling you to run your most demanding enterprise applications in the cloud, such as clustered databases, parallel file systems, persistent containers, and machine learning applications, without compromising on well-known deployment patterns for fast failover and high availability. Customers can now further improve the availability of their clustered applications, like SQL failover cluster instance (FCI) and SAP ASCS/SCS leveraging Windows Server Failover Cluster (WSFC), with the combination of shared disks and ZRS.

Using Availability Zones for VMs, you can allocate primary and secondary VMs in different zones for higher availability and attach a shared ZRS disk to the VMs in different zones. If a primary VM goes down due to a zonal outage, WSFC will quickly fail over to the secondary VM, providing increased availability to your application. Customers can also use ZRS with shared disks for their Linux-based clustering applications that use IO fencing with SCSI persistent reservations. Shared disks are now available on all Premium SSD and Standard SSD sizes, enabling you to optimize for different price and performance options.

Take advantage of ZRS disks with multi-zone Azure Kubernetes Service clusters

Customers can take advantage of ZRS disks for their container applications hosted on multi-zone Azure Kubernetes Service (AKS) for higher reliability. If a zone goes down, AKS will automatically fail over the stateful pods to a healthy zone by detaching and attaching ZRS disks to nodes in the healthy zone. We recently released the ZRS disks support in AKS through the CSI driver.
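
As a hedged illustration of how a cluster might request ZRS-backed volumes, the sketch below builds a Kubernetes StorageClass manifest and prints it as JSON, which `kubectl apply -f` accepts. The class name is hypothetical. The provisioner `disk.csi.azure.com` and the `skuName` value `Premium_ZRS` reflect the Azure Disk CSI driver's parameters as we understand them, so check them against the current driver documentation before use.

```python
import json


def zrs_storage_class(name: str = "managed-csi-premium-zrs",
                      sku: str = "Premium_ZRS") -> dict:
    """Build a StorageClass manifest for ZRS-backed Azure disks.

    The default name is a made-up example; the sku is an assumption taken
    from the Azure Disk CSI driver's documented skuName values.
    """
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "disk.csi.azure.com",
        "parameters": {"skuName": sku},
        "reclaimPolicy": "Delete",
        # Delay binding until a pod is scheduled, so the volume is created
        # with knowledge of where the pod will run.
        "volumeBindingMode": "WaitForFirstConsumer",
        "allowVolumeExpansion": True,
    }


if __name__ == "__main__":
    print(json.dumps(zrs_storage_class(), indent=2))
```

A PersistentVolumeClaim that names this class would then receive a ZRS disk that can be reattached to a node in another zone.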

Achieve higher availability for legacy applications

You can achieve high availability for your workloads using application-level replication across two zones (such as SQL Always On). However, if you are using industry-specific proprietary software or legacy applications like older versions of SQL Server, which don't support application-level synchronous replication, ZRS disks will provide improved availability through storage-level replication. For example, if a zone goes down due to natural disasters or hardware failures, the ZRS disk will continue to be operational. If your VM in the affected zone becomes unavailable, you could use a virtual machine in another zone and attach the same ZRS disk.

Build highly available, cost-effective solutions

To build highly available software-as-a-service (SaaS) solutions, independent software vendors (ISVs) can take advantage of ZRS disks to increase availability while also reducing costs. Previously, ISVs would need to host VMs in two zones and replicate data between the VMs. This resulted in extra costs as they had to deploy twice the amount of locally redundant storage (LRS) disks to maintain two copies of data in two zones and an additional central processing unit (CPU) for replicating the data to two zones. ISVs can now use shared ZRS disks to deliver a more cost-effective solution with 1.5 times lower costs on the disks and no additional replication costs. In addition, ZRS disks also offer lower write latency than VM to VM replication of the data as the replication is performed by the platform. NetApp describes the value that ZRS provides them and their customers:
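
The disk-cost comparison above can be sketched with simple arithmetic. The snippet assumes that "1.5 times lower" means the old disk spend divided by the new disk spend equals 1.5, and it uses a made-up LRS price; neither assumption comes from published pricing.

```python
def disk_costs(lrs_price: float, savings_factor: float = 1.5) -> tuple[float, float]:
    """Return (cost of two LRS disks, cost of one shared ZRS disk).

    lrs_price is a hypothetical monthly price for one LRS disk.
    savings_factor is our reading of "1.5 times lower costs".
    """
    two_lrs_disks = 2 * lrs_price
    shared_zrs_disk = two_lrs_disks / savings_factor
    return two_lrs_disks, shared_zrs_disk


if __name__ == "__main__":
    before, after = disk_costs(lrs_price=100.0)  # illustrative price only
    print(f"Two LRS disks: {before:.2f}, one shared ZRS disk: {after:.2f}")
```

The sketch covers disk spend only; the compute that was previously needed for VM-to-VM replication would be an additional saving.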

“Many customers wish to have their data replicated cross-zone to improve business continuity against zonal failures and reduce downtime. ZRS for Azure Disk Storage combined with shared disks is truly a game-changer for us as it enables us to improve the availability of our solution, allows applications to achieve their full performance, and reduces replication costs by offloading the replication to the backend infrastructure. NetApp is excited to extend its CVO High Availability solution using ZRS disks as this helps us provide a comprehensive high availability solution at lower costs for our mutual customers.”—Rajesh Rajaraman, Senior Technical Director at NetApp

Performance for ZRS disks

The IOPS and bandwidth provided by ZRS disks are the same as the corresponding LRS disks. For example, a P30 (1 TiB) LRS Premium SSD disk provides 5,000 IOPS and 200 MB/second throughput, which is the same for a P30 ZRS Premium SSD disk. Disk latency for ZRS is higher than that of LRS due to the cross-zonal copy of data.

Source: microsoft.com

Thursday, 29 July 2021

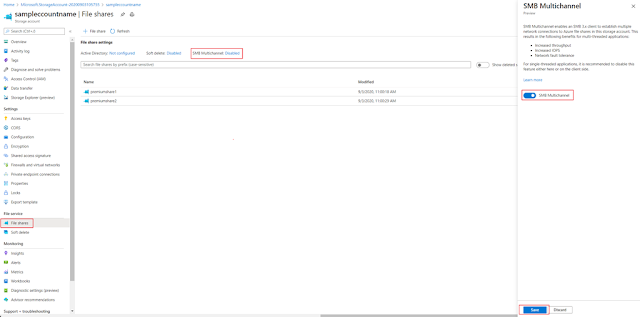

Boost your client performance with Azure Files SMB Multichannel

Lower your deployment cost, while improving client performance with Server Message Block (SMB) Multichannel on premium tier.

Today, we are announcing the preview of Azure Files SMB Multichannel on premium tier. SMB 3.0 introduced the SMB Multichannel technology in Windows Server 2012 and Windows 8 client. This feature allows SMB 3.x clients to establish multiple network connections to SMB 3.x servers for greater performance over multiple network adapters and over network adapters with Receive Side Scaling (RSS) enabled. With this preview release, Azure Files SMB clients can now take advantage of SMB Multichannel technology with premium file shares in the cloud.

Benefits

SMB Multichannel opens multiple connections over the optimum network path, which increases performance through parallel processing. The gain comes from bandwidth aggregation over multiple NICs, or from NIC support for Receive Side Scaling (RSS), which distributes I/O across multiple CPUs and balances load dynamically.

Benefits of Azure Files SMB Multichannel include:

Pricing and availability

Getting started

Sunday, 9 May 2021

Azure Availability Zones in the South Central US datacenter region add resiliency

As businesses move more workstreams to the cloud, business continuity and data protection have never been more critical—and perhaps their importance has never been more visible than during the challenges and unpredictability of 2020. To continue our commitment to supporting stability and resiliency in the cloud, Microsoft is announcing the general availability of Azure Availability Zones from our South Central US datacenter region.

Azure Availability Zones are unique physical locations within an Azure region that each consist of one or more datacenters equipped with independent power, cooling, and networking. Availability Zones provide protection against datacenter failures and unplanned downtime. These are further supported by an industry-leading service-level agreement (SLA) of 99.99 percent virtual machine uptime.
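
To make an uptime percentage concrete, it helps to convert it into the downtime it still permits. The helper below is a minimal sketch; the function name and the 365-day year are our own choices, not part of any SLA text.

```python
def allowed_downtime_minutes(sla_percent: float, period_days: float = 365.0) -> float:
    """Minutes of downtime permitted by an uptime SLA over a period."""
    unavailable_fraction = 1.0 - sla_percent / 100.0
    return unavailable_fraction * period_days * 24 * 60


if __name__ == "__main__":
    for sla in (99.9, 99.99):
        minutes = allowed_downtime_minutes(sla)
        print(f"{sla}% uptime allows about {minutes:.1f} minutes of downtime per year")
```

At 99.99 percent this comes to about 52.6 minutes per year, compared with about 8.8 hours at 99.9 percent.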

For many companies, especially those in regulated industries who are increasingly moving their critical applications to the cloud, Availability Zones in South Central US provide the option for customers to choose the resiliency and business continuity options that support their business. Availability Zones provide our customers with added options for high availability with added fault tolerance against datacenter failures while supporting data protection and backup. Customers can choose to store data in the same datacenter, across zonal datacenters in the same region, or across geographically separated regions. Finally, data is protected against accidental customer deletion using role-based access control and immutable storage applied through forced retention policies.

Availability Zones in South Central US build upon a broader, rich set of resiliency features available with Azure that support customer resiliency. Key among these are:

◉ Azure Storage, SQL Database, and Cosmos DB all provide built-in data replication, both within a region and across regions.

◉ Azure managed disks are automatically placed in different storage scale units to limit the effects of hardware failures.

◉ Virtual machines (VMs) in an availability set are spread across several fault domains. A fault domain is a group of VMs that share a common power source and network switch. Spreading VMs across fault domains limits the impact of physical hardware failures, network outages, or power interruptions.

◉ Azure Site Recovery supports customers in disaster recovery scenarios across regions and zones.
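
The fault-domain idea in the list above can be illustrated with a toy placement model. Round-robin assignment is an assumption made for this sketch; it is not a description of Azure's actual placement logic, and all names are hypothetical.

```python
from collections import defaultdict


def spread_across_fault_domains(vm_names, fault_domain_count):
    """Assign VMs to fault domains round-robin (toy model)."""
    placement = defaultdict(list)
    for index, vm in enumerate(vm_names):
        placement[index % fault_domain_count].append(vm)
    return dict(placement)


def survivors_after_failure(placement, failed_domain):
    """VMs still running when every VM in one fault domain is lost."""
    return [
        vm
        for domain, vms in placement.items()
        if domain != failed_domain
        for vm in vms
    ]


if __name__ == "__main__":
    placement = spread_across_fault_domains([f"vm{i}" for i in range(6)], 3)
    print(placement)
    print(survivors_after_failure(placement, failed_domain=0))
```

With six VMs over three fault domains, losing any single domain takes out two VMs and leaves four running.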

The creation of Availability Zones in South Central US benefits our customers in many ways, including increased service availability guarantees, which reduce the chance of downtime or data loss should there be any failure. These zones also help ensure data storage protection for peace of mind. Data protection is our priority, even over recovery time. We can endure a longer outage and data can still be protected because of zone availability. Data is also replicated in triplicate. As we kick off a new year, resiliency and stability for our customers are still crucial. We’re optimistic and excited to see the impact these Availability Zones will have on customers, their digital transformations, and ultimately their success.

Source: microsoft.com

Saturday, 20 March 2021

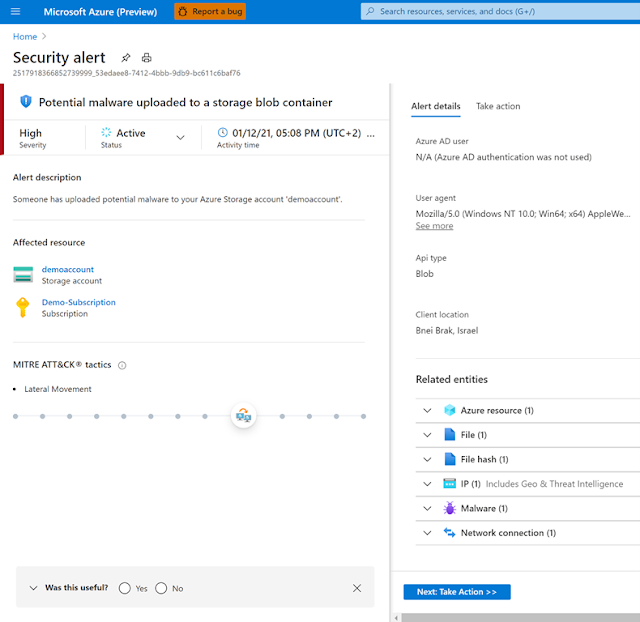

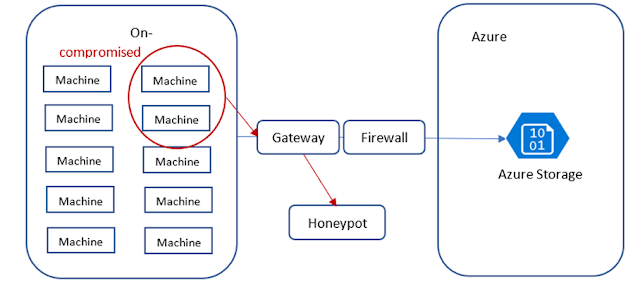

Azure Defender for Storage powered by Microsoft threat intelligence

With the reality of working from home, more people and devices are now accessing corporate data across home networks. This raises the risks of cyber-attacks and elevates the importance of proper data protection. One of the resources most targeted by attackers is data storage, which can hold critical business data and sensitive information.

To help Azure customers better protect their storage environment, Azure Security Center provides Azure Defender for Storage, which alerts customers upon unusual and potentially harmful attempts to access or exploit their storage accounts.

What’s new in Azure Defender for Storage

As with all Microsoft security products, customers of Azure Defender for Storage benefit from Microsoft threat intelligence to detect and hunt for attacks. Microsoft amasses billions of signals for a holistic view of the security ecosystem. These shared signals and threat intelligence enrich Microsoft products and allow them to offer context, relevance, and priority management to help security teams act more efficiently.

Based on these capabilities, Azure Defender for Storage now also alerts customers when it detects malicious activities such as:

◉ Upload of potential malware (using hash reputation analysis).

◉ Phishing campaigns hosted on a storage account.

◉ Access from suspicious IP addresses, such as TOR exit nodes.
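
As a rough sketch of the last item, a suspicious-source check can be modeled as a lookup of the client address against a list of flagged networks. The list below uses reserved documentation ranges as stand-ins for a real threat-intelligence feed; how Azure Defender for Storage performs this check is not described here, so treat every name as hypothetical.

```python
import ipaddress

# Hypothetical blocklist. These are reserved documentation ranges (RFC 5737),
# standing in for a real feed such as a list of TOR exit nodes.
SUSPICIOUS_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.7/32"),
]


def is_suspicious(client_ip: str) -> bool:
    """Return True if the client address falls inside any listed network."""
    address = ipaddress.ip_address(client_ip)
    return any(address in network for network in SUSPICIOUS_NETWORKS)


if __name__ == "__main__":
    for ip in ("203.0.113.42", "192.0.2.1"):
        print(ip, "suspicious" if is_suspicious(ip) else "ok")
```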

In addition, leveraging the advanced capabilities of Microsoft threat intelligence helps us enrich our current Azure Defender for Storage alerts and future detections.

To benefit from Azure Defender for Storage, you can easily configure it on your subscription or storage accounts and start your 30-day trial today.

Cyberattacks on cloud data stores are on the rise

Nowadays, more and more organizations place their critical business data assets in the cloud using PaaS data services. Azure Storage is among the most widely used of these services. The amount of data obtained and analyzed by organizations continues to grow at an increasing rate, and data is becoming increasingly vital in guiding critical business decisions.

With this rise in usage, the risks of cyberattacks and data breaches are also growing, especially for business-critical data and sensitive information. Cyber incidents cause organizations to lose money, data, productivity, and consumer trust. The average total cost of a data breach is $3.86 million. On average, it takes 280 days to identify and contain a breach, and 17 percent of cyberattacks involve malware.

It’s clear that organizations worldwide need protection, detection, and rapid-fire response mechanisms to these threats. Yet, on average, more than 99 days pass between infiltration and detection, which is like leaving the front door wide open for more than three months. Therefore, proper threat intelligence and detection are needed.

Azure Defender for Storage improved threat detections

1. Detecting upload of malware and malicious content

Storage accounts are widely used for data distribution, so they may become infected with malware and spread it to additional users and resources. This may make them vulnerable to attacks and exploits, putting sensitive organizational data at risk.

Malware reaching storage accounts was a top concern raised by our customers, and to help address it, Azure Defender for Storage now utilizes advanced hash reputation analysis to detect malware uploaded to storage accounts in Azure. This can help detect ransomware, viruses, spyware, and other malware uploaded to your accounts.

A security alert is automatically triggered upon detection of potential malware uploaded to an Azure Storage account.
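
In general terms, hash reputation analysis compares a digest of the uploaded content against a set of digests already known to be malicious. The sketch below shows that idea only. The choice of SHA-256, the in-memory set, and the sample payload are assumptions made for illustration; the actual algorithm and reputation feed used by Azure Defender for Storage are not described in this post.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of a blob's content."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical reputation feed: digests of content already known to be bad.
KNOWN_BAD_HASHES = {sha256_hex(b"pretend-malware-sample")}


def is_known_malware(blob: bytes, bad_hashes: set = KNOWN_BAD_HASHES) -> bool:
    """Return True if the blob's digest appears in the reputation set."""
    return sha256_hex(blob) in bad_hashes


if __name__ == "__main__":
    for payload in (b"pretend-malware-sample", b"quarterly-report"):
        verdict = "ALERT" if is_known_malware(payload) else "clean"
        print(payload.decode(), "->", verdict)
```

Because only digests are compared, a check of this kind can flag previously catalogued files but says nothing about malware it has never seen.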

“Azure Blob Storage is a very powerful and cost-effective storage solution, allowing for fast and cheap storage and retrieval of large amounts of files. We use it on all our systems and often have millions of documents in Blob Storage for a given system. With PaaS solutions, it can, however, be a challenge to check files for malware before they are stored in Blob Storage. It is incredibly easy to enable the new “Malware Reputation Screening” for storage accounts at scale, it offers us a built-in basic level of protection against malware, which is often sufficient, thus saving us the overhead to set up and manage complex malware scanning solutions.”—Frans Lytzen, CTO at NewOrbit