Logs are critical for many scenarios in the modern digital world. They are used in tandem with metrics for observability, monitoring, troubleshooting, usage and service level analytics, auditing, security, and much more. Any plan to build an application or IT environment should include a plan for logs.

Logs architecture

There are two main paradigms for logs:

◈ Centralized: All logs are kept in a central repository. In this scenario, it is easy to search across resources and cross-correlate logs but, since these repositories get big and include logs from all kind of sources, it's hard to maintain access control on them. Some organizations completely avoid centralized logging for that reason, while other organizations that use centralized logging restrict access to very few admins, which prevents most of their users from getting value out of the logs.

◈ Siloed: Logs are either stored within a resource or stored centrally but segregated per resource. In these instances, the repository can be kept secure, and access control is coherent with the resource access, but it's hard or impossible to cross-correlate logs. Users who need a broad view of many resources cannot generate insights. In modern applications, problems and insights span across resources, making the siloed paradigm highly limited in its value.

To accommodate the conflicting needs of security and log correlations many organizations have implemented both paradigms in parallel, resulting in a complex, expensive, and hard-to-maintain environment with gaps in logs coverage. This leads to lower usage of log data in the organization and results in decision-making that is not based on data.

New access control options for Azure Monitor Logs

We have recently announced a new set of Azure Monitor Logs capabilities that allow customers to benefit from the advantages of both paradigms. Customers can now have their logs centralized while seamlessly integrated into Azure and its role based access control (RBAC) mechanisms. We call this resource-centric logging. It will be added to the existing Azure Monitor Logs experience automatically while maintaining the existing experiences and APIs. Delivering a new logs model is a journey, but you can start using this new experience today. We plan to enhance and complete alignment of all Azure Monitor's components over the next few months.

The basic idea behind resource-centric logs is that every log record emitted by an Azure resource is automatically associated with this resource. Logs are sent to a central workspace container that respects scoping and RBAC based on the resources. Users will have two options for accessing the data:

1. Workspace-centric: Query all data in a specific workspace–Azure Monitor Logs container. Workspace access permissions apply. This mode will be used by centralized teams that need access to logs regardless of the resource permissions. It can also be used for components that don't support resource-centric or off-Azure resources, though a new option for them will be available soon.

2. Resource-centric: Query all logs related to a resource. Resource access permissions apply. Logs will be served from all workspaces that contain data for that resource without the need to specify them. If workspace access control allows it, there is no need to grant the users access to the workspace. This mode works for a specific resource, all resources in a specific resource group, or all resources in a specific subscription. Most application teams and DevOps will be using this mode to consume their logs.

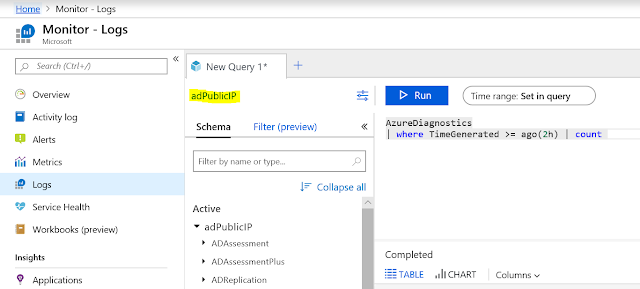

Azure Monitor experience automatically decides on the right mode depending on the scope the user chooses. If the user selects a workspace, queries will be sent in workspace-centric mode. If the user selects a resource, resource group, or subscription, resource-centric is used. The scope is always presented in the top left section of the Log Analytics screen:

You can also query all logs of resources in a specific resource group using the resource group screen:

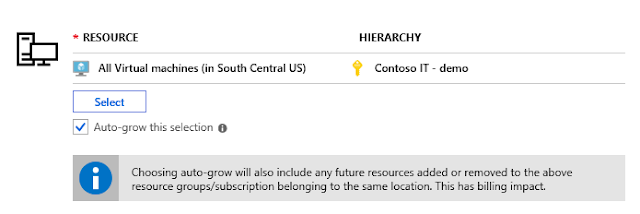

Soon, Azure Monitor will also be able to scope queries for an entire subscription.

To make logs more prevalent and easier to use, they are now integrated into many Azure resource experiences. When log search is opened from a resource menu, the search is automatically scoped to that resource and resource-centric queries are used. This means that if users have access to a resource, they'll be able to access their logs. Workspace owners can block or enable such access using the workspace access control mode.

Another capability we're adding is the ability to set permissions per table that store the logs. By default, if users are granted access to workspaces or resources, they can read all their log types. The new table RBAC allows admins to use Azure custom roles to define limited access for users, so they're only able to access some of the tables, or admins can block users from accessing specific tables. You can use this, for example, if you want the networking team to be able to access only the networking related table in a workspace or a subscription.

As result of these changes, organizations will have simpler models with fewer workspaces and more secure access control. Workspaces now assume the role of a manageable container, allowing administrators to better govern their environments. Users are now empowered to view logs in their natural Azure context, helping them to leverage the power of logs in their day-to-day work.

The improved Azure Monitor Logs access control lets you now enjoy both worlds at once without compromise on usability and security. Central teams can have full access to all logs while DevOps teams can access logs only for their resources. This comes on top of the powerful log analytics, integration and scalability capabilities that are used by tens of thousands of customers.