Tuesday, 10 January 2023

Microsoft named a Leader in 2022 Gartner® Magic Quadrant™ for Insight Engines

Tuesday, 27 December 2022

Microsoft Azure CLX: A personalized program to learn Azure

What is the CLX program?

What courses will I take?

The courses you take are up to you. The self-paced program is tailored to your skill set, and you can choose from six tracks: Microsoft Azure Fundamentals, Microsoft Azure AI Fundamentals, Microsoft Azure Data Fundamentals, Microsoft Azure Administrator, Administering Windows Server Hybrid Core Infrastructure, and Windows Server Hybrid Advanced Series, with more on the way. Learn more about these tracks below.

| Course | Learner Personas | Course Content |
|---|---|---|
| Microsoft Azure Fundamentals | Administrators, Business Users, Developers, Students, Technology Managers | This course strengthens your knowledge of cloud concepts and Azure services, workloads, security, privacy, pricing, and support. It’s designed for learners with an understanding of general technology concepts, such as networking, computing, and storage. |
| Microsoft Azure AI Fundamentals | AI Engineers, Developers, Data Scientists | This course, designed for both technical and non-technical professionals, bolsters your understanding of typical machine learning and artificial intelligence workloads and how to implement them for Azure. |
| Microsoft Azure Data Fundamentals | Database Administrators, Data Analysts, Data Engineers, Developers | The Data Fundamentals course instructs you on Azure core data concepts, Azure SQL, Azure Cosmos DB, and modern data warehouse analytics. It’s designed for learners with a basic knowledge of core data concepts and how they’re implemented in Azure. |
| Microsoft Azure Administrator | Azure Cloud Administrators, VDI Administrators, IT Operations Analysts | In Azure Administrator, you’ll learn to implement cloud infrastructure, develop applications, and perform networking, security, and database tasks. It’s designed for learners with a robust understanding of operating systems, networking, servers, and virtualization. |
| Administering Windows Server Hybrid Core Infrastructure | Systems Administrators, Infrastructure Deployment Engineers, Senior System Administrators, Senior Site Reliability Engineers | In this course, you’ll learn to configure on-premises Windows Servers, hybrid, and Infrastructure as a Service (IaaS) platform workloads. It’s geared toward those with the knowledge to configure, maintain, and deploy on-premises Windows Servers, hybrid, and IaaS platform workloads. |
| Windows Server Hybrid Advanced Series | System Administrators, Infrastructure Deployment Engineers, Associate Database Administrators | This advanced series, which is designed for those with deep administration and deployment knowledge, strengthens your ability to configure and manage Windows Server on-premises, hybrid, and IaaS platform workloads. |

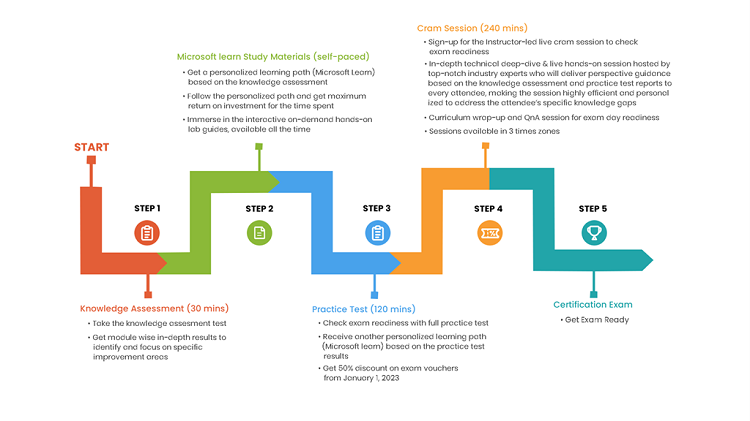

How do I get certified?

Saturday, 24 December 2022

Improve speech-to-text accuracy with Azure Custom Speech

Custom Speech data types and use cases

Research milestones

Customer inspiration

Speech Services and Responsible AI

Sunday, 13 December 2020

Harness analytical and predictive power with Azure Synapse Analytics

Since its preview announcement, we’ve witnessed incredible excitement and adoption of Azure Synapse from our customers and partners. We want to sincerely thank everyone who provided feedback and is now helping us bring the power of limitless analytics to all.

Unified experience

Azure Synapse brings together data integration, enterprise data warehousing, and big data analytics—at cloud scale. The unification of these workloads enables organizations to massively reduce their end-to-end development time and accelerate time to insight. It now also provides both no-code and code-first experiences for critical tasks such as data ingestion, preparation, and transformation.

Unified analytics

Unified data teams

Saturday, 4 April 2020

Microsoft powers transformation at NVIDIA’s GTC Digital Conference

This year during GTC Digital, we’re spotlighting some of the most transformational applications powered by NVIDIA GPU acceleration that highlight our commitment to edge, on-prem, and cloud computing. Registration is free, so sign up to learn how Microsoft is powering transformation.

Visualization and GPU workstations

Azure enables a wide range of visualization workloads, which are critical for desktop virtualization as well as professional graphics such as computer-aided design, content creation, and interactive rendering. Visualization workloads on Azure are powered by NVIDIA’s world-class GPUs and Quadro technology, the world’s preeminent visual computing platform. With access to graphics workstations on Azure cloud, artists, designers, and technical professionals can work remotely, from anywhere, and from any connected device.

Artificial intelligence

We’re sharing the release of the updated execution provider in ONNX Runtime with integration for NVIDIA TensorRT 7. With this update, ONNX Runtime can execute Open Neural Network Exchange (ONNX) models on NVIDIA GPUs on Azure cloud and at the edge using Azure Stack Edge, taking advantage of new features in TensorRT 7 such as dynamic shape, mixed precision optimizations, and INT8 execution.

Dynamic shape support enables users to run variable batch size, which is used by ONNX Runtime to process recurrent neural network (RNN) and Bidirectional Encoder Representations from Transformers (BERT) models. Mixed precision and INT8 execution are used to speed up execution on the GPU, which enables ONNX Runtime to better balance the performance across CPU and GPU. Originally released in March 2019, TensorRT with ONNX Runtime delivers better inferencing performance on the same hardware when compared to generic GPU acceleration.
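As a rough illustration of how an application might opt into the TensorRT execution provider, the sketch below builds a provider preference list and falls back to generic CUDA or CPU execution when TensorRT is not present. The provider names and `get_available_providers()` come from ONNX Runtime; the helpers `pick_providers` and `available_providers` and the path `model.onnx` are hypothetical names used only for this example, and the snippet treats the `onnxruntime` package as optional.

```python
from typing import List, Sequence

# Preference order: TensorRT first, then generic CUDA acceleration, then CPU.
PREFERRED = (
    "TensorrtExecutionProvider",
    "CUDAExecutionProvider",
    "CPUExecutionProvider",
)


def pick_providers(available: Sequence[str]) -> List[str]:
    """Return the preferred providers that are actually available, in order."""
    chosen = [name for name in PREFERRED if name in available]
    # Always keep a CPU fallback so session creation has something to run on.
    if "CPUExecutionProvider" not in chosen:
        chosen.append("CPUExecutionProvider")
    return chosen


def available_providers() -> List[str]:
    """Ask ONNX Runtime what it was built with; assume CPU only if it is absent."""
    try:
        import onnxruntime as ort
    except ImportError:
        return ["CPUExecutionProvider"]
    return list(ort.get_available_providers())


if __name__ == "__main__":
    providers = pick_providers(available_providers())
    print(providers)
    # With onnxruntime installed, a session could then be created like this
    # ("model.onnx" is a placeholder path, not a file from this article):
    #   import onnxruntime as ort
    #   session = ort.InferenceSession("model.onnx", providers=providers)
```

Passing an ordered provider list lets the same code run on a TensorRT-enabled GPU machine and on a CPU-only development box without changes.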

Additionally, the Azure Machine Learning service now supports RAPIDS, a high-performance GPU execution accelerator for data science frameworks built on the NVIDIA CUDA platform. Azure developers can use RAPIDS in the same way they currently use other machine learning frameworks, and in conjunction with Pandas, Scikit-learn, PyTorch, and TensorFlow. These two developments represent major milestones towards a truly open and interoperable ecosystem for AI. We’re working to ensure these platform additions will simplify and enrich those developer experiences.

Edge

Microsoft provides various solutions in the Intelligent Edge portfolio so that customers can run machine learning not only in the cloud but also at the edge. The solutions include Azure Stack Hub, Azure Stack Edge, and IoT Edge.

Whether you are capturing sensor data and inferencing at the edge, or performing end-to-end processing with model training in Azure and using the trained models at the edge for enhanced inferencing operations, Microsoft can support your needs however and wherever you need.

Supercomputing scale

Time-to-decision is incredibly important with a global economy that is constantly on the move. With the accelerated pace of change, companies are looking for new ways to gather vast amounts of data, train models, and perform real-time inferencing in the cloud and at the edge. The Azure HPC portfolio consists of purpose-built computing, networking, storage, and application services to help you seamlessly connect your data and processing needs with infrastructure options optimized for various workload characteristics.

Azure Stack Hub announced preview

Microsoft, in collaboration with NVIDIA, is announcing that Azure Stack Hub with Azure NC-Series Virtual Machine (VM) support is now in preview. Azure NC-Series VMs are GPU-enabled Azure Virtual Machines available on the edge. GPU support in Azure Stack Hub unlocks a variety of new solution opportunities. With our Azure Stack Hub hardware partners, customers can choose the appropriate GPU for their workloads to enable Artificial Intelligence, training, inference, and visualization scenarios.

Azure Stack Hub brings together the full capabilities of the cloud to effectively deploy and manage workloads that otherwise are not possible to bring into a single solution. We are offering two NVIDIA GPU models during the preview period: the NVIDIA V100 Tensor Core GPU and the NVIDIA T4 Tensor Core GPU. These physical GPUs align with the following Azure N-Series VM types:

◉ NCv3 (NVIDIA V100 Tensor Core GPU): These enable learning, inference and visualization scenarios.

◉ TBD (NVIDIA T4 Tensor Core GPU): This new VM size (available only on Azure Stack Hub) enables light learning, inference, and visualization scenarios.

Hewlett Packard Enterprise is supporting the Microsoft GPU preview program as part of its HPE ProLiant for Microsoft Azure Stack Hub solution. “The HPE ProLiant for Microsoft Azure Stack Hub solution with the HPE ProLiant DL380 server nodes are GPU-enabled to support the maximum CPU, RAM, and all-flash storage configurations for GPU workloads,” said Mark Evans, WW product manager, HPE ProLiant for Microsoft Azure Stack Hub, at HPE. “We look forward to this collaboration that will help customers explore new workload options enabled by GPU capabilities.”

As the leading cloud infrastructure provider, Dell Technologies helps organizations remove cloud complexity and extend a consistent operating model across clouds. Working closely with Microsoft, the Dell EMC Integrated System for Azure Stack Hub will support additional GPU configurations, which include NVIDIA V100 Tensor Core GPUs, in a 2U form factor. This will provide customers increased performance density and workload flexibility for the growing predictive analytics and AI/ML markets. These new configurations also come with automated lifecycle management capabilities and exceptional support.

Azure Stack Edge preview

We also announced the expansion of our Microsoft Azure Stack Edge preview with the NVIDIA T4 Tensor Core GPU. Azure Stack Edge is a cloud-managed appliance that provides processing for fast local analysis and insights into the data. With the addition of an NVIDIA GPU, you’re able to build in the cloud and then run at the edge.

Tuesday, 31 March 2020

Extending the power of Azure AI to Microsoft 365 users

What is Azure AI?

Azure AI is a set of AI services built on Microsoft’s breakthrough innovation from decades of world-class research in vision, speech, language processing, and custom machine learning. What is particularly exciting is that Azure AI provides our customers with access to the same proven AI capabilities that power Microsoft 365, Xbox, HoloLens, and Bing. In fact, there are more than 20,000 active paying customers—and more than 85 percent of the Fortune 100 companies have used Azure AI in the last 12 months.

Azure AI helps organizations:

◉ Develop machine learning models that can help with scenarios such as demand forecasting, recommendations, or fraud detection using Azure Machine Learning.

◉ Incorporate vision, speech, and language understanding capabilities into AI applications and bots, with Azure Cognitive Services and Azure Bot Service.

◉ Build knowledge-mining solutions to make better use of untapped information in their content and documents using Azure Search.

Microsoft 365 provides innovative product experiences with Azure AI

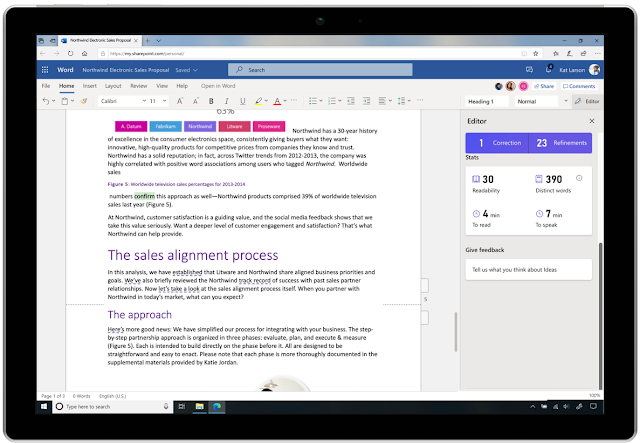

The announcement of Microsoft Editor is one example of innovation. Editor, your personal intelligent writing assistant, is available across Word, Outlook.com, and browser extensions for Edge and Chrome. Editor is an AI-powered service available in more than 20 languages that has traditionally helped writers with spell check and grammar recommendations. Powered by AI models built with Azure Machine Learning, Editor can now recommend clear and concise phrasing, suggest more formal language, and provide citation recommendations.

Thursday, 30 January 2020

Six things to consider when using Video Indexer at scale

In general, scaling shouldn’t be difficult, but when you first face such a process you might not be sure of the best way to approach it. Questions like “Are there any technological constraints I need to take into account?”, “Is there a smart and efficient way of doing it?”, and “Can I prevent spending excess money in the process?” can cross your mind. So, here are six best practices for using Video Indexer at scale.

1. When uploading videos, prefer URL over sending the file as a byte array

Video Indexer does give you the choice to upload videos from a URL or directly by sending the file as a byte array, but remember that the latter comes with some constraints.

First, it has file size limitations. A file sent as a byte array is limited to 2 GB, compared with the 30 GB upload limit when using a URL.

Second, and more importantly for scaling, sending files as multi-part content makes you highly dependent on your network. Service reliability, connectivity, upload speed, and lost packets somewhere on the World Wide Web are just some of the issues that can affect your performance and hence your ability to scale.
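The choice between the two upload paths can be sketched in a few lines. The example below only encodes the size limits quoted above and composes a request URL for an upload by URL; it does not send anything. The endpoint shape and the query parameter names (`accessToken`, `name`, `videoUrl`) are assumptions based on the Video Indexer Upload Video API and should be checked against the current API reference; the function names and sample values are hypothetical.

```python
from urllib.parse import urlencode

GB = 1024 ** 3
BYTE_ARRAY_LIMIT = 2 * GB  # limit quoted above for byte-array uploads
URL_LIMIT = 30 * GB        # limit quoted above for uploads by URL


def choose_upload_method(size_bytes: int, has_public_url: bool) -> str:
    """Prefer upload by URL; fall back to a byte array only for small files."""
    if has_public_url and size_bytes <= URL_LIMIT:
        return "url"
    if size_bytes <= BYTE_ARRAY_LIMIT:
        return "byte_array"
    raise ValueError("file is too large for the available upload method")


def build_upload_request_url(location: str, account_id: str,
                             access_token: str, name: str,
                             video_url: str) -> str:
    """Compose the request URL for an upload by URL (assumed endpoint shape)."""
    query = urlencode({
        "accessToken": access_token,
        "name": name,
        "videoUrl": video_url,  # urlencode percent-encodes the nested URL
    })
    return (f"https://api.videoindexer.ai/{location}"
            f"/Accounts/{account_id}/Videos?{query}")


if __name__ == "__main__":
    print(choose_upload_method(5 * GB, has_public_url=True))
    print(build_upload_request_url(
        "trial", "my-account-id", "my-token", "clip",
        "https://example.com/clip.mp4"))
```

Because the service fetches the file itself in the URL path, the client's own upload bandwidth and connection stability stop being a factor.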

2. Increase media reserved units if needed

3. Respect throttling

4. Use callback URL

5. Use the right indexing parameters for you

6. Index in optimal resolution, not highest resolution

Saturday, 12 October 2019

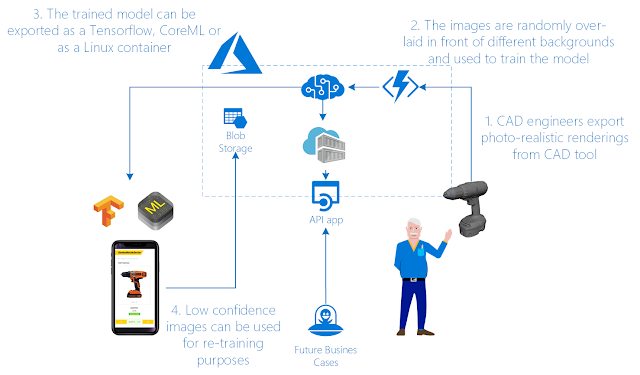

Leveraging Cognitive Services to simplify inventory tracking

The problem

How they hacked it

Benchmarking the iterations

The result

What’s next

Thursday, 26 September 2019

Azure Media Services' new AI-powered innovation

Animated character recognition, multilingual speech transcription and more now available

At Microsoft, our mission is to empower every person and organization on the planet to achieve more. The media industry exemplifies this mission. We live in an age where more content is being created and consumed in more ways and on more devices than ever. At IBC 2019, we’re delighted to share the latest innovations we’ve been working on and how they can help transform your media workflows.

Video Indexer adds support for animation and multilingual content

We made our award-winning Azure Media Services Video Indexer generally available at IBC last year, and this year it’s getting even better. Video Indexer automatically extracts insights and metadata such as spoken words, faces, emotions, topics, and brands from media files, without you needing to be a machine learning expert. Our latest announcements include previews for two highly requested and differentiated capabilities for animated character recognition and multilingual speech transcription, as well as several additions to existing models available today in Video Indexer.

Animated character recognition

Animated content or cartoons are one of the most popular content types, but standard AI vision models built for human faces do not work well with them, especially if the content has characters without human features. In this new preview solution, Video Indexer joins forces with Microsoft’s Azure Custom Vision service to provide a new set of models that automatically detect and group animated characters and allow customers to then tag and recognize them easily via integrated custom vision models. These models are integrated into a single pipeline, which allows anyone to use the service without any previous machine learning skills. The results are available through the no-code Video Indexer portal or the REST API for easy integration into your own applications.

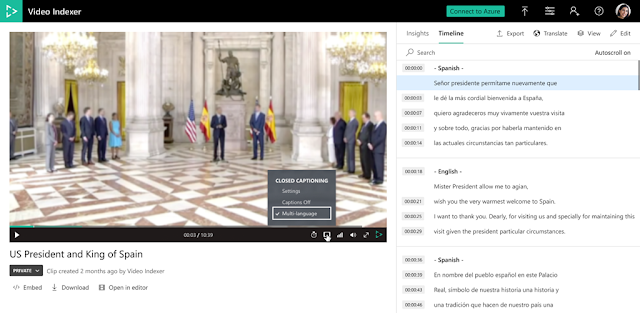

Multilingual identification and transcription

Additional updated and improved models

Extraction of people and locations entities

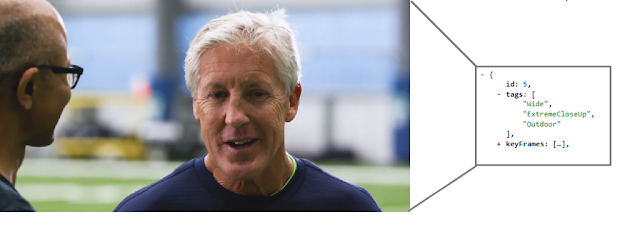

Editorial shot detection model

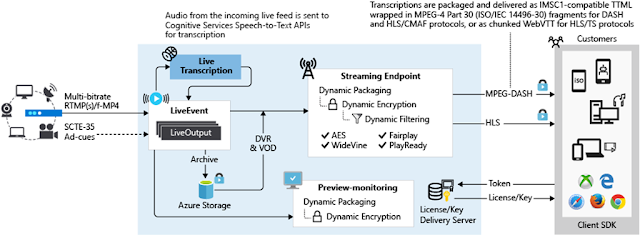

New live streaming functionality

Live transcription supercharges your live events with AI

Live linear encoding for 24/7 over-the-top (OTT) channels

New packaging features

Microsoft Azure partners demonstrate end-to-end solutions

Saturday, 20 July 2019

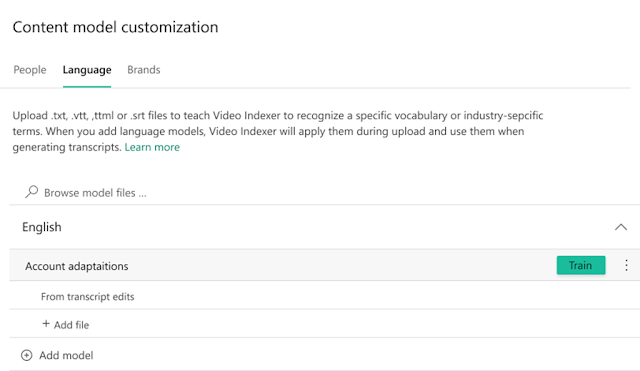

New ways to train custom language models – effortlessly!

Over the past few months, we have worked on a series of enhancements to make this customization process even more effective and easy to accomplish. Enhancements include automatically capturing any transcript edits done manually or via API as well as allowing customers to add closed caption files to further train their custom language models.

The idea behind these additions is to create a feedback loop where organizations begin with a base out-of-the-box language model and gradually improve its accuracy through manual edits and other resources, resulting in a model that is fine-tuned to their needs with minimal effort.

Accounts’ custom language models and all the enhancements this blog shares are private and are not shared between accounts.

In the following sections I will drill down into the different ways that this can be done.

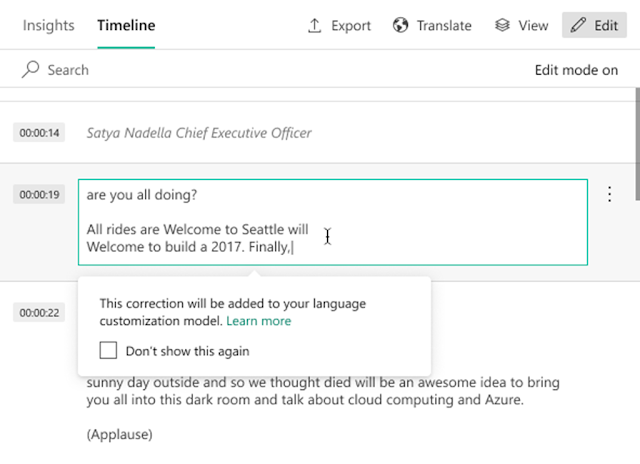

Improving your custom language model using transcript updates

Once a video is indexed in Video Indexer (VI), customers can use the Video Indexer portal to introduce manual edits and fixes to the automatic transcription of the video. This can be done by clicking the Edit button at the top right corner of the Timeline pane of a video to move to edit mode, and then simply updating the text, as seen in the image below.

| Type | Before | After |
|---|---|---|
| VTT | NOTE Confidence: 0.891635 00:00:02.620 --> 00:00:05.080 but you don't like meetings before 10 AM. | but you don’t like meetings before 10 AM. |
| SRT | 2 00:00:02,620 --> 00:00:05,080 but you don't like meetings before 10 AM. | but you don’t like meetings before 10 AM. |
| TTML | <!-- Confidence: 0.891635 --> <p begin="00:00:02.620" end="00:00:05.080">but you don't like meetings before 10 AM.</p> | but you don’t like meetings before 10 AM. |
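The Before and After columns above amount to stripping timing and metadata from a caption cue so that only the spoken text remains. The sketch below is one minimal way to reproduce that transformation for the three formats shown; it is not Video Indexer's actual implementation. The function name `caption_to_text` is hypothetical, and the parsing is deliberately simplified: single-line `NOTE` comments only, and any cue line made up solely of digits is treated as an SRT counter.

```python
import re

# Timing lines: VTT uses "00:00:02.620 --> ...", SRT uses "00:00:02,620 --> ...".
TIMING = re.compile(
    r"^\d{2}:\d{2}:\d{2}[.,]\d{3}\s+-->\s+\d{2}:\d{2}:\d{2}[.,]\d{3}")
# TTML/XML tags and comments, e.g. <p begin="..."> or <!-- Confidence: ... -->.
TAG = re.compile(r"<[^>]+>")


def caption_to_text(caption: str) -> str:
    """Reduce a VTT, SRT, or TTML snippet to its plain spoken text."""
    kept = []
    for raw in caption.splitlines():
        line = TAG.sub("", raw).strip()
        if not line:
            continue  # blank line, or a line that held only markup
        if line == "WEBVTT" or line.startswith("NOTE"):
            continue  # VTT header and comment lines
        if line.isdigit():
            continue  # SRT cue counter
        if TIMING.match(line):
            continue  # cue timing line
        kept.append(line)
    return " ".join(kept)


if __name__ == "__main__":
    vtt = ("NOTE Confidence: 0.891635\n"
           "00:00:02.620 --> 00:00:05.080\n"
           "but you don't like meetings before 10 AM.")
    print(caption_to_text(vtt))
```

Text prepared this way contains only the words themselves, which is the form shown in the After column.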