As companies look to do more with data, take full advantage of the cloud, and vault into the age of AI, they need services that process data reliably and efficiently at scale. Today, we’re excited to announce the upcoming public preview of HDInsight on Azure Kubernetes Service (AKS), our cloud-native, open-source big data service, completely rearchitected on Azure Kubernetes Service infrastructure, with two new workloads and numerous improvements across the stack.

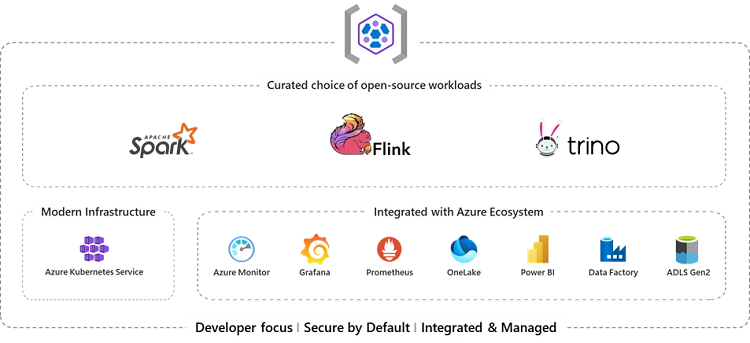

HDInsight on AKS includes Apache Spark, Apache Flink, and Trino workloads running on Azure Kubernetes Service infrastructure. It integrates deeply with popular Azure analytics services such as Power BI, Azure Data Factory, and Azure Monitor, and uses Azure managed services for Prometheus and Grafana for monitoring. HDInsight on AKS is an end-to-end, open-source analytics solution that is easy to deploy and cost-effective to operate.

HDInsight on AKS helps customers leverage open-source software for their analytics needs by:

- Providing a curated set of open-source analytics workloads: Apache Spark, Apache Flink, and Trino. These are best-in-class open-source engines for data engineering, machine learning, streaming, and querying.

- Delivering managed infrastructure, security, and monitoring so that teams can spend their time building innovative applications without needing to worry about the other components of their stack. Teams can be confident that HDInsight helps keep their data safe.

- Offering the flexibility teams need to extend capabilities, both by tapping into today’s rich open-source ecosystem of reusable libraries and by customizing applications through script actions.

Customers who are deeply invested in open-source analytics can use HDInsight on AKS to reduce costs by setting up fully functional, end-to-end analytics systems in minutes, using ready-made integrations, built-in security, and reliable infrastructure. Our investments in performance improvements and in features like autoscale let customers run their analytics workloads at optimal cost. HDInsight on AKS has a simple, consistent pricing model: a per-vCore, per-hour service charge that is the same regardless of resource size or region, plus the cost of the provisioned resources.
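
To make the pricing arithmetic concrete, here is a minimal sketch of how such a bill could be estimated: total vCore-hours multiplied by one flat service rate, plus the separately billed cost of provisioned resources. The rate and resource cost used below are hypothetical placeholders, not published prices, and the names `UsageInterval` and `estimate_cost` are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class UsageInterval:
    """A period during which a cluster ran with a fixed number of vCores."""
    vcores: int
    hours: float


def estimate_cost(intervals, service_rate_per_vcore_hour, provisioned_resources_cost):
    """Estimate total cost as a flat per-vCore-hour service charge plus the
    cost of the provisioned resources (both inputs are caller-supplied).

    The service rate is a single number: it does not vary with cluster
    size or region.
    """
    vcore_hours = sum(i.vcores * i.hours for i in intervals)
    service_charge = vcore_hours * service_rate_per_vcore_hour
    return service_charge + provisioned_resources_cost


# Hypothetical numbers for illustration only; these are not real prices.
usage = [UsageInterval(vcores=16, hours=10), UsageInterval(vcores=32, hours=2)]
total = estimate_cost(usage, service_rate_per_vcore_hour=0.25,
                      provisioned_resources_cost=100.0)
print(total)  # 224 vCore-hours * 0.25 + 100.0 = 156.0
```

Because the rate does not depend on cluster size or region, scaling a cluster up or down changes only the vCore-hours term, which is what makes costs easy to forecast.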

Developers love HDInsight for the flexibility it offers to extend the base capabilities of open-source workloads through script actions and library management. HDInsight on AKS has an intuitive portal experience for managing libraries and monitoring resources. Developers can choose a Software Development Kit (SDK), Azure Resource Manager (ARM) templates, or the portal, based on their preference.

Open, managed, and flexible

HDInsight on AKS covers the full gamut of enterprise analytics needs, spanning streaming, query processing, batch, and machine learning jobs, with unified visualization.

Curated open-source workloads

HDInsight on AKS includes workloads chosen for their usage in typical analytics scenarios, community adoption, stability, security, and ecosystem support. As a result, customers don’t have to sort through a myriad of offerings with overlapping capabilities and inconsistent interoperability.

Each workload in HDInsight on AKS is best-in-class for the analytics scenarios it supports:

- Apache Flink is the open-source distributed stream processing framework that powers stateful stream processing and enables real-time analytics scenarios.

- Trino is the federated query engine that is highly performant and scalable, addressing ad-hoc querying across a variety of data sources, both structured and unstructured.

- Apache Spark is the trusted choice of millions of developers for their data engineering and machine learning needs.

HDInsight on AKS offers these popular workloads with a common authentication model, shared metastore support, and prebuilt integrations that make it easy to deploy analytics applications.

Managed service reduces complexity

HDInsight on AKS is a managed service built on Azure Kubernetes Service infrastructure. With a managed service, customers aren’t burdened with managing infrastructure and other software components, including operating systems, AKS infrastructure, and open-source software. Enterprises benefit from ongoing security, functional, and performance enhancements without investing precious development hours.

Containerization enables seamless deployment, scaling, and management of key architectural components. The inherent resiliency of AKS allows pods to be automatically rescheduled on newly commissioned nodes in case of failures. This means jobs can run with minimal disruptions to Service Level Agreements (SLAs).

Customers combining multiple workloads in their data lakehouse have to deal with a variety of user experiences, which results in a steep learning curve. HDInsight on AKS provides a unified experience for managing the lakehouse: all workloads can be provisioned, managed, and monitored from a single pane of glass. Additionally, with managed services for Prometheus and Grafana, administrators can monitor cluster health, resource utilization, and performance metrics.

With the autoscale capabilities included in HDInsight on AKS, resources, and therefore cost, can be optimized based on usage. For jobs with predictable load patterns, teams can schedule autoscaling according to a predefined timetable. Graceful decommission lets teams define wait periods for running jobs to complete before resources are ramped down, balancing cost against user experience. Load-based autoscaling ramps resources up and down based on observed compute and memory usage.
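
The three autoscaling behaviors can be illustrated as simple decision functions. This is a hypothetical sketch, not the actual HDInsight on AKS autoscaler or its configuration API: the class and function names, the 80%/30% utilization thresholds, and the one-node scaling step are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from datetime import time


@dataclass
class ScheduleRule:
    """Schedule-based autoscale (hypothetical): hold a node count in a window."""
    start: time
    end: time
    target_nodes: int


@dataclass
class LoadPolicy:
    """Load-based autoscale bounds and thresholds (assumed values)."""
    min_nodes: int
    max_nodes: int
    scale_up_threshold: float = 0.80    # fraction of CPU or memory in use
    scale_down_threshold: float = 0.30


def scheduled_target(rules, now, default_nodes):
    """Return the node count of the first rule whose window contains `now`."""
    for rule in rules:
        if rule.start <= now < rule.end:
            return rule.target_nodes
    return default_nodes


def load_based_target(policy, current_nodes, cpu_util, mem_util):
    """Add or remove one node based on the more heavily used resource."""
    pressure = max(cpu_util, mem_util)
    if pressure > policy.scale_up_threshold:
        return min(current_nodes + 1, policy.max_nodes)
    if pressure < policy.scale_down_threshold:
        return max(current_nodes - 1, policy.min_nodes)
    return current_nodes


def can_release_node(running_jobs, seconds_waited, decommission_timeout_s):
    """Graceful decommission: release a node once its jobs have finished,
    or once the configured wait period has elapsed, whichever comes first."""
    return running_jobs == 0 or seconds_waited >= decommission_timeout_s


policy = LoadPolicy(min_nodes=3, max_nodes=10)
print(load_based_target(policy, current_nodes=5, cpu_util=0.9, mem_util=0.4))  # 6
print(scheduled_target([ScheduleRule(time(8), time(18), 8)], time(9, 30), 3))  # 8
print(can_release_node(running_jobs=2, seconds_waited=120,
                       decommission_timeout_s=600))  # False
```

Taking the maximum of CPU and memory utilization means a cluster scales up when either resource is under pressure. For brevity, the schedule check does not handle windows that cross midnight.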

HDInsight on AKS marks a shift away from traditional security mechanisms like Kerberos. It embraces OAuth 2.0 as its security framework, providing a modern and robust approach to safeguarding data and resources. For authorization, access control in HDInsight on AKS is based on managed identities. Customers can also bring their own virtual networks and associate them during cluster setup, increasing security and enabling compliance with their enterprise policies. Clusters are isolated with namespaces to protect data and resources within the tenant. HDInsight on AKS also lets customers manage cluster access using Azure Resource Manager (ARM) roles.

Customers who’ve participated in the private preview love HDInsight on AKS.

Here’s what one user had to say about his experience.

“With HDInsight on AKS, we’ve seamlessly transitioned from the constraints of our in-house solution to a robust managed platform. This pivotal shift means our engineers are now free to channel their expertise towards core business innovation, rather than being entangled in platform management. The harmonious integration of HDInsight with other Azure products has elevated our efficiency. Enhanced security bolsters our data’s integrity and trustworthiness, while scalability ensures we can grow without hitches. In essence, HDInsight on AKS fortifies our data strategy, enabling more streamlined and effective business operations.”

Matheus Antunes, Data Architect, XP Inc

Source: microsoft.com