Cloud data lakes solve a foundational problem for big data analytics: providing secure, scalable storage for data that traditionally lives in separate silos. Data lakes were designed from the start to break down data barriers and jump-start big data analytics efforts. However, a final “silo busting” frontier remained: enabling multiple data access methods for all data (structured, semi-structured, and unstructured) that lives in the data lake.

Providing multiple data access points to shared data sets allows tools and data applications to interact with the data in their most natural way. It also lets your data lake benefit from the tools and frameworks built for a wide variety of ecosystems. For example, you may ingest your data via an object storage API, process the data using the Hadoop Distributed File System (HDFS) API, and then load the transformed data into a data warehouse using an object storage API.

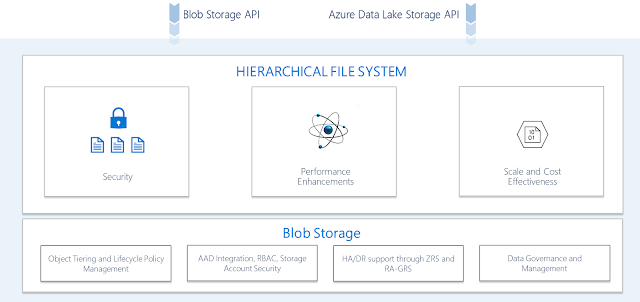


Single storage solution for every scenario

We are very excited to announce the preview of multi-protocol access for Azure Data Lake Storage! Azure Data Lake Storage is a unique cloud storage solution for analytics that offers multi-protocol access to the same data. Access through both the Azure Blob storage API and the Azure Data Lake Storage API lets you use existing object storage capabilities on Data Lake Storage accounts, which are hierarchical namespace-enabled storage accounts built on top of Blob storage. This gives you the flexibility to put all your different types of data in your cloud data lake, knowing that you can make the best use of that data as your use case evolves.

Figure: Single storage solution

Expanded feature set, ecosystem, and applications

Existing Blob storage features such as access tiers and lifecycle management policies are now unlocked for your Data Lake Storage accounts. This is paradigm-shifting because your blob data can now be used for analytics. Additionally, services such as Azure Stream Analytics, IoT Hub, Azure Event Hubs capture, Azure Data Box, Azure Search, and many others integrate seamlessly with Data Lake Storage. Important scenarios like on-premises migration to the cloud also become easier: you can now move petabyte-sized datasets to Data Lake Storage using Data Box.

Multi-protocol access for Data Lake Storage also enables the partner ecosystem to use their existing Blob storage connector with Data Lake Storage. Here is what our ecosystem partners are saying:

“Multi-protocol access for Azure Data Lake Storage is a game changer for our customers. Informatica is committed to Azure Data Lake Storage native support, and Multi-protocol access will help customers accelerate their analytics and data lake modernization initiatives with a minimum of disruption.”

You will not need to update existing applications to gain access to your data stored in Data Lake Storage. Furthermore, you can leverage the power of both your analytics and object storage applications to use your data most effectively.

Figure: Multi-protocol access enables features and ecosystem

Multiple API endpoints—Same data, shared features

This capability is unprecedented for cloud analytics services because it supports not only multiple protocols but also multiple storage paradigms. We now bring this powerful capability to your storage in the cloud. Existing tools and applications that use the Blob storage API gain these benefits without any modification. Directory and file-level access control lists (ACLs) are enforced consistently regardless of whether the Azure Data Lake Storage API or the Blob storage API is used to access the data.
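To make "multiple endpoints, same data" concrete, here is a minimal sketch of how one stored object might be addressed through each protocol. It assumes the public Azure endpoint suffixes `blob.core.windows.net` and `dfs.core.windows.net` and the `abfss://` scheme used by the Hadoop ABFS driver, none of which are spelled out in this post. The account, container, and path names are hypothetical, and the helper `endpoints_for` is purely illustrative rather than part of any SDK.

```python
from urllib.parse import quote


def endpoints_for(account: str, container: str, path: str) -> dict:
    """Return Blob, Data Lake (DFS), and Hadoop-style addresses for one object.

    Assumption: the account is hierarchical namespace-enabled, so the Blob
    container and the Data Lake file system are the same entity.
    """
    clean = path.lstrip("/")
    encoded = quote(clean)  # keeps "/" separators, percent-encodes the rest
    return {
        "blob": f"https://{account}.blob.core.windows.net/{container}/{encoded}",
        "dfs": f"https://{account}.dfs.core.windows.net/{container}/{encoded}",
        "abfss": f"abfss://{container}@{account}.dfs.core.windows.net/{clean}",
    }


if __name__ == "__main__":
    # Hypothetical names, for illustration only.
    addresses = endpoints_for("contosolake", "raw", "sales/2019/07/orders.csv")
    for protocol, address in addresses.items():
        print(f"{protocol:5} {address}")
```

All three addresses refer to the same bytes, which is why an application written against the Blob storage API and an analytics job written against the Data Lake Storage API can share a dataset without copying it.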

Figure: Multi-protocol access on Azure Data Lake Storage

Features and expanded ecosystem now available on Data Lake Storage

Multi-protocol access for Data Lake Storage brings together the best features of Data Lake Storage and Blob storage into one holistic package. It brings many Blob storage features, along with broad ecosystem support, to your data lake.

| Feature | More information |
| --- | --- |
| Access tiers | Cool and Archive tiers are now available for Data Lake Storage. |
| Lifecycle management policies | You can now set policies to tier or delete data in Data Lake Storage. |
| Diagnostics logs | Logs for the Blob storage API and Azure Data Lake Storage API are now available in v1.0 and v2.0 formats. |
| SDKs | Existing blob SDKs can now be used with Data Lake Storage. |
| PowerShell | PowerShell for data plane operations is now available for Data Lake Storage. |
| CLI | Azure CLI for data plane operations is now available for Data Lake Storage. |
| Notifications via Azure Event Grid | You can now get Blob notifications through Event Grid. |
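As an illustration of the access tier and lifecycle management features listed above, the following sketch builds a policy document that moves data to Cool, then Archive, and finally deletes it. The field names follow the Azure Storage lifecycle management policy JSON schema as commonly documented, so check them against the current reference before relying on them. The rule name, path prefix, and day thresholds are hypothetical, and `lifecycle_rule` is an illustrative helper, not an SDK function.

```python
import json


def lifecycle_rule(name, prefix, cool_after_days, archive_after_days, delete_after_days):
    """Build one rule that tiers block blobs down and eventually deletes them."""
    if not cool_after_days < archive_after_days < delete_after_days:
        raise ValueError("expected cool < archive < delete thresholds")
    return {
        "name": name,
        "enabled": True,
        "type": "Lifecycle",
        "definition": {
            # Only block blobs under the given prefix are affected.
            "filters": {"blobTypes": ["blockBlob"], "prefixMatch": [prefix]},
            "actions": {
                "baseBlob": {
                    "tierToCool": {"daysAfterModificationGreaterThan": cool_after_days},
                    "tierToArchive": {"daysAfterModificationGreaterThan": archive_after_days},
                    "delete": {"daysAfterModificationGreaterThan": delete_after_days},
                }
            },
        },
    }


# Hypothetical rule: age out raw logs after 30, 90, and 365 days.
policy = {"rules": [lifecycle_rule("age-out-raw-logs", "raw/logs/", 30, 90, 365)]}
print(json.dumps(policy, indent=2))
```

The resulting JSON is the kind of document you would submit as the storage account's management policy through the portal, CLI, or a management SDK; the submission step is left out here because it depends on your tooling.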

| Ecosystem partner | More information |
| --- | --- |
| Azure Stream Analytics | Azure Stream Analytics now writes to, as well as reads from, Data Lake Storage. |
| Azure Event Hubs capture | The capture feature within Azure Event Hubs now lets you pick Data Lake Storage as one of its destinations. |
| IoT Hub | IoT Hub message routing now allows routing to Azure Data Lake Storage Gen 2. |
| Azure Search | You can now index and apply machine learning models to your Data Lake Storage content using Azure Search. |
| Azure Data Box | You can now ingest huge amounts of data from on-premises to Data Lake Storage using Data Box. |
