Protect your stuff

In this post, we’ll cover the concepts and best practices to protect your IaaS virtual machines (VMs) on Azure Stack.

Protecting your IaaS virtual machine based applications

Azure Stack is an extension of Azure that lets you deliver IaaS Azure services from your organization’s datacenter. Consuming IaaS services from Azure Stack requires a modern approach to business continuity and disaster recovery (BC/DR). If you’re just starting your journey with Azure and Azure Stack, make sure to work through a comprehensive BC/DR strategy so your organization understands the immediate and long-term impact of modernizing applications in the context of cloud. If you already have Azure Stack, keep in mind that each application must have a well-articulated BC/DR plan calling out the resiliency, reliability, and availability requirements that meet the business needs of your organization.

What Azure Stack is and what it isn’t

Since launching Azure Stack at Ignite 2017, we’ve received feedback from many customers on the challenges they face within their organizations when evangelizing Azure Stack to their end customers. Most concerns stem from the stark differences between Azure Stack and traditional virtualization. In the context of modernizing BC/DR practices, three misconceptions stand out:

Azure Stack is just another virtualization platform

Azure Stack is delivered as an appliance on prescriptive hardware co-engineered with our integrated system partners. Your focus must be on the services delivered by Azure Stack and the applications your customers will deploy on the system. You are responsible for working with your application teams to define how they will achieve high availability, backup and recovery, disaster recovery, and monitoring in the context of modern IaaS, separate from the infrastructure running the services.

I should be able to use the same virtualization protection schemes with Azure Stack

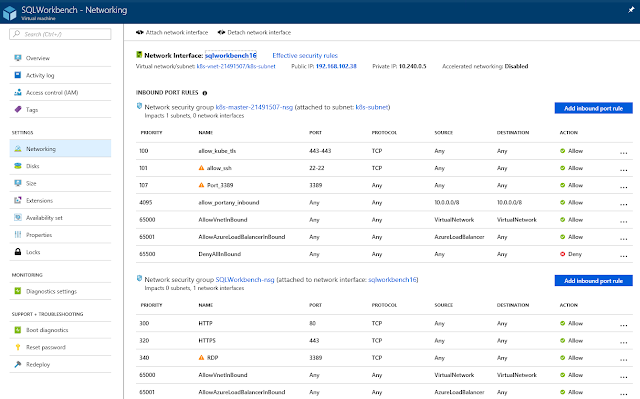

Azure Stack is delivered as a sealed system with multiple layers of security to protect the infrastructure. Constraints include:

◈ Azure Stack operators only have constrained administrative access to the system. Elevated access to the system is only possible through Microsoft support.

◈ Scale unit nodes and infrastructure services have code integrity enabled.

◈ At the networking layer, the traffic flow defined in the switches is locked down at deployment time using access control lists.

Given these constraints, there is no opportunity to install backup or replication agents on the scale-unit nodes, grant access to the nodes from an external device for replication and snapshotting, or physically attach external storage devices for storage-level replication to another site.

Another ask from customers is the possibility of deploying one Azure Stack scale-unit across multiple datacenters or sites. Azure Stack doesn’t support a stretched or multi-site topology for scale-units. In a stretched deployment, the expectation is that nodes in one site can go offline with the remaining nodes in the secondary site available to continue running applications. From an availability perspective, Azure Stack only supports N-1 fault tolerance, so losing half of the node count will take the system offline. In addition, based on how scale-units are configured, Azure Stack only supports fault domains at a node level. There is no concept of a site within the scale-unit.
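To make the N-1 point concrete, here is a minimal sketch in Python. The function name, the node counts, and the even split across two sites are hypothetical values chosen for illustration. The only rule taken from the text above is that a scale-unit tolerates the loss of a single node.

```python
def survives_node_loss(total_nodes: int, failed_nodes: int,
                       tolerated_failures: int = 1) -> bool:
    """Return True if the scale-unit stays online after `failed_nodes` fail.

    `tolerated_failures=1` models the N-1 fault tolerance described above.
    """
    if not 0 <= failed_nodes <= total_nodes:
        raise ValueError("failed_nodes must be between 0 and total_nodes")
    return failed_nodes <= tolerated_failures


# Hypothetical 8-node scale-unit stretched evenly across two sites.
total_nodes = 8
nodes_per_site = total_nodes // 2

# A single node failure is within the N-1 tolerance.
print(survives_node_loss(total_nodes, 1))

# Losing one whole site takes out half the nodes, which exceeds N-1.
print(survives_node_loss(total_nodes, nodes_per_site))
```

Under these assumptions the first check passes and the second fails, which is why stretching one scale-unit across sites does not provide site-level resiliency.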

I am not deploying modern applications in Azure, so none of this applies to me

Azure Stack is designed to offer cloud services in your datacenter. There is a clear separation between the operation of the infrastructure and how IaaS VM-based applications are delivered. Even if you’re not planning to deploy any applications to Azure, deploying to Azure Stack is not “business as usual” and will require thinking through the BC/DR implications throughout the entire lifecycle of your application.

Define your level of risk tolerance

With the understanding that Azure Stack requires a different approach to BC/DR for your IaaS VM-based applications, let’s look at the implications of having one or more Azure Stack systems, the physical and logical constructs in Azure Stack, and the recovery objectives you and your application owners need to focus on.

How far apart will you deploy Azure Stack systems

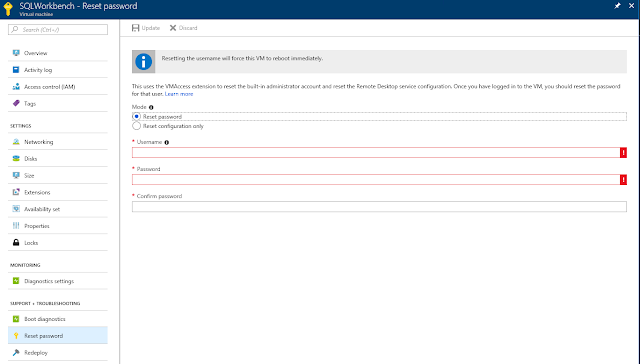

Let’s start by defining the impact radius you want to protect against in the event of a disaster. This can be as small as a rack in a co-location facility or as large as an entire region of a country or continent. Within the impact radius, you can choose to deploy one or more Azure Stack systems. If the region is large enough, you may even have multiple datacenters close together, each with Azure Stack systems. The key takeaway is that if the site goes offline due to a disaster or catastrophic event, no amount of redundancy within that site will keep the Azure Stack systems online. If your intent is to survive the loss of an entire site, as the diagram shows, then you must consider deploying Azure Stack systems into multiple geographic locations separated by enough distance that a disaster in one location does not impact any other location.

Help your application owners understand the physical and logical layers of Azure Stack

Next, it’s important to understand the physical and logical layers that come together in an Azure Stack environment. The Azure Stack system running all the foundational services and your applications physically resides within a rack in a datacenter. Each deployment of Azure Stack is a separate instance or cloud with its own portal. The diagram below shows the physical and logical layering that’s common for all Azure Stack systems deployed today and for the foreseeable future.

Define the recovery time objectives for each application with your application owners

Now that you have a clear understanding of your risk tolerance if a system goes offline, you need to decide on the protection schemes for your applications. You need to make sure you can quickly recover applications and data on a healthy system. That means designing your applications to be highly available within a scale-unit, using availability sets to protect against hardware failures. You should also consider the possibility of an application going offline due to corruption or accidental deletion. Recovery can be as simple as scaling out an application or restoring from a backup.

To survive an outage of the entire system, you’ll need to identify the availability requirements of each application, where the application can run in the event of an outage, and what tools you need to introduce to enable recovery. If your application can run temporarily in Azure, you can use services like Azure Site Recovery and Azure Backup to protect your application. Another option is to have additional Azure Stack systems fully deployed, operational, and ready to run applications. The time required to get the application running on a secondary system is the recovery time objective (RTO). This objective is established between you and the application owners. Some application owners will only tolerate minimal downtime, while others are OK with multiple days of downtime if the data is protected in a separate location. How you achieve this RTO will differ from one application to another. The diagram below summarizes the common protection schemes used at the VM or application level.

In the event of a disaster, there will be no time to request an on-demand deployment of Azure Stack to a secondary location. If you don’t have a deployed system in a secondary location, you will need to order one from your hardware partner. The time required to deliver, install, and deploy the system is measured in weeks.
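The RTO reasoning above can be sketched as a simple check. This is an illustrative model, not a planning tool: the `AppRecoveryPlan` type, the application names, and all hour values are hypothetical, and the three-week hardware lead time is a placeholder for the "weeks" mentioned above.

```python
from dataclasses import dataclass

# Assumption: delivery, installation, and deployment take "weeks";
# three weeks is a placeholder value, not a vendor commitment.
HARDWARE_LEAD_TIME_HOURS = 3 * 7 * 24


@dataclass
class AppRecoveryPlan:
    name: str
    rto_hours: float      # RTO agreed with the application owner
    restore_hours: float  # estimated time to restore onto a healthy system


def estimated_recovery_hours(plan: AppRecoveryPlan,
                             secondary_deployed: bool) -> float:
    """Estimate time to recover, including hardware lead time if needed."""
    wait = 0 if secondary_deployed else HARDWARE_LEAD_TIME_HOURS
    return wait + plan.restore_hours


def meets_rto(plan: AppRecoveryPlan, secondary_deployed: bool) -> bool:
    """Return True if the estimated recovery time fits within the RTO."""
    return estimated_recovery_hours(plan, secondary_deployed) <= plan.rto_hours


plans = [
    AppRecoveryPlan("order-processing", rto_hours=4, restore_hours=2),
    AppRecoveryPlan("monthly-reporting", rto_hours=72, restore_hours=8),
]
for plan in plans:
    for secondary in (True, False):
        status = "meets" if meets_rto(plan, secondary) else "misses"
        print(f"{plan.name}: secondary deployed={secondary} -> {status} RTO")
```

With these placeholder numbers, even the application that tolerates multiple days of downtime misses its RTO when no secondary system is already deployed.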

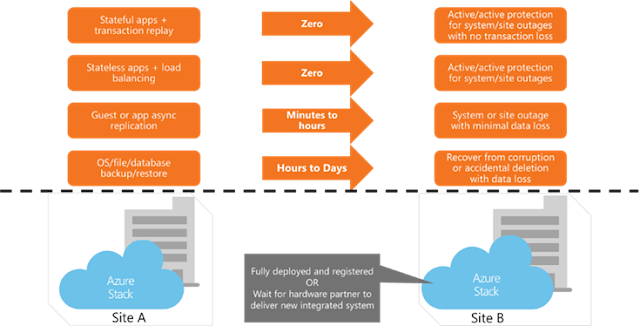

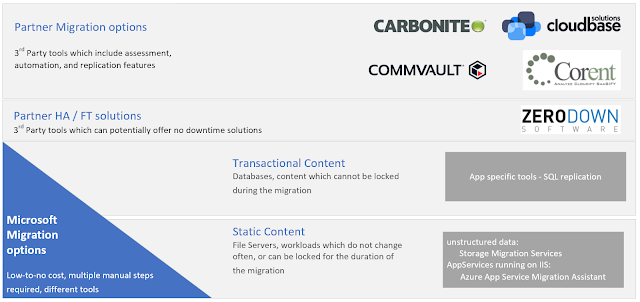

Establish the offerings for application and data protection

Now that you know what you need to protect on Azure Stack and your risk tolerance for each application, let’s review some specific patterns used with IaaS VMs.

Data protection

Applications deployed into IaaS VMs can be protected at the guest OS level using backup agents. Data can be restored to the same IaaS VM, to a new VM on the same system, or a different system in the event of a disaster. Backup agents support multiple data sources in an IaaS VM such as:

◈ Disk: This requires block-level backup of one, some, or all disks exposed to the guest OS. It protects the entire disk and captures any changes at the block level.

◈ File or folder: This requires file system-level backup of specific files and folders on one, some, or all volumes attached to the guest OS.

◈ OS state: This requires backup targeted at the OS state.

◈ Application: This requires a backup coordinated with the application installed in the guest OS. Application-aware backups typically include quiescing input and output in the guest for application consistency (for example, Volume Shadow Copy Service (VSS) in the Windows OS).

Application data replication

Another option is to use replication at the guest OS level or at the application level to make data available in a different system. The replication isn’t offloaded to the underlying infrastructure; it’s handled at the guest OS level or above. For example, applications like SQL Server support asynchronous replication in a distributed availability group.

High availability

For high availability, you need to start by understanding the data persistence model of your applications:

◈ Stateful workloads write data to one or more repositories. It’s necessary to understand which parts of the architecture need point-in-time data protection and high availability to recover from a catastrophic event.

◈ Stateless workloads, on the other hand, don’t contain data that needs to be protected. These workloads typically support on-demand scale-up and scale-down and can be deployed in multiple locations in a scale-out topology behind a load balancer.

To support application-level high availability within an Azure Stack system, multiple virtual machines are grouped into an availability set. Applications deployed in an availability set sit behind a load balancer that distributes incoming traffic randomly among the virtual machines.

Across Azure Stack systems, a similar approach is possible with the following differences: the load balancer must be external to both systems or in Azure (for example, Traffic Manager), and availability sets do not span independent Azure Stack systems.
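The two-tier pattern can be sketched as follows. This is a toy model under stated assumptions, not how any specific load balancer is implemented: the `AzureStackSystem` type, the system and VM names, and the priority-ordered choice between systems are all hypothetical. The only behaviors taken from the text are that traffic is distributed randomly among the VMs of an availability set, and that each availability set belongs to exactly one system.

```python
import random
from dataclasses import dataclass, field


@dataclass
class AzureStackSystem:
    name: str
    online: bool = True
    # VMs of one application, grouped in an availability set on this system.
    availability_set: list = field(default_factory=list)


def route_request(systems, rng=None):
    """Pick the first online system, then a random VM in its availability set.

    The outer loop stands in for a load balancer external to both systems;
    trying systems in list order is an assumption made for this sketch.
    """
    rng = rng if rng is not None else random
    for system in systems:
        if system.online and system.availability_set:
            return system.name, rng.choice(system.availability_set)
    raise RuntimeError("no Azure Stack system is available")


primary = AzureStackSystem("site-a", availability_set=["web-1", "web-2"])
secondary = AzureStackSystem("site-b", availability_set=["web-3", "web-4"])

print(route_request([primary, secondary]))  # served by a VM on site-a

primary.online = False                      # simulate loss of the primary system
print(route_request([primary, secondary]))  # served by a VM on site-b
```

Each system keeps its own availability set, so surviving the loss of one system depends on the external balancer and on the application already being deployed on the other system.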