In the more than 150 years since its founding, the automotive industry has never experienced innovation and transformational change as rapid as it is seeing today. Since the advent of the horseless carriage in the 1860s, vehicle manufacturers have continued to improve the quality, safety, speed, and comfort of the millions of vehicles sold around the world each year.

Today, however, all eyes are on autonomous vehicles as a cornerstone of future human mobility.

Exponential market growth expected

Over the past decade, the impact of emerging technologies such as AI, machine vision, and high-performance computing (HPC) has changed the face of the automotive industry. Today, nearly every car manufacturer in the world is exploring the potential and power of these technologies to usher in a new age of self-driving vehicles. Microsoft Azure HPC and Azure AI infrastructure are tools to help accomplish that.

Data suggests that the global autonomous vehicle market, including cars with level two autonomy features, was worth USD76 billion in 2020 and is expected to grow exponentially over the coming years to reach more than USD2.1 trillion by 2030, as the level of autonomy in cars continues to increase.
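To put that projection in perspective, the two figures quoted above can be turned into an implied annual growth rate. The short sketch below is illustrative only: it assumes smooth year-over-year compounding between 2020 and 2030, and the function and variable names are ours rather than part of any market study.

```python
# Implied compound annual growth rate (CAGR) from the two quoted market figures.
# Assumption: growth compounds at a constant rate each year from 2020 to 2030.

def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the constant annual rate that grows start_value into end_value."""
    return (end_value / start_value) ** (1 / years) - 1

start_usd_bn = 76.0     # 2020 market size in USD billions (from the text)
end_usd_bn = 2100.0     # 2030 projection in USD billions (the text says "more than")
years = 2030 - 2020

cagr = implied_cagr(start_usd_bn, end_usd_bn, years)
print(f"Implied CAGR: {cagr:.1%}")
```

Under these assumptions the market would need to grow by roughly 39% every year for a decade, which is what "exponential" growth means in practice here.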

The platformization of autonomous taxis also holds enormous potential for the broader adoption and usage of autonomous vehicles. Companies like Tesla, Waymo, NVIDIA, and Zoox are all investing in the emerging category of driverless transportation that leverages powerful AI and HPC capabilities to transform the concept of human mobility. However, several challenges still need to be overcome for autonomous vehicles to reach their potential and become the de facto option for car buyers, passengers, and commuters.

Common challenges persist

One of the most important challenges with autonomous vehicles is ethics. If the vehicle determines what action to take during a trip, how does it decide what holds the most value during an emergency? To illustrate, if an autonomous vehicle is traveling down a road and two pedestrians suddenly run across the road from opposite directions, what are the ethics underpinning whether the vehicle swerves to collide with one pedestrian instead of another?

Another of the top challenges with autonomous vehicles is that the AI algorithms underpinning the technology are continuously learning and evolving. Autonomous vehicle AI software relies heavily on deep neural networks, with a machine learning algorithm tracking on-road objects as well as road signs and traffic signals, allowing the vehicle to ‘see’ and respond to, for example, a red traffic light.

Where the technology still needs refinement is in reading the more subtle cues that motorists are instinctively aware of. For example, a slightly raised hand by a pedestrian may indicate they are about to cross the road. A human will see and understand the cue far better than an AI algorithm does, at least for now.

Another challenge is whether there is sufficient technology and connectivity infrastructure for autonomous vehicles to deliver their full value to passengers, especially in developing countries. With car journeys from A to B evolving into experiences, people will likely want to interact with their cars based on their personal technology preferences, linked to tools from leading technology providers. In addition, autonomous vehicles will need to connect to the world around them to guarantee safety and comfort to their passengers.

As such, connectivity will be integral to the mass adoption of autonomous vehicles. The advent and growing adoption of 5G may improve connectivity and enable communication between autonomous vehicles, which could enhance their safety and functioning.

Road safety is not the only concern with autonomous vehicles. Autonomous vehicles will be designed to be hyper-connected, almost like an ultra-high-tech network of smartphones on wheels. However, an autonomous vehicle must be precisely that: autonomous in its own right. If connectivity is lost, the vehicle must still be able to operate fully autonomously.

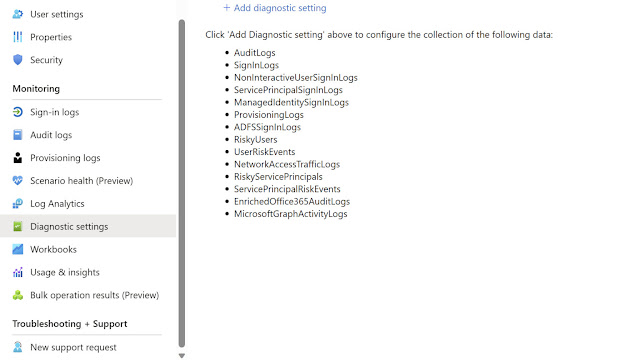

That said, cyberattacks may pose a greater threat to the occupants of autonomous vehicles than to drivers of the legacy vehicles currently on the road. In the wake of a successful cyberattack, threat actors may gain access to sensitive personal information or even gain control over key vehicle systems. Manufacturers and software providers will need to take every step necessary to protect their vehicles and systems from compromise.

Lastly, there are social and cultural barriers to the mainstreaming of autonomous vehicles, with many people across the globe still very uncomfortable with the idea of giving up control of their cars to a machine. Once consumers can experience autonomous drives and see how the technology continuously monitors a complete 360-degree view around the vehicle and does not get drowsy or distracted, confidence that autonomous vehicles are safe and secure will grow, and adoption rates will rise.

The future of travel is (nearly) upon us

As the world moves closer to a future where autonomous vehicles are a ubiquitous presence on our roads, the complex challenges that must be addressed to make this a safe and viable option become ever more apparent. The adoption of autonomous vehicles is not simply a matter of developing the technology, but also requires a complete overhaul of how we approach transportation systems and infrastructure.

Companies and researchers are investing heavily in solving the many challenges posed by autonomous vehicle adoption. For example, researchers are addressing the ethical challenges of vehicles making life-or-death decisions by developing ethical frameworks that guide the decision-making processes of these vehicles.

These frameworks define the principles and values that should be considered when autonomous vehicles encounter ethical dilemmas, such as choosing between protecting passengers and protecting pedestrians. Such frameworks can help ensure that autonomous vehicles make decisions that are consistent with societal values and moral principles.

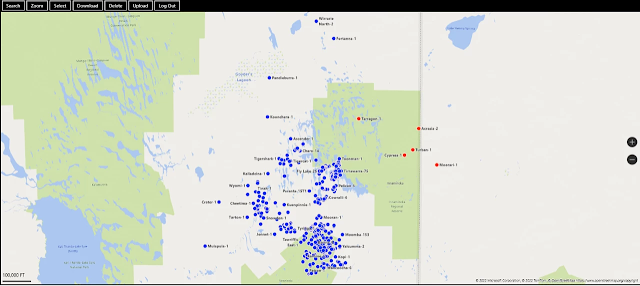

Significant investments are also being made in updating existing infrastructure to accommodate autonomous vehicles. Roads, highways, and parking areas must be equipped with the sensors, cameras, and communication systems needed to support autonomous vehicles.

Companies are also working collaboratively with regulators, researchers, and OEMs to develop policies that ensure that autonomous vehicles can operate safely alongside traditional vehicles. This includes considerations such as how traffic signals, road markings, and signage need to be adapted to support autonomous vehicles.

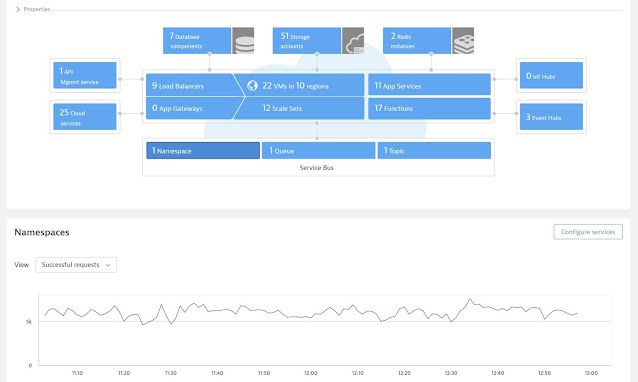

In 2021, for example, Microsoft teamed up with a market-leading self-driving car innovator to unlock the potential of cloud computing for autonomous vehicles, leveraging Microsoft Azure to commercialize autonomous vehicle solutions at scale.

Another global automotive group also recently announced a collaboration with Microsoft to build a dedicated cloud-based platform for its autonomous car systems that are currently in development. This ties in with their ambitious plans to invest more than USD32 billion in the digitalization of the car by 2025.

NVIDIA is also taking bold steps to fuel the growth of the autonomous vehicle market. The NVIDIA DRIVE platform is a full-stack AI compute solution for the automotive industry, scaling from advanced driver-assistance systems for passenger vehicles to fully autonomous robotaxis. The end-to-end solution spans from the cloud to the car, enabling AI training and simulation in the data center, in addition to running deep neural networks in the vehicle for safe and secure operations. The platform is being used by hundreds of companies in the industry, from leading automakers to new energy vehicle makers.

Key takeaways

There is little doubt that the future of human mobility is built upon the ground-breaking innovation and technological capabilities of autonomous vehicles. While some challenges still exist, the underlying technology continues to mature and improve, paving the way for an increase in the adoption of self-driving cars over the long term.

The technology may soon proliferate and displace other, less safe modes of transport, with huge potential upsides for many aspects of our daily lives: saving lives and reducing the number of accidents, decreasing commute times, lessening congestion by optimizing traffic flow and patterns, and extending the freedom of mobility for all.

With vehicle manufacturers and software firms continuously iterating on autonomous vehicle technology, educating the public on its benefits, and working with lawmakers to overcome regulatory hurdles, we may all soon enjoy a new world, one where technology gets us safely from one destination to another, leaving us free to simply enjoy the view.

Source: microsoft.com