In my recent conversations with customers, they have shared the security challenges they are facing on-premises. These challenges include recruiting and retaining security experts, quickly responding to an increasing number of threats, and ensuring that their security policies are meeting their compliance requirements.

Moving to the cloud can help solve these challenges. Microsoft Azure provides a highly secure foundation for hosting your infrastructure and applications, along with built-in security services and unique intelligence to help you quickly protect your workloads and stay ahead of threats. Microsoft’s security tools span identity, networking, data, and IoT, and can help you protect against threats and manage your security posture. One of our integrated, first-party services is Azure Security Center.

Security Center is built into the Azure platform, making it easy for you to start protecting your workloads at scale in just a few steps. Our agent-based approach allows Security Center to continuously monitor and assess your security state across Azure, other clouds, and on-premises. It has helped customers like Icertis and Stanley Healthcare strengthen and simplify their security monitoring. Security Center gives you instant insight into issues and the flexibility to solve them with integrated first-party or third-party solutions. In just a few clicks, you can have peace of mind knowing Security Center is enabled to help you reduce the complexity involved in security management.

Today we are announcing several capabilities that will help you strengthen your security posture and protect against threats across hybrid environments.

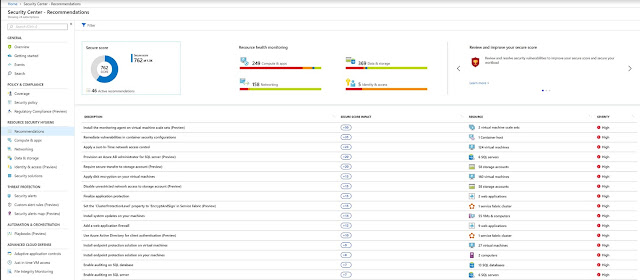

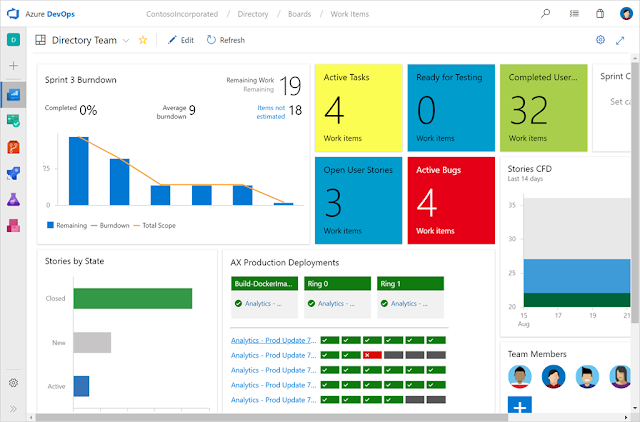

Strengthen your security posture

Improve your overall security with Secure Score: Secure Score gives you visibility into your organizational security posture. It prioritizes all of your recommendations across subscriptions and management groups, showing you which vulnerabilities to address first. When you remediate the most pressing issues first, you can see how your actions improve your Secure Score and thus your security posture.

Interact with a new network topology map: Security Center now gives you visibility into the security state of your virtual networks, subnets and nodes through a new network topology map. As you review the components of your network, you can see recommendations to help you quickly respond to detected issues in your network. Also, Security Center continuously analyzes the network security group rules in the workload and presents a graph that contains the possible reachability of every VM in that workload on top of the network topology map.

Define security policies at an organizational level to meet compliance requirements: You can set security policies at an organizational level to ensure all your subscriptions are meeting your compliance requirements. To make things even simpler, you can also set security policies for management groups within your organization. To easily understand if your security policies are meeting your compliance requirements, you can quickly view an organizational compliance score as well as scores for individual subscriptions and management groups and then take action.

Monitor and report on regulatory compliance using the new regulatory compliance dashboard: The Security Center regulatory compliance dashboard helps you monitor the compliance of your cloud environment. It provides you with recommendations to help you meet compliance standards such as CIS, PCI, SOC and ISO.

Customize policies to protect information in Azure data resources: You can now customize and set an information policy to help you discover, classify, label and protect sensitive data in your Azure data resources. Protecting data can help your enterprise meet compliance and privacy requirements as well as control who has access to highly sensitive information. To learn more on data security, visit our documentation.

Assess the security of containers and Docker hosts: You can gain visibility into the security state of your containers running on Linux virtual machines. Specifically, you can gain insight into the virtual machines running Docker as well as the security assessments that are based on the CIS for Docker benchmark.

Protect against evolving threats

Integration with Windows Defender Advanced Threat Protection servers (WDATP): Security Center can detect a wide variety of threats targeting your infrastructure. With the integration of WDATP, you now get endpoint threat detection (i.e., Server EDR) for your Windows Servers as part of Security Center. Microsoft’s vast threat intelligence enables WDATP to identify and notify you of attackers’ tools and techniques, so you can understand threats and respond. To uncover more information about a breach, you can explore the details in the interactive Investigation Path within the Security Center blade. To get started, WDATP is automatically enabled for Azure and on-premises Windows Servers that have onboarded to Security Center.

Threat detection for Linux: Security Center’s advanced threat detection capabilities are available across a wide variety of Linux distros, so that whatever operating system your workloads run on and wherever they run, you gain the insights you need to respond to threats quickly. Capabilities include detecting suspicious processes, dubious login attempts, and kernel module tampering.

Adaptive network controls: One of the biggest attack surfaces for workloads running in the public cloud is connections to and from the public internet. Security Center can now learn the network connectivity patterns of your Azure workload and provide you with a set of recommendations for your network security groups on how to better configure your network access policies and limit your exposure to attack. These recommendations also use Microsoft’s extensive threat intelligence reports to make sure that traffic from known bad actors is never recommended for access.
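To make the idea concrete, here is a minimal Python sketch of how a recommendation like this could be derived from observed traffic. It is an illustration only, not how Security Center works internally: the function name `recommend_allow_rules`, the `(source_ip, port)` flow format, and the `min_connections` threshold are all hypothetical assumptions.

```python
from collections import defaultdict


def recommend_allow_rules(observed_flows, known_bad_sources, min_connections=3):
    """Suggest per-port allow lists from observed inbound connections.

    observed_flows: iterable of (source_ip, port) tuples (hypothetical format).
    known_bad_sources: set of IPs flagged by threat intelligence.
    min_connections: assumed threshold for treating a source as a regular pattern.
    """
    # Count how often each source connected to each port.
    counts = defaultdict(int)
    for source_ip, port in observed_flows:
        counts[(port, source_ip)] += 1

    # Keep only recurring sources, and never recommend a known bad actor.
    rules = defaultdict(list)
    for (port, source_ip), count in sorted(counts.items()):
        if count >= min_connections and source_ip not in known_bad_sources:
            rules[port].append(source_ip)
    return dict(rules)
```

Any source that does not appear in the returned allow list for a port would be a candidate for blocking in the corresponding network security group rule.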

Threat detection for Azure Storage blobs and Azure PostgreSQL: In addition to being able to detect threats targeting your virtual machines, Security Center can detect threats targeting data in Azure Storage accounts and Azure PostgreSQL servers. This will help you respond to unusual attempts to access or exploit data and quickly investigate the problem.

Security Center can also detect threats targeting Azure App Services and provide recommendations to protect your applications.

Fileless Attack Detection: Security Center uses a variety of advanced memory forensic techniques to identify malware that persists only in memory and is not detected through traditional means. You can use the rich set of contextual information for alert triage, correlation, analysis and pattern extraction.

Adaptive application controls: Adaptive application controls help you audit and block unwanted applications from running on your virtual machines. To help you respond to suspicious behavior detected in your applications or deviations from the policies you set, Security Center now generates an alert in Security alerts when your whitelisting policies are violated. You can now also enable adaptive application controls for groups of virtual machines that fall under the “Not recommended” category to ensure that you whitelist all applications running on your Windows virtual machines in Azure.

Just-in-Time VM Access: With Just-in-Time VM Access, you can limit your exposure to brute force attacks by locking down management ports, so they are only open for a limited time. You can set rules for how users can connect to these ports, and when someone needs to request access. You can now ensure that the rules you set for Just-in-Time VM access will not interfere with any existing configurations you have already set for your network security group.
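The core idea, ports closed by default and opened only for a bounded time on request, can be sketched in a few lines of Python. This is a conceptual model only and does not reflect Security Center's API: the `JitPolicy` and `AccessGrant` names, the `max_duration` setting, and the in-memory grant list are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class AccessGrant:
    port: int
    source_ip: str
    expires_at: datetime


class JitPolicy:
    """Hypothetical in-memory model of just-in-time access to management ports."""

    def __init__(self, max_duration):
        self.max_duration = max_duration
        self.grants = []

    def request_access(self, port, source_ip, duration, now):
        # Reject requests that exceed the longest window the policy permits.
        if duration > self.max_duration:
            raise ValueError("requested duration exceeds policy maximum")
        grant = AccessGrant(port, source_ip, now + duration)
        self.grants.append(grant)
        return grant

    def is_allowed(self, port, source_ip, now):
        # Closed by default: only an unexpired, matching grant opens the port.
        return any(
            g.port == port and g.source_ip == source_ip and now < g.expires_at
            for g in self.grants
        )
```

Because access expires on its own, a forgotten grant does not leave a management port exposed indefinitely.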

File Integrity Monitoring (FIM): To help protect your operating system and application software from attack, Security Center continuously monitors the behavior of your Windows files, Windows registry, and Linux files. For Windows files, you can now detect changes through recursion, wildcards, and environment variables. If an abnormal change to the files or malicious behavior is detected, Security Center will alert you so that you can stay in control of your files.
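For readers curious what recursion, wildcards, and environment variables mean for a monitored path, the Python sketch below shows one generic way to expand such a pattern and detect changes by comparing content hashes. It is a simplified illustration using only the standard library, not Security Center's implementation; the function names and the snapshot format are assumptions.

```python
import glob
import hashlib
import os


def expand_patterns(patterns):
    """Expand environment variables and wildcards; '**' recurses into subfolders."""
    paths = set()
    for pattern in patterns:
        expanded = os.path.expandvars(pattern)
        for path in glob.glob(expanded, recursive=True):
            if os.path.isfile(path):
                paths.add(os.path.abspath(path))
    return paths


def snapshot(patterns):
    """Map each monitored file to a SHA-256 digest of its contents."""
    digests = {}
    for path in sorted(expand_patterns(patterns)):
        with open(path, "rb") as handle:
            digests[path] = hashlib.sha256(handle.read()).hexdigest()
    return digests


def diff_snapshots(before, after):
    """Report files added, removed, or modified between two snapshots."""
    common = before.keys() & after.keys()
    return {
        "added": sorted(set(after) - set(before)),
        "removed": sorted(set(before) - set(after)),
        "modified": sorted(p for p in common if before[p] != after[p]),
    }
```

Taking a snapshot on a schedule and diffing it against the previous one yields the list of changes that would be worth alerting on.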

Start using Azure Security Center’s new capabilities today

The following capabilities are generally available: Enterprise-wide security policies, Adaptive application controls, Just-in-Time VM Access for a specific role, adjusting network security group rules in Just-in-Time VM Access, File Integrity Monitoring (FIM), threat detection for Linux, detecting threats on Azure App Services, Fileless Attack Detection, alert confidence score, and integration with Windows Defender Advanced Threat Protection (ATP).

These features are available in public preview: Security state of containers, network visibility map, information protection for Azure SQL, threat detection for Azure Storage blobs and Azure PostgreSQL, and Secure Score.