We are thrilled to present the release of Bot Framework SDK version 4.2 and we want to use this opportunity to provide additional updates on Conversational-AI releases from Microsoft.

In the SDK 4.2 release, the team focused on enhancing monitoring, telemetry, and analytics capabilities of the SDK by improving the integration with Azure App Insights. As with any release, we fixed a number of bugs, continued to improve Language Understanding (LUIS) and QnA integration, and enhanced our engineering practices. There were additional updates across the other areas like language, prompt and dialogs, and connectors and adapters. You can review all the changes that went into 4.2 in the detailed changelog.

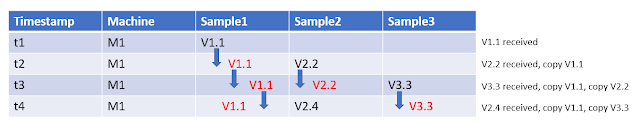

Bot Framework can use App Insights telemetry to show how your bot is performing and to track key metrics. For example, once you enable App Insights for your bot, the SDK automatically traces important information for each activity sent to your bot. For each activity (for example, a user typing an utterance to your bot), the SDK emits traces for every stage of activity processing. These traces can then be placed on a timeline showing each component’s latency and performance, as you can see in the following image.

This can help identify slow responses and further optimize your bot performance.

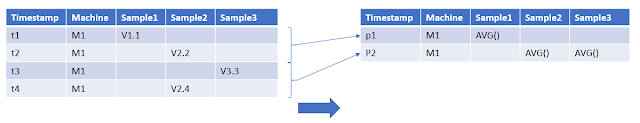
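The timeline idea above can be illustrated with a small, SDK-independent sketch. The `ActivityTrace` class and its stage names are hypothetical and are not part of the Bot Framework SDK or App Insights; they only show how per-stage start offsets and durations for one activity might be recorded and compared.

```python
import time
from contextlib import contextmanager


class ActivityTrace:
    """Hypothetical helper: records (stage, start offset, duration) for one activity."""

    def __init__(self, clock=time.perf_counter):
        self._clock = clock
        self._origin = clock()  # time at which processing of the activity began
        self.stages = []

    @contextmanager
    def stage(self, name):
        """Time one processing stage and append it to the trace."""
        start = self._clock()
        try:
            yield
        finally:
            end = self._clock()
            self.stages.append((name, start - self._origin, end - start))

    def slowest(self):
        """Return the name of the stage with the longest duration."""
        return max(self.stages, key=lambda record: record[2])[0]
```

Wrapping each step of message handling in `with trace.stage("..."):` yields the data needed to draw a timeline and to spot the stage responsible for a slow response.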

Beyond basic bot performance analysis, we have instrumented the SDK to emit traces for the dialog stack, primarily the waterfall dialog. The following image visualizes the behavior of a waterfall dialog. Specifically, it shows three events before and after someone completes a dialog, across all sessions. The center “Initial Event” is the starting point, which fans left and right to show before and after, respectively. This view is great for showing the drop-off rate, shown in red, and where most conversations ‘flow’, indicated by the thickness of the line. It is a default App Insights report; all we had to do was connect the wires between the SDK, dialogs, and App Insights.
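As a rough illustration of the drop-off numbers behind such a view, the sketch below computes, for each waterfall step, how many sessions reached it and what share did not continue to the next step. The `step_funnel` function and the session data shape are assumptions made for this example; they do not reflect the schema App Insights actually stores.

```python
from collections import Counter


def step_funnel(sessions, steps):
    """Return (step, sessions reached, drop-off rate) for each waterfall step.

    sessions: iterable of lists of step names each session reached (hypothetical shape).
    steps: the waterfall's step names, in order.
    """
    reached = Counter()
    for visited in sessions:
        reached.update(set(visited))  # count each step at most once per session

    funnel = []
    for index, step in enumerate(steps):
        count = reached[step]
        is_last = index == len(steps) - 1
        if is_last or count == 0:
            drop_off = None  # no following step, or nothing to compare against
        else:
            drop_off = (count - reached[steps[index + 1]]) / count
        funnel.append((step, count, drop_off))
    return funnel
```

A high drop-off rate on a particular step suggests that the prompt at that step is where users abandon the dialog.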

Telemetry updates for SDK 4.2

With the SDK 4.2 release, we started improving the built-in monitoring, telemetry, and analytics capabilities provided by the SDK. Our goal is to give developers the ability to understand their overall bot health, detailed reports about the bot’s conversation quality, and tools to understand where conversations fall short. To do that, we decided to further enhance the built-in integration with Microsoft Azure Application Insights. To that end, we have streamlined the integration and the default telemetry emitted from the SDK. This includes waterfall dialog instrumentation, docs, examples for querying data, and a Power BI dashboard.

The SDK and integration with App Insights provide a lot more capabilities, for example:

◈ Complete activity tracing including all dependencies.

◈ LUIS telemetry, including non-functional metrics such as latency and error rate, and functional metrics such as intent distribution, intent sentiment, and more.

◈ QnA telemetry, including non-functional metrics such as latency and error rate, and functional metrics such as QnA score and relevance.

◈ Word cloud and common utterances, showing the most-used words and phrases. This can help you spot intents or QnA pairs you missed.

◈ Conversation length expressed in terms of time and step count.

◈ Come up with your own reports using custom queries.

◈ Custom logging to your bot.
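Two of the reports listed above, most-used words and conversation length, reduce to simple aggregations. The sketch below shows one way to compute them offline. The function names, the stop-word list, and the input shapes (plain utterance strings and `datetime` timestamps) are assumptions for illustration, not what the SDK or the Power BI dashboard uses.

```python
import re
from collections import Counter

# Hypothetical stop-word list; a real report would use a fuller one.
STOP_WORDS = frozenset({"the", "a", "to", "i", "my"})


def top_words(utterances, limit=3):
    """Return the most frequent non-stop-words across user utterances."""
    words = []
    for text in utterances:
        for word in re.findall(r"[a-z']+", text.lower()):
            if word not in STOP_WORDS:
                words.append(word)
    return Counter(words).most_common(limit)


def conversation_length(timestamps):
    """Return (elapsed seconds, step count) for one conversation's activity timestamps."""
    ordered = sorted(timestamps)
    elapsed = (ordered[-1] - ordered[0]).total_seconds() if ordered else 0.0
    return elapsed, len(ordered)
```

Frequent words that map to no existing intent or QnA pair are good candidates for new training examples.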

Solutions

The creation of a high-quality conversational experience requires a foundational set of capabilities. To help customers and partners succeed with building great conversational experiences, we released the enterprise bot template at Microsoft Ignite 2018. This template brings together all the best practices and supporting components we've identified through the building of conversational experiences.

Synchronized with the SDK 4.2 release, we have delivered updates to the enterprise template that provide additional localization for LUIS models and responses, including multi-language dispatcher support for customers who wish to support multiple native languages in one bot deployment. We’ve also replaced custom telemetry work with the new native SDK support for dialog telemetry and added a new Conversational Analytics Power BI dashboard providing deep analytics into usage, dialog quality, and more.

The enterprise template is now joined by a retail customer support template, which provides further LUIS models for this scenario and example dialogs for order management, stock availability, and store location.
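To make the multi-language idea concrete, here is a minimal sketch of choosing a per-language model from an incoming locale, with a fallback to a default language. The `pick_model` function and the model names are hypothetical; the enterprise template’s actual configuration and lookup logic may differ.

```python
def pick_model(locale, models, default="en"):
    """Resolve a locale such as 'de-DE' to one of the configured language models.

    models: dict keyed by lowercase locale or language code (hypothetical shape).
    """
    normalized = (locale or "").strip().lower()
    if normalized in models:  # exact match, e.g. "zh-cn"
        return models[normalized]
    language = normalized.split("-")[0]
    if language in models:  # language-only match, e.g. "de"
        return models[language]
    return models[default]  # unknown or missing locale


# Hypothetical model names for one bot deployment.
MODELS = {"en": "dispatch-en", "de": "dispatch-de", "zh": "dispatch-zh"}
```

With this layout, a single deployment can serve `de-DE` and `de-AT` users from the same German model while unsupported locales fall back to English.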

The virtual assistant solution accelerator, which enables customers and partners to build their own virtual assistants tailored to their brand and scenarios, has continued to evolve. Ignite was our first crucial milestone for virtual assistant and skills. Work has continued with regular updates to all elements of the overall solution.

We now have full support for six languages including Chinese for the virtual assistant and skills. The productivity skills (i.e., calendar, email, and tasks) have updated conversation flows, entity handling, new pre-built domain language models, and work with Microsoft Graph. This release also includes the first automotive capabilities enabling control of car features along with updates to skills enabling proactive experiences, Speech DDK integration, and experimental skills (restaurant booking and news).

Language Understanding December update

December was a very exciting month for Language Understanding at Microsoft. On December 4, 2018, we announced Docker container support for LUIS in public preview. Hosting the LUIS runtime in containers provides a great set of benefits, including:

◈ Control over data: Allow customers to use the service with complete control over their data. This is essential for customers that cannot send data to the cloud but need access to the technology. Support consistency in hybrid environments – across data, management, identity, and security.

◈ Control over model updates: Provide customers flexibility in versioning and updating of models deployed in their solutions.

◈ Portable architecture: Enable the creation of a portable application architecture that can be deployed in the cloud, on-premises, and the edge.

◈ High throughput/low latency: Provide customers the ability to scale for high-throughput, low-latency requirements by enabling Cognitive Services to run in Azure Kubernetes Service, physically close to their application logic and data.
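Once the runtime is hosted locally, an application queries the container instead of the cloud endpoint. The sketch below only builds such a query URL. The host, port, route, and query parameters are assumptions modeled on the v2.0 prediction route and should be checked against the container documentation for your version; `container_query_url` is a hypothetical helper.

```python
from urllib.parse import urlencode


def container_query_url(app_id, utterance, host="http://localhost:5000"):
    """Build a prediction URL for a locally hosted LUIS container.

    Assumption: the container listens on `host` and exposes a v2.0-style route.
    """
    query = urlencode({"q": utterance, "staging": "false", "verbose": "false"})
    return f"{host}/luis/v2.0/apps/{app_id}?{query}"
```

Because only the host changes between environments, the same application code can target a container on-premises, at the edge, or in a Kubernetes cluster.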

LUIS has expanded its service to seven new regions, completing worldwide availability in all major Azure regions, including UK, India, Canada, and Japan.

Among the other notable updates is the enhanced training experience, including a reduction in the time required to train an application. The team also released new pre-built entity extractors for people’s names and geographical locations in English and Chinese, and expanded the phone number, URL, and email entities across all languages.

QnA Maker updates

In December, the QnA Maker service released an improvement for its intelligent extraction capabilities. Along with accuracy improvements for existing supported sources, QnA Maker can now extract information from simple “Support” URLs. Read more about extraction and supported data sources in the documentation, “Data sources for QnA Maker content.” QnA Maker also rolled out an improved ranking and scoring algorithm for all English KBs.

The team also released SDKs for the service in .NET, Node.js, Go, and Ruby.

Web chat speech update

We now support the new Cognitive Services Speech to Text and Text to Speech services directly in Web Chat 4.2. This sample is a great place to learn about the new feature and start migrating your bot from Bing Speech to the new Speech Services.

We also added a few samples, including backchannel injection and minimize mode. Backchannel injection demonstrates how to add sideband data to outgoing activities. You can use this technique to send browser language and time zone information alongside messages sent by the user. The minimize mode sample shows how to load Web Chat on demand and overlay it on top of your existing web page.
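The actual Web Chat sample runs in the browser in JavaScript. The Python sketch below only illustrates the shape of the idea: extra fields are merged into an outgoing activity’s `channelData` before it is sent. The `inject_channel_data` helper and the `language` and `timeZone` field names are hypothetical.

```python
import copy


def inject_channel_data(activity, extra):
    """Return a copy of an outgoing activity with extra fields merged into channelData."""
    enriched = copy.deepcopy(activity)  # leave the caller's activity untouched
    channel_data = dict(enriched.get("channelData") or {})
    channel_data.update(extra)
    enriched["channelData"] = channel_data
    return enriched
```

On the bot side, the handler can read these fields from the incoming activity’s channel data to localize replies or interpret times in the user’s time zone.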