This blog covers the design, technology, and recommendations for setting up disaster recovery (DR) for an enterprise customer, with the aim of achieving best-in-class recovery point objective (RPO) and recovery time objective (RTO) for an SAP S/4HANA landscape.

Microsoft Azure provides a trusted path to enterprise-ready innovation with SAP solutions in the cloud. Mission critical applications such as SAP run reliably on Azure, which is an enterprise proven platform offering hyperscale, agility, and cost savings for running a customer’s SAP landscape.

System availability and disaster recovery are crucial for customers who run mission-critical SAP applications on Azure.

RTO and RPO are two key metrics that organizations use to develop a disaster recovery plan that can maintain business continuity after an unexpected event. Recovery point objective (RPO) is the amount of data at risk, expressed as a period of time: the window of recent data that may be lost when a disaster occurs. Recovery time objective (RTO) is the maximum tolerable time that a system can be down after a disaster occurs.
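As a small worked example of the two definitions, the sketch below computes the data-loss window and the downtime for a single incident and compares them with targets of 5 minutes and 4 hours. The timestamps and function names are hypothetical and exist only for illustration.

```python
from datetime import datetime, timedelta


def achieved_rpo(last_replicated: datetime, disaster: datetime) -> timedelta:
    """Data-loss window: time between the last recoverable data point and the disaster."""
    return disaster - last_replicated


def achieved_rto(disaster: datetime, service_restored: datetime) -> timedelta:
    """Downtime: time between the disaster and the moment the system is usable again."""
    return service_restored - disaster


# Hypothetical timestamps, for illustration only.
last_replicated = datetime(2024, 1, 10, 9, 57)
disaster = datetime(2024, 1, 10, 10, 0)
service_restored = datetime(2024, 1, 10, 13, 30)

rpo = achieved_rpo(last_replicated, disaster)
rto = achieved_rto(disaster, service_restored)
within_targets = rpo <= timedelta(minutes=5) and rto <= timedelta(hours=4)

print(f"Data at risk (RPO): {rpo}")   # 0:03:00
print(f"Downtime (RTO):     {rto}")   # 3:30:00
print(f"Within targets:     {within_targets}")
```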

The diagram below shows RPO and RTO on a timeline in a business as usual (BAU) scenario.

Orica is the world's largest provider of commercial explosives and innovative blasting systems to the mining, quarrying, oil and gas, and construction markets. They are also a leading supplier of sodium cyanide for gold extraction and a specialist provider of ground support services in mining and tunneling.

As part of Orica’s digital transformation journey, Cognizant has been chosen as a trusted technology advisor and managed cloud platform provider to build highly available, scalable, disaster proof IT platforms for SAP S/4HANA and other SAP applications in Microsoft Azure.

This blog describes how Cognizant took up the challenge of building a disaster recovery solution for Orica as part of the Digital Transformation Program, with SAP S/4HANA as the digital core. It covers the SAP on Azure architectural design considerations worked out by Cognizant and Orica over the last two years, which led to a reduction in RTO to 4 hours. This was achieved by deploying the latest technology features available on Azure, coupled with automation. Along with the reduction in RTO, RPO was reduced to less than 5 minutes through the use of database specific technologies such as SAP HANA system replication, together with Azure Site Recovery.

Design principles for disaster recovery systems

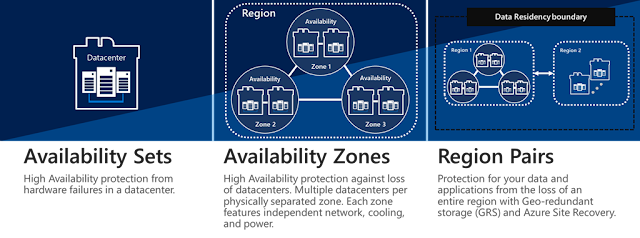

◈ Selection of DR Region based on SAP Certified VMs for SAP HANA – It is important to verify the availability of SAP Certified VM types in the DR Region.

◈ RPO and RTO Values – Businesses need to lay out clear expectations for RPO and RTO values, which greatly affect the Disaster Recovery architecture and the tools and automation required to implement it.

◈ Cost of Implementing DR, Maintenance and DR Drills

◈ Criticality of systems – It is possible to establish a trade-off between the cost of DR implementation and business requirements. While the most critical systems can use a state of the art DR architecture, medium and less critical systems may be able to tolerate higher RPO/RTO values.

◈ On Demand Resizing of DR instances – It is preferable to use small VMs for DR instances and upsize them during an active DR scenario. It is also possible to reserve the required VM capacity in the DR region so that there is no “waiting” time to upscale the VMs. Microsoft offers Reserved Instances (RI), with which one can reserve virtual machines in advance and save up to 80 percent. Depending on the required RTO value, a trade-off needs to be worked out between running smaller VMs and using Azure RI.

◈ Additional considerations for cloud infrastructure costs and the effort of setting up an environment for non-disruptive DR tests. A non-disruptive DR test is executed without failing over the actual productive systems to the DR systems, thereby avoiding any business downtime. This involves additional costs for setting up temporary infrastructure in a completely isolated VNet for the duration of the DR test.

◈ Certain components in the SAP systems architecture, such as the clustered network file system (NFS), are not recommended to be replicated using Azure Site Recovery. Additional tools with license costs, such as SUSE Geo-cluster or SIOS DataKeeper, are therefore needed for DR at the NFS layer.

◈ Selection of specific technology and tools – Azure offers Azure Site Recovery (ASR), which replicates virtual machines across regions. This technology is used for the non-database components or layers of the system, while database specific methods such as SAP HANA system replication (HSR) are used at the database layer to ensure consistency of the databases.
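The trade-off mentioned in the on-demand resizing principle above can be made concrete with simple arithmetic. The sketch below compares the monthly cost of keeping a small pay-as-you-go VM at the DR site with the cost of reserving a full-size VM. All rates and the discount are placeholder figures chosen for illustration, not Azure prices, and the function name is hypothetical.

```python
HOURS_PER_MONTH = 730  # average number of hours in a month


def monthly_cost(hourly_rate: float, discount: float = 0.0) -> float:
    """Monthly cost of one VM running continuously, after an optional discount."""
    return hourly_rate * (1.0 - discount) * HOURS_PER_MONTH


# Placeholder figures, NOT real Azure prices.
small_vm_rate = 1.00         # small DR VM, pay-as-you-go, per hour
full_vm_rate = 8.00          # full-size DR VM, list price per hour
reservation_discount = 0.40  # assumed reservation discount on the full-size VM

small_on_demand = monthly_cost(small_vm_rate)
full_reserved = monthly_cost(full_vm_rate, reservation_discount)
premium = full_reserved - small_on_demand

print(f"Small VM, resized during DR: {small_on_demand:.2f} per month")
print(f"Full-size VM, reserved:      {full_reserved:.2f} per month")
print(f"Premium for no resize wait:  {premium:.2f} per month")
```

The premium is what the business pays each month to remove the resize and capacity allocation wait from the RTO. Whether that is worthwhile depends on the agreed RTO for the system in question.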

Disaster recovery architecture for SAP systems running on SAP HANA Database

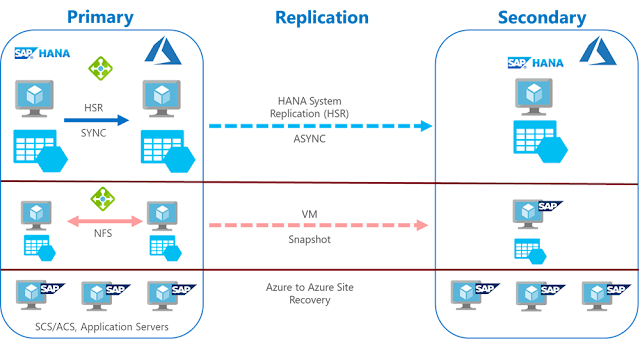

At a very high level, the diagram below depicts the architecture of SAP systems based on SAP HANA and shows which systems will be available in case of local or regional failures.

The diagram below gives next level details of SAP HANA systems components and corresponding technology used for achieving disaster recovery.

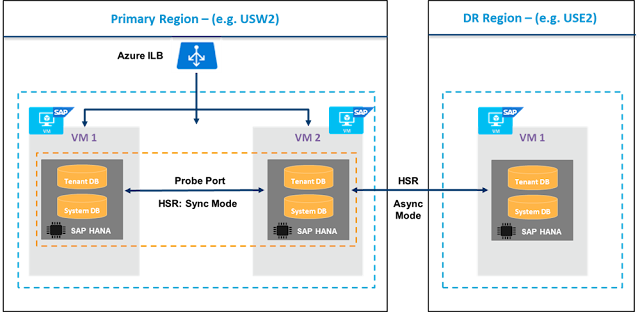

Database layer

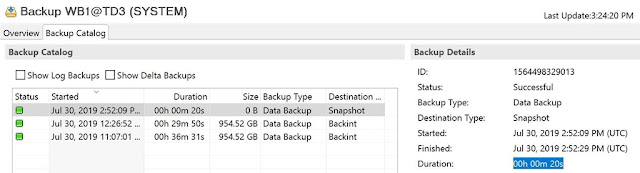

At the database layer, a database specific replication method such as SAP HANA system replication (HSR) is used. Using a database specific replication method allows better control over RPO values through replication specific configuration parameters and ensures consistency of the database at the DR site. Alternative methods of achieving disaster recovery at the database (DB) layer, such as backup, restore, and recovery, or storage based replication, are available; however, they result in higher RTO values.

RPO values for the SAP HANA database depend on factors including the replication mode (synchronous for high availability, asynchronous for DR replication), backup frequency, backup data retention policies, savepoints, and replication configuration parameters.

SAP Solution Manager can be used to monitor the replication status, such that an e-mail alert is triggered if the replication is impacted.
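To illustrate the kind of check such an alert could be based on, here is a minimal sketch that flags a service when its replication status is not active or when its log shipping lag exceeds the RPO target. It is not how SAP Solution Manager implements monitoring. The `ReplicationSample` record, its field names, and the sample values are hypothetical; in practice the values are assumed to come from a HANA monitoring source such as the `M_SERVICE_REPLICATION` view.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class ReplicationSample:
    """One hypothetical monitoring record per replicated HANA service."""
    service: str
    status: str                 # assumed labels, e.g. "ACTIVE" or "ERROR"
    last_log_time: datetime     # newest log position written on the primary
    shipped_log_time: datetime  # newest log position shipped to the DR site


def replication_alerts(samples, rpo_target=timedelta(minutes=5)):
    """Return one alert message per service whose replication puts the RPO at risk."""
    alerts = []
    for s in samples:
        lag = s.last_log_time - s.shipped_log_time
        if s.status != "ACTIVE":
            alerts.append(f"{s.service}: replication status is {s.status}")
        elif lag > rpo_target:
            alerts.append(f"{s.service}: log shipping lag {lag} exceeds {rpo_target}")
    return alerts


# Hypothetical sample data.
now = datetime(2024, 1, 10, 10, 0)
samples = [
    ReplicationSample("indexserver", "ACTIVE", now, now - timedelta(minutes=1)),
    ReplicationSample("xsengine", "ACTIVE", now, now - timedelta(minutes=9)),
    ReplicationSample("nameserver", "ERROR", now, now),
]

alerts = replication_alerts(samples)
for message in alerts:
    print(message)
```

The returned messages could then be handed to whatever notification channel is in use, for example the e-mail alerting described above.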

Even though multi-target replication is available as of SAP HANA 2.0 SP 3, revision 33, at the time of writing this article this scenario had not been tested in conjunction with a high availability cluster. With a successful implementation of multi-target replication, the DR maintenance process will become simpler and will not need manual intervention after failover scenarios at the primary site.

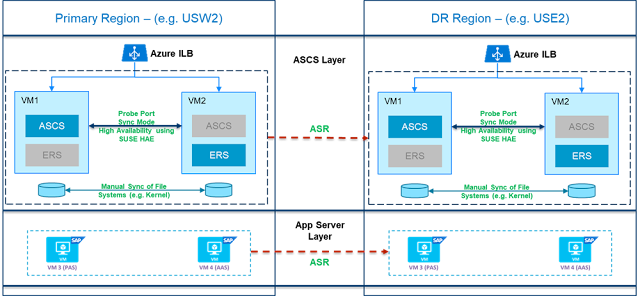

Application layer – (A)SCS, APP, iSCSI

Azure Site Recovery is used to replicate the non-database components of the SAP systems architecture, including (A)SCS, application servers, and Linux cluster fencing components such as the iSCSI servers (with the exception of the NFS layer, which is discussed below). Azure Site Recovery replicates workloads running on virtual machines (VMs) from a primary site to a secondary location at the storage layer. It does not require VMs to be running at the secondary location; they can be started during actual disaster scenarios or DR drills.

There are two options for setting up a Pacemaker cluster in Azure. You can either use a fencing agent, which takes care of restarting a failed node via the Azure APIs, or you can use a storage based death (SBD) device. The SBD device requires at least one additional virtual machine that acts as an iSCSI target server and provides an SBD device. These iSCSI target servers can, however, be shared with other Pacemaker clusters. The advantage of using an SBD device is a faster failover time.

The diagram below describes disaster recovery at the application layer. The (A)SCS, application servers, and iSCSI servers all use the same architecture to replicate data to the DR region using Azure Site Recovery.

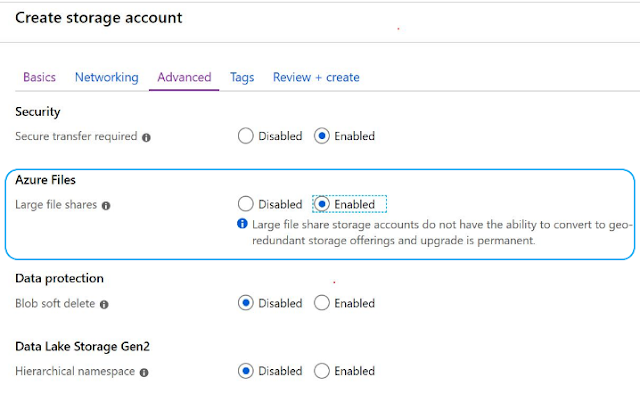

NFS layer – The NFS layer at the primary site uses a cluster with distributed replicated block device (DRBD) for high availability replication. We evaluated multiple technologies for implementing DR at the NFS layer. Because DRBD already provides high availability at the NFS layer through disk replication, DRBD and Site Recovery configurations are not compatible and Site Recovery replication is not supported. Solutions such as SUSE Geo-cluster, SIOS DataKeeper, or simple VM snapshot backup and restore are available for achieving DR at the NFS layer instead. Where DRBD is enabled, the cost-effective solution for NFS layer DR is simple backup and restore using VM snapshot backups.
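The per-layer choices described in this section can be summarized as a simple lookup table. The sketch below records them as plain data; the dictionary keys and the helper names are hypothetical labels for this illustration, not identifiers from any tool.

```python
# DR method per architecture layer, as described in this section.
DR_METHOD_BY_LAYER = {
    "SAP HANA database": "SAP HANA system replication (asynchronous)",
    "(A)SCS": "Azure Site Recovery",
    "Application servers": "Azure Site Recovery",
    "iSCSI target servers": "Azure Site Recovery",
    "NFS (DRBD cluster)": "VM snapshot backup and restore",
}


def dr_method(layer: str) -> str:
    """Look up the DR method for a layer, failing loudly on unknown layers."""
    try:
        return DR_METHOD_BY_LAYER[layer]
    except KeyError:
        raise ValueError(f"No DR method defined for layer: {layer!r}") from None


def layers_using(method_keyword: str) -> list:
    """List the layers whose DR method mentions the given keyword."""
    return [layer for layer, method in DR_METHOD_BY_LAYER.items()
            if method_keyword in method]


asr_layers = layers_using("Azure Site Recovery")
print("Replicated with Azure Site Recovery:", ", ".join(asr_layers))
print("NFS layer DR method:", dr_method("NFS (DRBD cluster)"))
```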

Steps for invoking DR or a DR drill

Microsoft Azure Site Recovery helps replicate data to the DR region faster. In a DR implementation where Site Recovery is not used or configured, it would take more than 24 hours to recover about five systems, so the RTO would be 24 hours or more. However, when Site Recovery is used at the application layer together with a database specific replication method at the DB layer, it is possible to reduce the RTO to well below four hours for the same number of systems. The diagram below shows a timeline view of the steps to activate disaster recovery with a four-hour RTO.

Steps for Invoking DR or a DR drill:

◈ DNS Changes for VMs to use new IP addresses

◈ Bring up iSCSI – single VM from ASR Replicated data

◈ Recover Databases and Resize the VMs to required capacity

◈ Manually provision NFS – Single VM using snapshot backups

◈ Build Application layer VMs from ASR Replicated data

◈ Perform cluster changes

◈ Bring up applications

◈ Validate Applications

◈ Release systems
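The steps above can be sketched as a runbook with a time budget, to check that the planned sequence fits within the four-hour RTO. The durations below are placeholder estimates invented for this illustration, not measurements from the project, and the sketch assumes the steps run strictly one after another.

```python
from datetime import timedelta

RTO_TARGET = timedelta(hours=4)

# (step, estimated duration in minutes). Durations are placeholders.
RUNBOOK = [
    ("DNS changes for VMs to use new IP addresses", 15),
    ("Bring up iSCSI VM from ASR replicated data", 20),
    ("Recover databases and resize the VMs", 60),
    ("Manually provision NFS VM using snapshot backups", 30),
    ("Build application layer VMs from ASR replicated data", 30),
    ("Perform cluster changes", 20),
    ("Bring up applications", 20),
    ("Validate applications", 30),
    ("Release systems", 10),
]


def timeline(runbook):
    """Yield (step, elapsed time at the end of the step) for sequential execution."""
    elapsed = timedelta()
    for step, minutes in runbook:
        elapsed += timedelta(minutes=minutes)
        yield step, elapsed


schedule = list(timeline(RUNBOOK))
total = schedule[-1][1]

for step, elapsed in schedule:
    print(f"T+{elapsed}  {step}")
print(f"Total {total}, within RTO target: {total <= RTO_TARGET}")
```

Rehearsing the runbook in DR drills is what turns placeholder estimates like these into measured durations.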

Recommendations on non-disruptive DR drills

Some businesses cannot afford downtime during DR drills. Non-disruptive DR drills are suggested where it is not possible to arrange downtime to perform a DR drill. A non-disruptive DR procedure can be achieved by creating an additional DR VNet, isolating it from the rest of the network, and carrying out the DR drill with the steps below.

As a prerequisite, build SAP HANA database servers in the isolated VNet and configure SAP HANA system replication.

1. Disconnect the ExpressRoute circuit to the DR region. Disconnecting ExpressRoute simulates the abrupt unavailability of systems in the primary region.

2. As a prerequisite, the backup domain controller must be active and replicating with the primary domain controller until the time of the ExpressRoute disconnection.

3. A DNS server needs to be configured in the isolated DR VNet (the additional DR VNet created for the non-disruptive DR drill) and kept in standby mode until the time of the ExpressRoute disconnection.

4. Establish a point-to-site VPN tunnel for administrators and key users for the DR test.

5. Manually update the NSGs so that the DR VNet is isolated from the entire network.

6. Bring up applications in the DR region using the DR enablement procedure.

7. Once the test is concluded, reconfigure the NSGs, ExpressRoute, and DR replication.
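Before bringing up applications, it can help to sanity-check that the NSG rules from the isolation step really cut the drill VNet off while still admitting the point-to-site VPN users. The sketch below is a deliberately simplified model of priority-ordered allow/deny rules, not the Azure API: real NSGs also consider direction, ports, protocols, and default rules. All address ranges, priorities, and names are hypothetical.

```python
from dataclasses import dataclass
from ipaddress import ip_network


@dataclass
class Rule:
    priority: int     # lower number is evaluated first
    source: str       # CIDR range
    destination: str  # CIDR range
    allow: bool


def is_allowed(rules, src_cidr: str, dst_cidr: str) -> bool:
    """First matching rule by priority decides; no match means deny in this model."""
    src, dst = ip_network(src_cidr), ip_network(dst_cidr)
    for rule in sorted(rules, key=lambda r: r.priority):
        if (src.subnet_of(ip_network(rule.source))
                and dst.subnet_of(ip_network(rule.destination))):
            return rule.allow
    return False


# Hypothetical address ranges.
DRILL_VNET = "10.50.0.0/16"     # isolated DR VNet used for the drill
PRIMARY_VNET = "10.10.0.0/16"   # production network in the primary region
VPN_POOL = "172.16.100.0/24"    # point-to-site VPN clients (admins, key users)
ANY = "0.0.0.0/0"

rules = [
    Rule(100, VPN_POOL, DRILL_VNET, allow=True),     # drill users may connect
    Rule(110, DRILL_VNET, DRILL_VNET, allow=True),   # traffic inside the drill VNet
    Rule(4000, ANY, DRILL_VNET, allow=False),        # nothing else gets in
    Rule(4010, DRILL_VNET, ANY, allow=False),        # nothing gets out
]

isolated = (not is_allowed(rules, PRIMARY_VNET, DRILL_VNET)
            and not is_allowed(rules, DRILL_VNET, PRIMARY_VNET))
vpn_ok = is_allowed(rules, VPN_POOL, DRILL_VNET)
print(f"Drill VNet isolated from primary: {isolated}, VPN access: {vpn_ok}")
```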

Involvement of relevant infrastructure and SAP subject matter experts is highly recommended during DR tests.

Note that the non-disruptive DR procedure needs to be executed with extreme caution, after prior validation and testing with non-production systems. Database VM capacity in the DR region should be decided as a trade-off between reserving full capacity and relying on Microsoft’s timeline to allocate the required capacity when resizing the database VMs.