For the past few years, Microsoft and Red Hat have co-developed hybrid solutions enabling customers to innovate both on-premises and in the cloud. In May 2019, we announced the general availability of Azure Red Hat OpenShift, allowing enterprises to run critical container-based production workloads via an OpenShift managed service on Azure, jointly operated by Microsoft and Red Hat.

Microsoft and Red Hat are now working together to further extend Azure services to hybrid environments across on-premises and multi-cloud with upcoming support of Azure Arc for OpenShift and Red Hat Enterprise Linux (RHEL), so our customers will be able to more effectively develop, deploy, and manage cloud-native applications anywhere. With Azure Arc, customers will have a more consistent management and operational experience across their Microsoft hybrid cloud including Red Hat OpenShift and RHEL.

What’s new for Red Hat Customers with Azure Arc

As part of the Azure Arc preview, we’re expanding Azure Arc’s Linux and Kubernetes management capabilities to add support specifically for Red Hat customers, enabling you to:

Organize, secure, and govern your Red Hat ecosystem across environments

Many of our customers have workloads sprawling across clouds, datacenters, and edge locations. Azure Arc enables customers to centrally manage, secure, and control RHEL servers and OpenShift clusters from Azure at scale. Wherever the workloads are running, customers can view and search their inventory from the Azure Portal. They can apply policies and manage compliance for connected servers and clusters from Azure Policy, for one cluster or many at a time. Customers can enhance their security posture through built-in Azure security policies and role-based access control (RBAC) that work the same way wherever the managed infrastructure runs. As Azure Arc progresses towards general availability, more policies will be enabled, such as reporting on expiring certificates, checking password complexity, managing SSH keys, and enforcing disk encryption.
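To make the idea of one policy set evaluated across many environments more concrete, here is a minimal Python sketch. It is not the Azure Policy API or how Azure Arc is implemented. The `Resource` fields, the policy names, and the sample inventory are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable, Dict, List


@dataclass
class Resource:
    """Hypothetical inventory record for a connected server or cluster."""
    name: str
    kind: str            # e.g. "rhel-server" or "openshift-cluster"
    location: str        # e.g. "on-premises", "edge", "other-cloud"
    disk_encrypted: bool
    cert_expires: date


# A policy is any function that returns True when a resource is compliant.
Policy = Callable[[Resource], bool]


def disk_encryption_required(resource: Resource) -> bool:
    return resource.disk_encrypted


def cert_valid_for(days: int, today: date) -> Policy:
    """Build a policy that flags certificates expiring within `days`."""
    def check(resource: Resource) -> bool:
        return (resource.cert_expires - today).days >= days
    return check


def evaluate(resources: List[Resource],
             policies: Dict[str, Policy]) -> Dict[str, List[str]]:
    """Map each policy name to the names of non-compliant resources."""
    return {
        policy_name: [r.name for r in resources if not check(r)]
        for policy_name, check in policies.items()
    }


if __name__ == "__main__":
    today = date(2020, 4, 28)
    inventory = [
        Resource("rhel-01", "rhel-server", "on-premises", True, date(2020, 5, 10)),
        Resource("ocp-edge", "openshift-cluster", "edge", False, date(2021, 1, 1)),
    ]
    policies = {
        "disk-encryption": disk_encryption_required,
        "cert-expiry-30d": cert_valid_for(30, today),
    }
    print(evaluate(inventory, policies))
```

The same `policies` dictionary is applied to every resource regardless of its `location`, which is the property the paragraph above describes: the rules are defined once and the compliance report covers everything that is connected.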

In addition, with SQL Server 2019 for RHEL 8 now quicker to deploy via new images available in the Azure Marketplace, we’re expanding Azure Arc to manage SQL Server on RHEL, providing integrated database and server governance via unified Azure Policies.

Finally, Azure Arc makes it easy to use Azure Management services such as Azure Monitor and Azure Security Center when dealing with workloads and infrastructure running outside of Azure.

Manage OpenShift clusters and applications at scale

Manage container-based applications running in the Azure Red Hat OpenShift service, as well as OpenShift clusters running on IaaS, virtual machines (VMs), or on-premises bare metal. Applications defined in GitHub repositories can be automatically deployed via Azure Policy and Azure Arc to any repo-linked OpenShift cluster, and policies can be used to keep them up to date. New application versions can be distributed globally to all Azure Arc-managed OpenShift clusters using GitHub pull requests, with full DevOps CI/CD pipeline integrations for logging and quality testing. Additionally, if an application is modified in an unauthorized way, the change is reverted, so your OpenShift environment remains stable and compliant.
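The "change is reverted" behavior follows the general GitOps pattern: the repository holds the desired state, and the cluster is repeatedly compared against it and corrected. The Python sketch below illustrates that pattern only. It is not Azure Arc's implementation, and the state model (application name mapped to a version string) and function names are assumptions made for the example.

```python
from typing import Dict, Optional, Tuple

# Hypothetical model: a cluster's state maps each application name to the
# version that is (or should be) running.
State = Dict[str, str]


def drift(desired: State, actual: State) -> Dict[str, Tuple[Optional[str], Optional[str]]]:
    """Return every difference as app -> (desired version, actual version)."""
    apps = set(desired) | set(actual)
    return {
        app: (desired.get(app), actual.get(app))
        for app in sorted(apps)
        if desired.get(app) != actual.get(app)
    }


def reconcile(desired: State, actual: State) -> State:
    """Return a corrected copy of `actual` that matches `desired`.

    Missing apps are deployed, drifted apps are reverted to the version
    declared in Git, and apps not declared in Git are removed.
    """
    corrected = dict(actual)
    for app, version in desired.items():
        if corrected.get(app) != version:
            corrected[app] = version        # deploy, or revert a drifted app
    for app in list(corrected):
        if app not in desired:
            del corrected[app]              # prune undeclared apps
    return corrected


if __name__ == "__main__":
    git_state = {"storefront": "v2", "payments": "v5"}
    cluster_state = {"storefront": "v2", "payments": "v5-hotfix", "debug-shell": "v1"}
    print(drift(git_state, cluster_state))
    print(reconcile(git_state, cluster_state))
```

In this model, merging a pull request changes `git_state`, and the next reconciliation rolls the new version out to every cluster that tracks the repository. An out-of-band edit changes only `cluster_state`, so the next reconciliation undoes it.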

Run Azure Data Services on OpenShift and anywhere else

Azure Arc enables you to run Azure data services on OpenShift on-premises, at the edge, and in multi-cloud environments, whether on a self-deployed cluster or a managed container service like Azure Red Hat OpenShift. With Azure Arc support for Azure SQL Managed Instance on OpenShift, you’ll know your container-based data infrastructure is always current and up to date. Microsoft SQL Server Big Data Clusters (BDC) support for OpenShift provides a new container-based deployment pattern for big data storage and analytics, allowing you to elastically scale your data with your dynamic OpenShift-based application anywhere it runs.

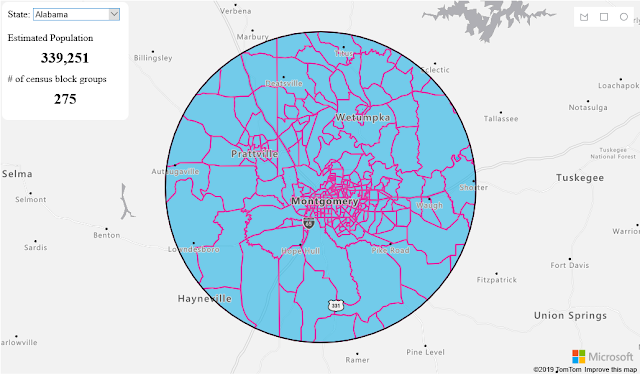

Managing multiple configurations for an on-premises OpenShift deployment from Azure Arc.

Azure SQL Managed Instances within Azure Arc.

If you’d like to learn more about how Azure is working with Red Hat to make innovation easier for customers in hybrid cloud environments, join us for a fireside chat between Scott Guthrie, EVP of Cloud and AI at Microsoft, and Paul Cormier, president and CEO of Red Hat, including a demo of Azure Arc for Red Hat today at the Red Hat Summit 2020 Virtual Experience.

Private hybrid clusters and OpenShift 4 added to Azure Red Hat OpenShift

Rounding out our hybrid offerings for Red Hat customers, today we’re announcing the general availability of Azure Red Hat OpenShift on OpenShift 4.

This release brings key innovations from Red Hat OpenShift 4 to Azure Red Hat OpenShift. Additionally, we’re enabling features to support hybrid and enterprise customer scenarios, such as:

◉ Private API and ingress endpoints: Customers can now choose between public and private cluster management (API) and ingress endpoints. With private endpoints and Azure ExpressRoute support, we’re enabling private hybrid clusters, allowing our mutual customers to extend their on-premises solutions to Azure.

◉ Industry compliance certifications: To help customers meet their compliance obligations across regulated industries and markets worldwide, Azure Red Hat OpenShift is now PCI DSS, HITRUST, and FedRAMP certified. Azure maintains the largest compliance portfolio in the industry, both in total number of offerings and in number of customer-facing services in assessment scope.

◉ Multi-Availability Zone clusters: To ensure the highest resiliency, cluster components are now deployed across three Azure Availability Zones in supported Azure regions to maintain high availability for the most demanding mission-critical applications and data. Azure Red Hat OpenShift has a Service Level Agreement (SLA) of 99.9 percent.

◉ Cluster-admin support: We’ve enabled the cluster-admin role on Azure Red Hat OpenShift clusters, enabling full cluster customization capabilities, such as running privileged containers and installing Custom Resource Definitions (CRDs).
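For a sense of scale, the 99.9 percent SLA mentioned in the list above can be turned into a downtime budget with simple arithmetic. The sketch below assumes the percentage applies to total elapsed time and uses a 30-day month and a 365-day year. The actual SLA terms define how availability is measured, so treat these figures as rough estimates.

```python
def allowed_downtime_minutes(sla_percent: float, period_days: float) -> float:
    """Maximum downtime, in minutes, that still meets the SLA over the period."""
    total_minutes = period_days * 24 * 60
    return total_minutes * (1 - sla_percent / 100)


if __name__ == "__main__":
    per_month = allowed_downtime_minutes(99.9, 30)
    per_year = allowed_downtime_minutes(99.9, 365)
    print(f"per 30-day month: {per_month:.1f} minutes")
    print(f"per 365-day year: {per_year / 60:.2f} hours")
```

Under those assumptions, 99.9 percent allows roughly 43 minutes of downtime in a 30-day month, or a little under nine hours over a year.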