Unravel on HDInsight enables developers and IT Admins to manage performance, auto scaling & cost optimization better than ever.

We are pleased to announce Unravel on the Azure HDInsight Application Platform. Azure HDInsight is a fully managed, open-source big data analytics service for enterprises. You can use popular open-source frameworks (Hadoop, Spark, LLAP, Kafka, HBase, etc.) to cover a broad range of scenarios such as ETL, data warehousing, machine learning, IoT, and more. Unravel provides comprehensive application performance management (APM) for these scenarios. It helps customers analyze, optimize, and troubleshoot application performance issues and meet SLAs in a seamless, easy-to-use manner. Some customers report up to 200 percent more jobs at 50 percent lower cost using Unravel’s tuning capability on HDInsight.

How complex is guaranteeing an SLA on a Big Data solution?

The inherent complexity of big data systems, the disparate set of monitoring tools, and a lack of expertise in optimizing these open source frameworks create significant challenges for end users who are responsible for guaranteeing SLAs. Today, users have to monitor their applications with Ambari, which provides only the infrastructure metrics needed to administer cluster health, performance, and utilization. Big Data solutions use a variety of open source frameworks, and monitoring applications running across all of them is a daunting task. Users have to troubleshoot issues manually by analyzing logs from YARN, Hive, Tez, LLAP, Pig, Spark, Kafka, etc. To get good performance, they may have to change Spark executor settings, YARN queues, Kafka topic configuration, HBase region servers, storage throttling, compute sizing, and more. Unravelling this complexity is both an art and a science.

Monitoring Big Data applications now made easy with Unravel

Unravel on HDInsight makes intelligent application and operations management for Big Data a breeze. Its Application Performance Management correlates full-stack performance and provides automated insights and recommendations. Users can now analyze, troubleshoot, and optimize performance with ease. Here are the key value propositions of Unravel on HDInsight:

Proactive alerting and automatic actions

◈ Proactive alerts on applications missing SLAs, violating usage policies or affecting other applications running on the cluster.

◈ Automatic actions to resolve the above issues using dynamic thresholds and company-defined policies, such as killing bad applications or redirecting apps based on priority levels.
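To make the idea of a dynamic threshold combined with a company-defined policy more concrete, here is a minimal Python sketch. It is not Unravel's implementation or API. The function names, the `elapsed_secs` and `priority` fields, the "mean plus k standard deviations" rule, and the "only kill low-priority apps" policy are all assumptions chosen for illustration.

```python
from statistics import mean, stdev


def dynamic_threshold(history_secs, k=3.0):
    """Return mean + k * sample standard deviation of past run durations.

    Illustrative rule only; a real system may use something quite different.
    """
    if len(history_secs) < 2:
        raise ValueError("need at least two past runs to estimate a threshold")
    return mean(history_secs) + k * stdev(history_secs)


def choose_action(app, history_secs, k=3.0):
    """Map a running app to 'ok', 'alert', or 'kill' under a hypothetical policy."""
    limit = dynamic_threshold(history_secs, k)
    if app["elapsed_secs"] <= limit:
        return "ok"
    # Hypothetical company policy: only low-priority apps are killed
    # automatically; anything else just raises an alert.
    return "kill" if app["priority"] == "low" else "alert"


if __name__ == "__main__":
    history = [300, 320, 310, 290, 305]  # durations of earlier runs, in seconds
    for app in (
        {"elapsed_secs": 315, "priority": "low"},
        {"elapsed_secs": 900, "priority": "low"},
        {"elapsed_secs": 900, "priority": "high"},
    ):
        print(app, "->", choose_action(app, history))
```

Because the threshold is derived from each application's own history, a job that normally runs for five minutes and one that normally runs for five hours are judged against different limits, which is the point of a dynamic rather than a fixed threshold.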

Analyze app performance

◈ Intuitive, end-to-end view of application performance with drill-down capabilities into bottlenecks, problem areas and errors.

◈ Correlated insights into all factors affecting app performance such as resource allocation, container utilization, poor configuration settings, task execution pattern, data layout, resource contention and more.

◈ Rapid detection of performance and cost issues caused by applications.

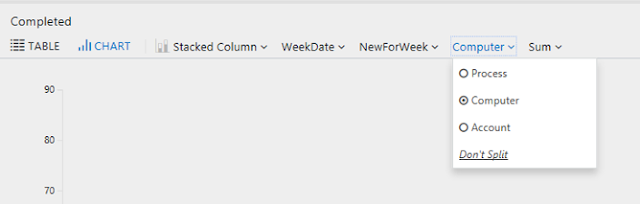

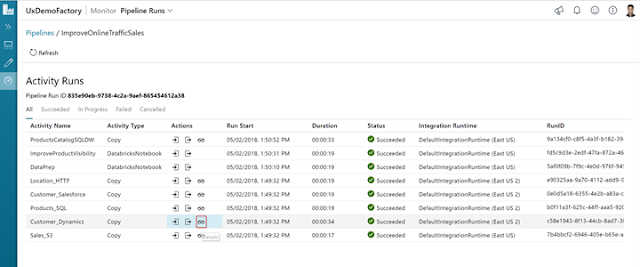

The following example shows how Unravel diagnosed poor resource usage and high cost caused by a Hive on Tez application. Tuning the application using the recommendations provided by Unravel reduced the cost of running it by a factor of 10.

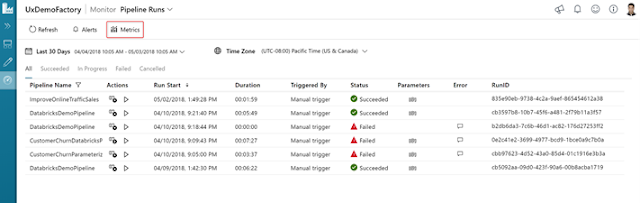

Here is an example of cluster utilization after using Unravel. Unravel enables you to utilize resources efficiently and auto scale the cluster based on SLA needs, which results in cost savings.

AI-driven intelligent recommendation engine

◈ Recommend optimal values for the fastest execution and/or the least resource utilization, including data parallelism, optimal container size, number of tasks, etc.

The example below shows how Unravel’s AI-driven engine provides actionable recommendations for optimal performance of a Hive query.

◈ Identify and automatically fix issues related to poor execution, skew, expensive joins, too many mappers/reducers, caching, etc.
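As a rough illustration of what detecting skew can look like, the Python sketch below compares the slowest task in a stage with the median task. This is a common rule of thumb, not Unravel's detection logic. The function names and the default ratio limit of 5 are assumptions.

```python
from statistics import median


def skew_ratio(task_secs):
    """Slowest task duration divided by the median task duration."""
    if not task_secs:
        raise ValueError("no task durations given")
    mid = median(task_secs)
    if mid <= 0:
        raise ValueError("median duration must be positive")
    return max(task_secs) / mid


def is_skewed(task_secs, ratio_limit=5.0):
    """Flag a stage when its slowest task far exceeds the typical task.

    ratio_limit is an arbitrary illustrative cutoff, not a recommended value.
    """
    return skew_ratio(task_secs) > ratio_limit


if __name__ == "__main__":
    balanced = [10, 11, 9, 10, 12]   # seconds per task, evenly spread
    skewed = [10, 11, 9, 10, 120]    # one straggler holding up the stage
    print("balanced:", skew_ratio(balanced), is_skewed(balanced))
    print("skewed:", skew_ratio(skewed), is_skewed(skewed))
```

A high ratio usually means one partition holds far more data than the others, so the whole stage waits on a single task while the rest of the cluster sits idle.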

Following is an example of Unravel automatically detecting lag in a real-time IoT application using Spark Streaming & Kafka and recommending a solution.
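As background on what lag means here: for a Kafka consumer, lag on a partition is conventionally the partition's latest (log-end) offset minus the consumer group's committed offset. The Python sketch below only illustrates that arithmetic. It is not Unravel's detection logic, it does not talk to a real cluster, and the function names, the sample offsets, and the choice to treat a missing committed offset as 0 are assumptions.

```python
def partition_lag(end_offsets, committed_offsets):
    """Per-partition lag = log-end offset - committed offset, floored at 0.

    Partitions with no committed offset are treated as committed at 0,
    which is an assumption made for this sketch.
    """
    return {
        partition: max(end - committed_offsets.get(partition, 0), 0)
        for partition, end in end_offsets.items()
    }


def lag_is_growing(total_lag_samples):
    """True if total lag rose strictly between every pair of consecutive samples."""
    pairs = zip(total_lag_samples, total_lag_samples[1:])
    return len(total_lag_samples) >= 2 and all(b > a for a, b in pairs)


if __name__ == "__main__":
    end_offsets = {0: 1500, 1: 1480}   # latest offset written per partition
    committed = {0: 1200, 1: 1475}     # last offset the consumer group committed
    lag = partition_lag(end_offsets, committed)
    print("per-partition lag:", lag, "total:", sum(lag.values()))
    print("growing:", lag_is_growing([120, 180, 305]))
```

Lag that keeps rising across samples suggests the streaming job is consuming more slowly than producers are writing, which is the kind of condition a recommendation (for example, more parallelism) would aim to address.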

Get started with Unravel on HDInsight

Customers can install Unravel on HDInsight with a single click in the Azure portal. Unravel provides a live view into the behavior of big data applications that use open source frameworks such as Hadoop, Hive, Spark, Kafka, LLAP, and more on Azure HDInsight.

After installing, you can launch the Unravel application from the Applications blade of the cluster as shown below.