Drilling for oil and gas is one of the most dangerous jobs on Earth. Workers are exposed to risks ranging from small equipment malfunctions to entire offshore rigs catching fire. Fortunately, applying deep learning to predictive asset maintenance can help prevent both natural and human-made catastrophes.

We have more information than ever on our equipment thanks to sensors and IoT devices, but we are still working on ways to process the data so it is valuable for preventing these catastrophic events. That’s where deep learning comes in. Data from multiple sources can be used to train a predictive model that helps oil and gas companies predict imminent disasters, enabling them to follow a proactive approach.

Using the PyTorch deep learning framework on Microsoft Azure, Accenture helped a major oil and gas company implement such a predictive asset maintenance solution. This solution will go a long way in protecting their staff and the environment.

What is predictive asset maintenance?

Predictive asset maintenance is a core element of the digital transformation of chemical plants. It is enabled by an abundance of cost-effective sensors, increased data processing, automation capabilities, and advances in predictive analytics. It involves converting information from both real-time and historical data into simple, accessible, and actionable insights. The goal is to detect and eliminate defects early, before they lead to malfunction. For example, by detecting an early defect in a seal that connects the pipes, we can prevent a potential failure that could result in a catastrophic collapse of the whole gas turbine.

Under the hood, predictive asset maintenance combines condition-based monitoring technologies, statistical process control, and equipment performance analysis to enable data from disparate sources across the plant to be visualized clearly and intuitively. This allows operations and equipment to be monitored more closely, processes to be optimized and better controlled, and energy management to be improved.

It is worth noting that the predictive analytics at the heart of this process do not tell the plant operators what will happen in the future with complete certainty. Instead, they forecast what is likely to happen in the future with an acceptable level of reliability. They can also provide “what-if” scenarios and an assessment of risks and opportunities.

Figure 1 – Asset maintenance maturity matrix (Source: Accenture)

The challenge with oil and gas

Event prediction is one of the key elements in predictive asset maintenance. For most prediction problems there are enough examples of each pattern to create a model to identify them. Unfortunately, in certain industries like oil and gas where everything is geared towards avoiding failure, the sought-after examples of failure patterns are rare. This means that most standard modelling approaches either perform no better than experienced humans or fail to work at all.

Accenture’s solution with PyTorch and Azure

Although only a small number of failure examples exist, there is a wealth of time series and inspection data that can be leveraged.

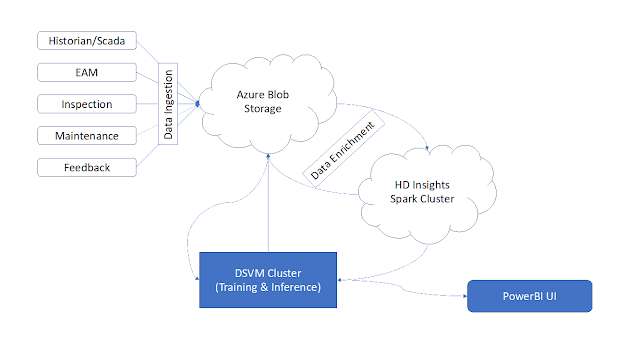

Figure 2 – Approach for Predictive Maintenance (Source: Accenture)

After the data was prepared in stage one, a two-phase deep learning solution was built with PyTorch in stage two. In phase one, a recurrent neural network (RNN) with a long short-term memory (LSTM) architecture was trained. The neural network architecture used in the solution was inspired by Koprinkova-Hristova et al. (2011) and Aydin and Guldamlasioglu (2017). This RNN time series model forecasts important variables, such as the temperature of an important seal. In phase two, these forecasts are fed into a classifier (a random forest) to identify whether a variable is outside its safe range. If so, the algorithm produces a ranking of potential causes that experts can examine and address. This effectively enables experts to address the root causes of potential disasters before they occur.
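To make the forecasting phase more concrete, here is a minimal sketch of an LSTM model that predicts the next value of a single sensor signal from a sliding window of past readings. It is not Accenture's implementation: the class name `SensorForecaster`, the helper `make_windows`, the window length, the hidden size, and the synthetic sine-wave "temperature" signal are all assumptions chosen for illustration. It is also written against a recent PyTorch release rather than the 0.3 version used in the project. The random forest stage is not shown.

```python
import torch
from torch import nn


class SensorForecaster(nn.Module):
    """Hypothetical LSTM forecaster: window of readings -> next value."""

    def __init__(self, n_features: int = 1, hidden_size: int = 32):
        super().__init__()
        self.lstm = nn.LSTM(n_features, hidden_size, batch_first=True)
        self.head = nn.Linear(hidden_size, 1)

    def forward(self, window: torch.Tensor) -> torch.Tensor:
        # window has shape (batch, time, n_features)
        output, _ = self.lstm(window)
        # Use the LSTM output at the last time step to predict the next value.
        return self.head(output[:, -1, :]).squeeze(-1)


def make_windows(series: torch.Tensor, length: int):
    """Slice a 1-D series into (input window, next value) training pairs."""
    inputs = torch.stack(
        [series[i:i + length] for i in range(len(series) - length)]
    )
    targets = series[length:]
    return inputs.unsqueeze(-1), targets


torch.manual_seed(0)
# Synthetic stand-in for a seal temperature signal (assumption, not real data).
series = torch.sin(torch.linspace(0, 20, 200))
inputs, targets = make_windows(series, length=24)

model = SensorForecaster()
optimizer = torch.optim.Adam(model.parameters(), lr=0.01)
loss_fn = nn.MSELoss()

losses = []
for epoch in range(30):
    optimizer.zero_grad()
    loss = loss_fn(model(inputs), targets)
    loss.backward()
    optimizer.step()
    losses.append(loss.item())

# One-step-ahead forecast for the most recent window.
with torch.no_grad():
    forecast = model(inputs[-1:])
```

In a pipeline like the one described above, forecasts such as `forecast` would then be passed to the downstream classifier, which decides whether the variable is leaving its safe range.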

The following is a diagram of the system that was used for training and execution of the solution:

Figure 3 - System Architecture

The architecture above was chosen to meet the customer's requirement of maximum flexibility in modeling, training, and the execution of complex machine learning workflows on Microsoft Azure. At the time of implementation, the services that fit these requirements were HDInsight and Data Science Virtual Machines (DSVM). If the project were implemented today, Azure Machine Learning service would be used for training and inferencing, with HDInsight or Azure Databricks for data processing.

PyTorch was chosen for its extreme flexibility in designing computational execution graphs: the model is not bound to a static computation graph, as it is in some other deep learning frameworks. Another important benefit of PyTorch is that standard Python control flow can be used, so the model can be different for every sample. For example, tree-shaped RNNs can be created without much effort. PyTorch also enables the use of Python debugging tools, so programs can be stopped at any point for inspection of variables, gradients, and more. This flexibility was very beneficial during training and tuning cycles.
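As a small illustration of what dynamic graphs with standard Python control flow look like, the sketch below uses a plain `while` loop inside `forward`, so the number of times a layer is applied depends on the input itself. The model `AdaptiveDepthNet` and its stopping rule are hypothetical and chosen only to show the mechanism. They are not taken from the project.

```python
import torch
from torch import nn


class AdaptiveDepthNet(nn.Module):
    """Hypothetical model whose computation graph depends on each input."""

    def __init__(self, size: int = 8, max_steps: int = 5):
        super().__init__()
        self.step = nn.Linear(size, size)
        self.max_steps = max_steps

    def forward(self, x: torch.Tensor):
        steps = 0
        # Ordinary Python loop and condition: the graph is built as the code
        # runs, so the number of layer applications can differ per sample.
        # A debugger breakpoint could be placed here to inspect x at any step.
        while steps < self.max_steps and x.norm() > 1.0:
            x = 0.5 * torch.tanh(self.step(x))
            steps += 1
        return x, steps


torch.manual_seed(0)
model = AdaptiveDepthNet()

# A zero input already satisfies the stopping rule, so no layer is applied.
small_out, small_steps = model(torch.zeros(8))

# A large input triggers at least one pass through the shared layer.
large_out, large_steps = model(torch.full((8,), 10.0))

# Gradients flow through however many steps were actually executed.
large_out.sum().backward()
```

Because the graph is recorded during execution, `backward()` works without the loop having to be declared ahead of time.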

The optimized PyTorch solution trained over 20 percent faster than other deep learning frameworks and ran inference 12 percent faster. These improvements were crucial in the time-critical environment the team was working in. Note that the version tested was PyTorch 0.3.

Overview of benefits of using PyTorch in this project:

◈ Training time: Average training time was reduced by 22 percent using PyTorch on the outlined Azure architecture.

◈ Debugging/bug fixing: The dynamic computational execution graph in combination with Python standard features reduced the overall development time by 10 percent.

◈ Visualization: The direct integration into Power BI enabled high end-user acceptance from day one.

◈ Experience using distributed training: The dynamic computational execution graph in combination with flow control allowed us to create a simple distributed training model and gain significant improvements in overall training time.

How did Accenture operationalize the final model?

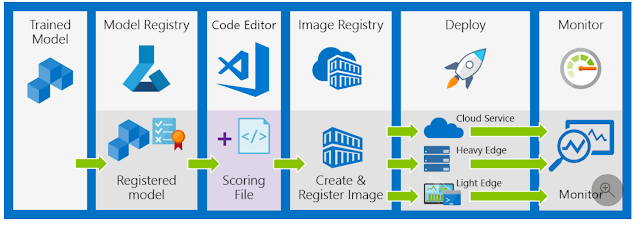

Scalability and operationalization were key design considerations from day one of the project, as the customer wanted to scale out the prototype to several other assets across the fleet. As a result, all components within the system architecture were chosen with those two criteria in mind. In addition, the customer wanted the ability to add more data sources using Azure Data Factory. Azure Machine Learning service and its model management capability were used to operationalize the final model. The following diagram illustrates the deployment workflow used.

Figure 4 – Deployment workflow

The deployment model was also integrated into a Continuous Integration/Continuous Delivery (CI/CD) workflow as depicted below.

PyTorch on Azure: Better together

The combination of Azure AI offerings with the capabilities of PyTorch proved to be a very efficient way to train and rapidly iterate on the deep learning architectures used for the project. These choices yielded a significant reduction in training time and increased productivity for data scientists.

Azure is committed to bringing enterprise-grade AI advances to developers using any language, any framework, and any development tool. Customers can easily integrate Azure AI offerings into any part of their machine learning lifecycles to productionize their projects at scale, without getting locked into any one tool or platform.
