This marks an important milestone in our journey to redefine Security Information and Event Management (SIEM) for the cloud era. With Azure Sentinel, enterprises worldwide can now keep pace with the exponential growth in security data, improve security outcomes without adding analyst resources, and reduce hardware and operational costs.

With the help of customers and partners, including feedback from over 12,000 trials during the preview, we have designed Azure Sentinel to bring together the power of Azure and AI to enable Security Operations Centers to achieve more. There are lots of new capabilities coming online this week. I’ll walk you through several of them here.

Collect and analyze a nearly limitless volume of security data

With Azure Sentinel, we are on a mission to improve security for the whole enterprise. Many Microsoft and non-Microsoft data sources are built right in and can be enabled in a single click. New connectors for Microsoft services like Cloud App Security and Information Protection join a growing list of third-party connectors to make it easier than ever to ingest and analyze data from across your digital estate.

Workbooks offer rich visualization options for gaining insights into your data. Use or modify an existing workbook or create your own.

Apply analytics, including Machine Learning, to detect threats

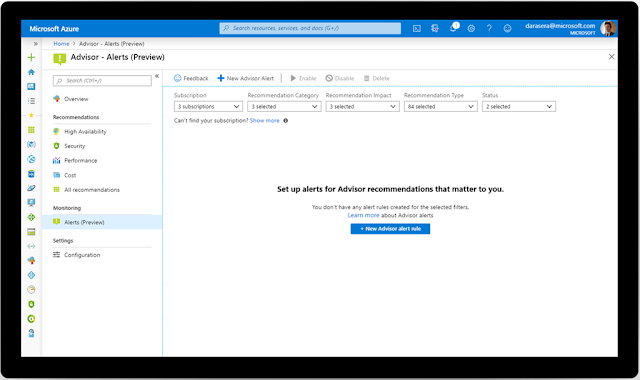

You can now choose from more than 100 built-in alert rules or use the new alert wizard to create your own. Alerts can be triggered by a single event, by a threshold, by correlating different datasets (e.g., events that match threat indicators), or by built-in machine learning algorithms.
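To make these trigger types concrete, here is a minimal Python sketch of two of them: a threshold rule and a correlation against threat indicators. It is a toy illustration, not how Azure Sentinel evaluates rules; the event fields (`user`, `ip`, `action`), the function names, and the sample data are all assumptions made for this example.

```python
from collections import Counter

# Hypothetical sample events; the field names are assumptions for illustration.
EVENTS = [
    {"user": "alice", "ip": "203.0.113.7", "action": "login_failed"},
    {"user": "alice", "ip": "203.0.113.7", "action": "login_failed"},
    {"user": "alice", "ip": "203.0.113.7", "action": "login_failed"},
    {"user": "bob", "ip": "198.51.100.23", "action": "login_ok"},
]

# Hypothetical threat indicators (IP addresses from a threat intelligence feed).
INDICATORS = {"198.51.100.23"}


def threshold_alerts(events, action, limit):
    """Return the users whose count of `action` events reaches `limit`."""
    counts = Counter(e["user"] for e in events if e["action"] == action)
    return sorted(user for user, n in counts.items() if n >= limit)


def indicator_matches(events, indicators):
    """Return the events whose source IP appears in the indicator set."""
    return [e for e in events if e["ip"] in indicators]


if __name__ == "__main__":
    print(threshold_alerts(EVENTS, "login_failed", limit=3))
    print(indicator_matches(EVENTS, INDICATORS))
```

The threshold rule only counts events per user, while the indicator rule joins two datasets, which is why the second kind of rule needs a threat intelligence source in addition to the logs.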

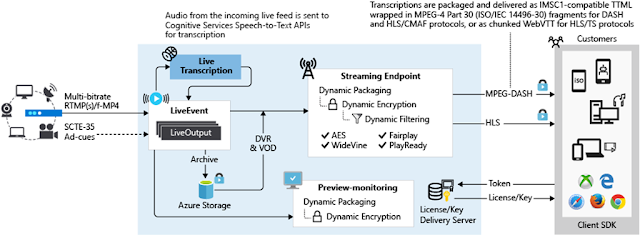

We’re previewing two new Machine Learning approaches that offer customers the benefits of AI without the complexity. First, we apply proven off-the-shelf Machine Learning models for identifying suspicious logins across Microsoft identity services to discover malicious SSH accesses. By using transfer learning from existing Machine Learning models, Azure Sentinel can accurately detect anomalies from a single dataset. Second, we use a Machine Learning technique called fusion to connect data from multiple sources, like Azure AD anomalous logins and suspicious Office 365 activities, to detect 35 different threats that span different points on the kill chain.
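The idea of connecting signals from multiple sources can be illustrated with a small Python sketch. This is not the fusion technique itself, which this post does not describe in detail; it is a simple time-window join, swapped in for illustration, that pairs an anomalous login with a later suspicious activity by the same user. The record layout, the `correlate` function, the sample data, and the one-hour window are all assumptions.

```python
from datetime import datetime, timedelta

# Hypothetical anomaly records from two sources; the layout is an assumption.
LOGINS = [
    {"user": "alice", "time": datetime(2024, 1, 1, 9, 0)},
    {"user": "bob", "time": datetime(2024, 1, 1, 9, 30)},
]
ACTIVITIES = [
    {"user": "alice", "time": datetime(2024, 1, 1, 9, 20), "what": "mail forwarding rule created"},
    {"user": "bob", "time": datetime(2024, 1, 1, 14, 0), "what": "mass file download"},
]


def correlate(logins, activities, window=timedelta(hours=1)):
    """Pair each anomalous login with suspicious activity by the same
    user that follows the login within `window`."""
    pairs = []
    for login in logins:
        for act in activities:
            delay = act["time"] - login["time"]
            if login["user"] == act["user"] and timedelta(0) <= delay <= window:
                pairs.append((login, act))
    return pairs


if __name__ == "__main__":
    for login, act in correlate(LOGINS, ACTIVITIES):
        print(login["user"], "->", act["what"])
```

Neither signal alone would necessarily justify an incident; the pairing is what raises confidence that the two events belong to one attack.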

Expedite threat hunting, incident investigation, and response

Proactive threat hunting is a critical yet time-consuming task for Security Operations Centers. Azure Sentinel makes hunting easier with a rich hunting interface that features a growing collection of hunting queries, exploratory queries, and Python libraries for use in Jupyter Notebooks. Use these to identify events of interest and bookmark them for later reference.

Incidents (formerly cases) contain one or more alerts that require further investigation. Incidents now support tagging, comments, and assignments. A new rules wizard allows you to decide which Microsoft alerts trigger the creation of incidents.

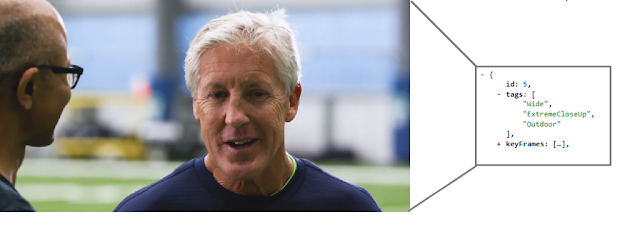

Using the new investigation graph preview, you can visualize and traverse the connections between entities like users, assets, applications, or URLs and related activities like logins, data transfers, or application usage to rapidly understand the scope and impact of an incident.
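Traversing connections between entities is, at its core, a graph search. The following Python sketch shows the general idea with a breadth-first search that collects every entity within a given number of hops of a starting entity. It is an illustration only, not the investigation graph's implementation; the `GRAPH` data, the entity naming scheme, and the `reachable` function are hypothetical.

```python
from collections import deque

# Hypothetical entity graph: each entity maps to the entities it touched.
GRAPH = {
    "user:alice": ["host:ws-01", "app:mail"],
    "host:ws-01": ["url:example.test/payload"],
    "app:mail": [],
    "url:example.test/payload": [],
    "user:bob": ["host:ws-02"],
    "host:ws-02": [],
}


def reachable(graph, start, max_hops):
    """Breadth-first search from `start`; return a dict mapping each
    entity within `max_hops` to its hop distance from `start`."""
    seen = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if seen[node] == max_hops:
            continue  # do not expand beyond the hop limit
        for neighbor in graph.get(node, []):
            if neighbor not in seen:
                seen[neighbor] = seen[node] + 1
                queue.append(neighbor)
    return seen


if __name__ == "__main__":
    for entity, hops in reachable(GRAPH, "user:alice", max_hops=2).items():
        print(hops, entity)
```

Limiting the hop count keeps the result focused on the entities most likely to be in scope for the incident.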

New actions and playbooks simplify the process of incident automation and remediation using Azure Logic Apps. Send an email to validate a user action, enrich an incident with geolocation data, block a suspicious user, or isolate a Windows machine.

Build on the expertise of Microsoft and community members

The Azure Sentinel GitHub repository has grown to over 400 detection, exploratory, and hunting queries, plus Azure Notebooks samples and related Python libraries, playbook samples, and parsers. The bulk of these were developed by our MSTIC security researchers based on their vast global security experience and threat intelligence.

Support managed security services providers and complex customer instances

Azure Sentinel now works with Azure Lighthouse, empowering customers and managed security services providers (MSSPs) to view Azure Sentinel for multiple tenants without the need to navigate between tenants. We have worked closely with our partners to jointly develop a solution that addresses their requirements for a modern SIEM.

DXC Technology, one of the largest global MSSPs, is a great example of this design partnership:

“Through our strategic partnership with Microsoft, and as a member of the Microsoft Security Partner Advisory Council, DXC will integrate and deploy Azure Sentinel into the cyber defense solutions and intelligent security operations we deliver to our clients,” said Mark Hughes, senior vice president and general manager, Security, DXC. “Our integrated solution leverages the cloud native capabilities and assets of Azure Sentinel to orchestrate and automate large volumes of security incidents, enabling our security experts to focus on the forensic investigation of high priority incidents and threats.”