Saturday, 31 December 2022

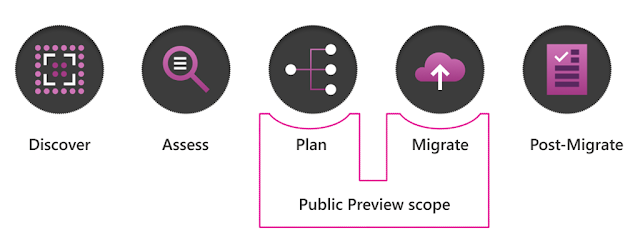

Zero downtime migration for Azure Front Door—now in preview

Thursday, 29 December 2022

Delivering consistency and transparency for cloud hardware security

Securing customer workloads from the cloud to the edge

Caliptra: Integrating trust into every chip

Hydra: A new secure Baseboard Management Controller (BMC)

Kirkland: A secure Trusted Platform Module (TPM)

Open hardware innovation at cloud scale

Tuesday, 27 December 2022

Microsoft Azure CLX: A personalized program to learn Azure

What is the CLX program?

What courses will I take?

The courses you take are up to you. The self-paced program is tailored to your skill set, and you can choose from six tracks: Microsoft Azure Fundamentals, Microsoft Azure AI Fundamentals, Microsoft Azure Data Fundamentals, Microsoft Azure Administrator, Administering Windows Server Hybrid Core Infrastructure, and Windows Server Hybrid Advanced Series, with more on the way. Learn more about these tracks below.

| Course | Learner Personas | Course Content |
| --- | --- | --- |
| Microsoft Azure Fundamentals | Administrators, Business Users, Developers, Students, Technology Managers | This course strengthens your knowledge of cloud concepts and Azure services, workloads, security, privacy, pricing, and support. It’s designed for learners with an understanding of general technology concepts, such as networking, computing, and storage. |
| Microsoft Azure AI Fundamentals | AI Engineers, Developers, Data Scientists | This course, designed for both technical and non-technical professionals, bolsters your understanding of typical machine learning and artificial intelligence workloads and how to implement them for Azure. |
| Microsoft Azure Data Fundamentals | Database Administrators, Data Analysts, Data Engineers, Developers | The Data Fundamentals course instructs you on Azure core data concepts, Azure SQL, Azure Cosmos DB, and modern data warehouse analytics. It’s designed for learners with a basic knowledge of core data concepts and how they’re implemented in Azure. |
| Microsoft Azure Administrator | Azure Cloud Administrators, VDI Administrators, IT Operations Analysts | In Azure Administrator, you’ll learn to implement cloud infrastructure, develop applications, and perform networking, security, and database tasks. It’s designed for learners with a robust understanding of operating systems, networking, servers, and virtualization. |
| Administering Windows Server Hybrid Core Infrastructure | Systems Administrators, Infrastructure Deployment Engineers, Senior System Administrators, Senior Site Reliability Engineers | In this course, you’ll learn to configure on-premises Windows Servers, hybrid, and Infrastructure as a Service (IaaS) platform workloads. It’s geared toward those with the knowledge to configure, maintain, and deploy on-premises Windows Servers, hybrid, and IaaS platform workloads. |
| Windows Server Hybrid Advanced Series | System Administrators, Infrastructure Deployment Engineers, Associate Database Administrators | This advanced series, which is designed for those with deep administration and deployment knowledge, strengthens your ability to configure and manage Windows Server on-premises, hybrid, and IaaS platform workloads. |

How do I get certified?

Saturday, 24 December 2022

Improve speech-to-text accuracy with Azure Custom Speech

Custom Speech data types and use cases

Research milestones

Customer inspiration

Speech Services and Responsible AI

Friday, 23 December 2022

How to Begin Preparation for the Microsoft AZ-500 Certification Exam?

Overview of Azure AZ-500 Certification

AZ-500 is an associate-level certification exam. Passing it shows that you have solid, working expertise in Azure security. The Microsoft AZ-500 certification validates your skills in working in the Azure cloud with identity control and security.

The exam tests whether the candidate understands identity control and security processes in Azure.

This exam is a good fit if you have knowledge of or experience with Microsoft Azure fundamentals, or some prior knowledge of advanced security.

Microsoft AZ-500 Exam Details

The number of questions varies across Microsoft exams, but is typically about 40 to 60. The time allowed for this exam is 120 minutes, and you need to score at least 700 out of 1000 to pass the AZ-500 exam.
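To make these figures concrete, here is a minimal sketch that turns the quoted exam length and question range into an average time budget per question. The function name `seconds_per_question` is hypothetical, and the inputs are only the approximate numbers given above, not official values.

```python
def seconds_per_question(total_minutes: int, question_count: int) -> float:
    """Average time available per question, in seconds."""
    if question_count <= 0:
        raise ValueError("question_count must be positive")
    return total_minutes * 60 / question_count


# Figures quoted above for AZ-500: 120 minutes, roughly 40 to 60 questions.
for count in (40, 50, 60):
    budget = seconds_per_question(120, count)
    print(f"{count} questions: about {budget:.0f} seconds each")
```

Under these assumptions, the budget is roughly two to three minutes per question, depending on how many questions appear.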

Microsoft Azure AZ-500 Exam Domains:

How to Begin Preparation for the Microsoft AZ-500 Certification Exam?

Let’s dive into preparing for the Microsoft Azure Security Technologies AZ-500 exam. The following are a few proven steps for thorough preparation for the AZ-500 certification exam.

1. Visit the Microsoft Official website

Once you have decided to begin preparing for the AZ-500 exam, your first step should be to explore the official webpage. There you will find all the essential details about the exam, such as prerequisites, cost, and objectives. Most importantly, you will find timely updates about the exam, which help keep your preparation on the right track.

2. Have Detailed Knowledge of the Exam Objectives

Another crucial step is to understand the AZ-500 syllabus clearly. Use the study guide to work through every exam objective and the subtopics under each topic. A solid understanding of the syllabus will not only smooth your preparation but also give you the confidence to pass the exam.

3. Enroll in Training Courses

Enrolling in an official online training course is also valuable when preparing for AZ-500. The official training course, “Course AZ-500T00: Microsoft Azure Security Technologies,” gives your exam preparation a further advantage. With the instructor-led course, it will be simpler for you to acquire technical skills and conceptual knowledge.

4. Microsoft AZ-500 Practice Test

Practice tests are excellent study resources for the AZ-500 exam. Practice tests from reputable websites, such as edusum, can be a productive part of your preparation. They give you an opportunity to gauge your skills in a simulated environment. Use this opportunity to familiarize yourself with the question types you will face in the actual exam and to boost your confidence.

5. Online Community

You can join an online community at any time while studying for the exam. Group study keeps you in touch with others following the same path as you, and a large community means there are many people who can help with any questions you have about the AZ-500 exam.

Is the Microsoft Azure Security Technologies AZ-500 Certification Worth It?

There are always advantages to achieving IT certifications for professionals who work in the IT field. Here are some of the reasons that earning the AZ-500 Azure Security Technologies certification is worth it:

Conclusion

According to experts, Azure certification programs like the Microsoft Azure AZ-500 are more than just exams. The AZ-500 certification will improve your confidence and can introduce you to established figures in the IT industry. Moreover, it is regarded as one of the best cloud security certifications available.

Thursday, 22 December 2022

Microsoft Innovation in RAN Analytics and Control

Dynamic service models for real-time RAN control

The Microsoft RAN analytics and control framework

Examples of new applications of RAN analytics

Saturday, 17 December 2022

Learn how Microsoft datacenter operations prepare for energy issues

Supporting grid stability by responsibly managing our energy consumption

Resilient infrastructure investment

Resiliency recommendations for cloud architectures

Wednesday, 14 December 2022

Your Best Help to Clear Microsoft DP-900 Exam

You may find this article helpful if you are planning to take the Microsoft DP-900 exam soon. In this post, you will learn the essential details of the exam and how to prepare for it. Let’s get started!

All About Microsoft DP-900 Certification Exam

Microsoft DP-900 is an entry-level exam that familiarizes candidates with essential data concepts and gives them an understanding of how these concepts are implemented using Microsoft data services.

Although Microsoft doesn’t publish the exact duration and number of questions, you can expect 40 to 60 questions, which you will need to answer within 60 minutes. The exam is available in multiple languages and costs $99.

Once you pass the Microsoft DP-900 exam, you will be granted the Microsoft Certified - Azure Data Fundamentals certification. This fundamentals-level certification provides the jump-start you need to establish your IT career in Azure. After you learn the fundamentals, it becomes your stepping stone to Microsoft Azure role-based certifications such as Azure Database Administrator Associate or Azure Data Engineer Associate.

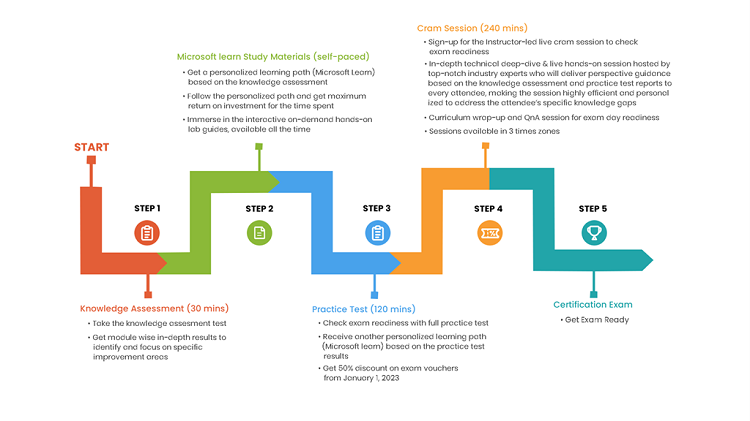

Preparation for the Microsoft DP-900 Certification Exam

If you plan to take Microsoft Azure Data Fundamentals DP-900, you don’t have to stress. With the right preparation strategy and appropriate study materials, you can pass the exam with a high score. Below are some of the top preparation tips that will help you achieve your desired score.

1. Know the DP-900 Syllabus Topics

First, explore the official webpage and understand the DP-900 syllabus topics. Become thoroughly familiar with each topic and its subtopics, and understand what you are expected to know. After reading the syllabus, draw up a study plan that covers all of its topics. Be realistic about your study schedule. Don’t cram your preparation into the few days before your scheduled exam date. Take time to learn everything carefully so that you don’t miss anything before taking the DP-900 exam.

2. Choose the Best Study Resources

There are many study guides and other learning materials available for DP-900 exam preparation, which means you can find enough of them to pass the exam with a good score. Don’t be overwhelmed by the wealth of resources out there. To ensure you use only reliable ones, limit your search to reputable platforms such as the official Microsoft website. Microsoft provides training courses for all Microsoft exams, so you will find enough materials on this site.

3. Learn Time Management with DP-900 Practice Test

Before taking the Microsoft Azure Data Fundamentals exam, it is crucial to acquire exam-taking and time-management skills. The ideal way to do this is to take DP-900 practice tests on your own in a mock environment. This will help you become proficient at working within the time limit. You may feel you have sufficient time, but if you are not careful, you may find that the 60-minute duration is not enough to answer all the questions. So take time to answer practice questions in a simulated environment.
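One way to practice pacing is to set checkpoints for a timed mock test. The sketch below is only an illustration: the function name `pacing_checkpoints`, the five-minute reserve for revisiting skipped questions, and the four evenly spaced checkpoints are all assumptions, not part of any official guidance.

```python
import math


def pacing_checkpoints(total_minutes, question_count,
                       review_minutes=5, checkpoints=4):
    """Return (elapsed_minutes, questions_done) targets for a timed test.

    review_minutes is held back at the end for revisiting skipped
    questions; the remaining time is split into equal checkpoints.
    """
    working_minutes = total_minutes - review_minutes
    if working_minutes <= 0 or question_count <= 0 or checkpoints <= 0:
        raise ValueError("inputs must leave positive working time")
    plan = []
    for i in range(1, checkpoints + 1):
        elapsed = working_minutes * i / checkpoints
        # Round up so the final checkpoint always covers every question.
        done = math.ceil(question_count * i / checkpoints)
        plan.append((elapsed, done))
    return plan


# Assumed mock test: 60 minutes and 60 questions (upper end of the range).
for elapsed, done in pacing_checkpoints(60, 60):
    print(f"By minute {elapsed:g}: question {done} answered")
```

If you fall behind a checkpoint during a practice run, that is a signal to skip harder questions sooner and come back to them in the reserved time.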

4. Give Yourself A Break

When you have studied and done everything you need to, the next thing is to unwind and trust your preparation. Take short breaks between study sessions and get a good rest the day before the exam. Being fresh and calm will help you a lot on the actual exam day.

Don’t get nervous. Relax and read the questions carefully on the day of your exam. If you come across questions you are not familiar with, mark them for review and skip them. You can return to them once you have answered the rest. Don’t leave any question unanswered: you are not penalized for answering incorrectly, and a correct guess will improve your overall score.

Benefits of Microsoft Azure Data Fundamentals DP-900 Certification

Conclusion

Microsoft DP-900 is an excellent step toward a career in Azure. With the Microsoft Azure Data Fundamentals certification, you can advance your career and salary potential in the IT field.

Remember, passing any exam demands persistence and sufficient preparation. Taking advantage of the available resources makes it simpler to pass the exam.