Developers are essential to the world we live in today, and the work you do is critical to powering organizations in every industry. Every developer and development team brings new ideas and innovation. Our ambition with the Microsoft Cloud and Azure is to be the platform for all of this innovation, empowering the entire community as they build what comes next.

Microsoft was founded as a developer tools company, and developers remain at the very center of our mission. Today, we offer the most used and beloved developer tools in Visual Studio, .NET, and GitHub. We also offer a trusted and comprehensive platform for building amazing apps and solutions that help people and organizations across the planet achieve more.

Today, more than 95 percent of the world’s largest companies choose Microsoft Azure to run their business, as do thousands of innovative small and mid-size organizations. The NBA uses Azure and AI capabilities to turn billions of in-game data points into customizable content for its fans. Stonehenge Technology Labs has increased developer velocity for STOPWATCH, its fast-growing commerce enhancement software, by using Azure, Live Share, and Visual Studio.

With the Microsoft Cloud and Azure, we meet you where you are and make it easy to start your cloud-native journey from anywhere. Developers can use their favorite languages, open-source frameworks, and tools to code and deploy to the cloud and the edge, collaborate securely, and quickly integrate different components with low-code solutions.

In support of all this, here are some of the latest developments we’ll discuss at Microsoft Build this week. You can also view the “Scaling cloud-native apps and accelerating app modernization” session to learn more about these announcements.

Build modern, cloud-native apps productively with serverless technologies and the best Kubernetes experience for developers

As you build new apps, you’ll want them to be cloud-native, because cloud-native apps are designed to take full advantage of everything the cloud offers. Cloud-native design patterns help you achieve the agility, efficiency, and speed of innovation your business needs. At the same time, end users expect more from apps than ever before. Product launches, peak shopping seasons, and sporting events are just a few examples of highly dynamic usage demands that modern apps must be prepared to handle.

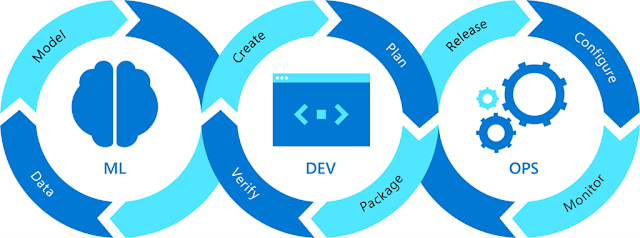

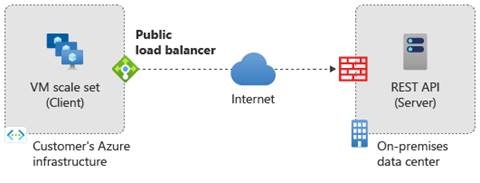

Architectures and technologies like containers, serverless, microservices, APIs, and DevOps everywhere make this possible and offer the shortest path to cloud value. With Azure, GitHub, and the Microsoft Cloud, we’re working to make all of these capabilities easier for you to use.

Azure Container Apps is an ideal platform for application developers who want to run microservices in serverless containers without managing infrastructure. Today, Azure Container Apps is generally available and ready for you to use. It’s built on the strong open-source foundation of the Kubernetes ecosystem, which is core to cloud-native applications.

Azure Kubernetes Service (AKS) was built to be a destination for all developers and to provide the best managed Kubernetes experience, whether it’s your first time trying Kubernetes or you use it regularly for quick testing. AKS delivers elastic provisioning of capacity without the need to manage the underlying compute infrastructure. It is also the fastest way to spin up managed Kubernetes clusters and configure a seamless DevSecOps workflow with CI/CD integration.

A great example of a customer taking advantage of AKS today is Adobe. Adobe moved to cloud-native practices a few years ago and adopted a microservices architecture. The company chose AKS for its scalability, flexibility, and multicloud capabilities. AKS brought faster development, from onboarding to production, while providing automated guardrails through DevSecOps practices.

Today, we have some great updates that improve the developer and operator experience on AKS even further, making it faster and easier than ever so you can spend more time writing code. We’re launching the Draft extension and CLI, a preview of a new integrated AKS web application routing add-on, and the Kubernetes Event-driven Autoscaling (KEDA) extension.
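A small calculation can illustrate the event-driven autoscaling that KEDA adds. The Python function below is a simplified sketch under stated assumptions, not KEDA’s code or API. It assumes a single queue-length trigger and applies the Kubernetes Horizontal Pod Autoscaler rule for an average-value external metric: the desired replica count is the total metric divided by the per-replica target, rounded up and clamped to bounds. The function name, its parameters, and the default bounds of 0 and 100 are hypothetical choices for illustration. The real autoscaler also applies tolerances and stabilization windows, which this sketch omits.

```python
import math


def desired_replicas(queue_length: int, target_per_replica: int,
                     min_replicas: int = 0, max_replicas: int = 100) -> int:
    """Hypothetical helper: simplified event-driven scaling decision.

    Mirrors the HPA rule for an average-value external metric:
    replicas = ceil(total_metric / target_per_replica), clamped to bounds.
    Tolerance, stabilization windows, and cooldowns are deliberately ignored.
    """
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    if queue_length <= 0:
        # No pending events: drop to the minimum, which may be zero replicas.
        return min_replicas
    wanted = math.ceil(queue_length / target_per_replica)
    # Never go below the floor or above the ceiling.
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    for backlog in (0, 5, 95, 5000):
        print(backlog, "->", desired_replicas(backlog, target_per_replica=10))
```

With a target of 10 messages per replica, a backlog of 95 messages yields 10 replicas, while a backlog of 5,000 is capped at the maximum of 100. With a minimum of 0, an empty queue scales the workload to zero, which is what makes event-driven scaling attractive for bursty traffic.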

The power and scalability of a cloud-native platform

The Microsoft Cloud is particularly rich as a development platform and ecosystem because of the services it delivers and the underlying cloud infrastructure that lets you focus on writing and shipping code. You can build on a complete cloud-native platform, from containers to cloud-native databases and AI services.

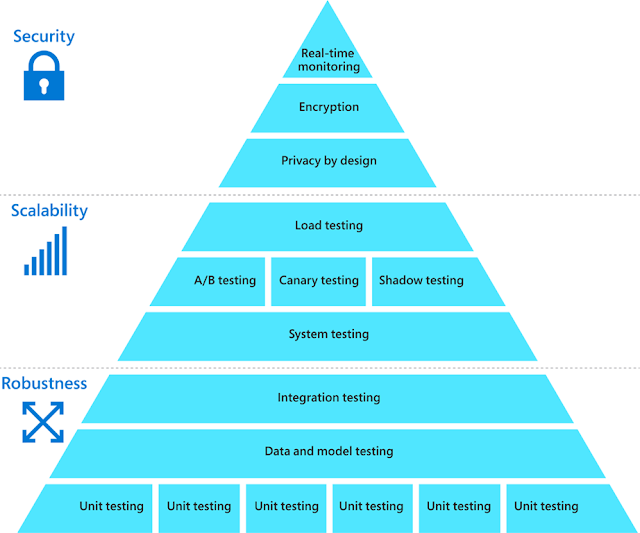

Azure Cosmos DB is a fully managed, serverless developer database, and the only database service on the market to offer service level agreements (SLAs) guaranteeing single-digit millisecond latency and 99.999 percent availability. These guarantees are available globally at any scale, even during traffic bursts.
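Here is the arithmetic behind the 99.999 percent availability figure. The downtime it allows is the unavailable fraction (1 − 0.99999) multiplied by the length of the measurement period. The Python sketch below does that calculation. The 365.25-day year and 30-day month are assumptions for illustration. The SLA itself, not this code, defines the actual measurement period and conditions.

```python
# Average year length including leap years (an assumption for illustration).
MINUTES_PER_YEAR = 365.25 * 24 * 60
MINUTES_PER_30_DAY_MONTH = 30 * 24 * 60


def allowed_downtime_minutes(availability_percent: float,
                             period_minutes: float = MINUTES_PER_YEAR) -> float:
    """Return the downtime budget, in minutes, implied by an availability percentage."""
    if not 0 <= availability_percent <= 100:
        raise ValueError("availability_percent must be between 0 and 100")
    unavailable_fraction = 1 - availability_percent / 100
    return period_minutes * unavailable_fraction


if __name__ == "__main__":
    for pct in (99.9, 99.99, 99.999):
        per_year = allowed_downtime_minutes(pct)
        per_month_s = allowed_downtime_minutes(pct, MINUTES_PER_30_DAY_MONTH) * 60
        print(f"{pct}%: {per_year:.2f} min/year, {per_month_s:.1f} s per 30-day month")
```

At 99.999 percent, the budget works out to about 5.26 minutes per year, or roughly 26 seconds in a 30-day month.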

Today, we’re improving Azure Cosmos DB elasticity with new burst capacity and by increasing serverless capacity to 1 TB, while still charging only for the storage and throughput used. Now in preview, these capabilities are ideal for workloads with intermittent and unpredictable traffic, and they help developers build scalable, cost-effective cloud-native applications.

We see customers innovating at a faster pace with cloud-native technologies. Azure Arc brings Azure security and cloud-native services to hybrid and multicloud environments, enabling you to secure and govern infrastructure and apps anywhere.

One example of a customer turning to Azure Arc is Royal Bank of Canada (RBC), Canada’s largest bank. With a Kubernetes-based deployment, Azure Arc enables the company to use its existing infrastructure investments and skill sets to manage and automate database deployments. Azure Arc-enabled data services have allowed RBC to accelerate time to market and product development, freeing more time and focus for innovating on and integrating its products and capabilities.

We continue to innovate and add new capabilities to Azure Arc for hybrid and multicloud scenarios. Today, we’re excited to announce several of them, including the landing zone accelerator for Azure Arc-enabled Kubernetes. It gives customers greater agility for cloud-native apps and tools that simplify hybrid and multicloud deployments, all while strengthening security and compliance. The landing zone accelerator provides best practices, guidance, and automated reference implementations for fast, easy deployment.

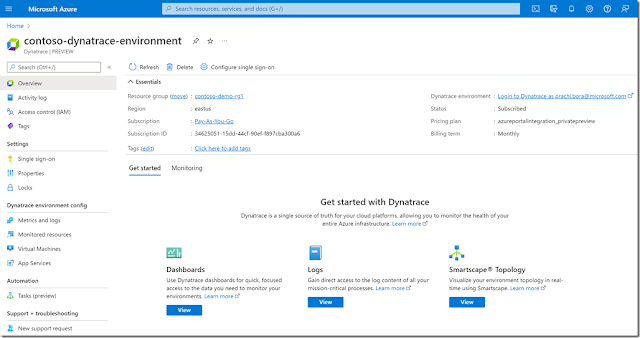

Azure Managed Grafana is part of our approach to provide customers with all the tools they need to manage, monitor, and secure their hybrid and multicloud investments. We recently launched this integration so you can easily deploy Grafana dashboards, complete with Azure’s built-in high availability and security.

I’m excited to share that the Business Critical tier of Azure Arc-enabled SQL Managed Instance is now generally available to meet the most demanding business continuity requirements. Developers can now build scalable, cost-effective cloud-native apps with the same top-rated security and automated update capabilities they’ve trusted for decades.

Modernize Java applications

Java continues to be one of the most important programming languages, and we’re committed to helping Java developers run their Spring applications more easily in the cloud. Through a long-time collaboration with Pivotal, now VMware, we created Azure Spring Cloud as a fully managed service for Spring Boot applications that solves the challenges of running Spring at scale. Azure Spring Cloud is a fully featured platform for all types of Spring applications. To better reflect this, the service is now called Azure Spring Apps.

Azure Spring Apps Enterprise will be generally available in June, bringing fully managed VMware Tanzu components running on Azure and advanced Spring Runtime support. Customers like FedEx are already using this collaboration on Azure Spring Apps to deliver an impactful solution for their end customers, helping predict delivery times for millions of packages globally.

Build with Microsoft Cloud

Developing with the Microsoft Cloud puts the latest technologies in your hands and empowers you with both control and productivity. It offers a trusted and comprehensive platform so you can build great apps and solutions.

Microsoft Build is all about celebrating the work you do and helping you build what comes next. Be sure to view the “Scaling cloud-native apps and accelerating app modernization” session to learn more about these announcements. I also encourage you to view the “Rapidly code, test, and ship from secure development environments” session for a deeper look at Microsoft’s developer tools. There’s an exciting week planned, so join in throughout the digital event for more announcements, customer stories, breakout sessions, learning opportunities, and technical demos. Enjoy the event. I can’t wait to see what you build.

Source: microsoft.com