The financial services industry is constantly evolving to meet customer and regulatory demands, and it faces challenges spanning people, processes, and technology. Financial institutions (FIs) need to keep accelerating technology adoption and innovation while maintaining scale, quality, speed, and safety. At the same time, they need to handle evolving regulatory frameworks, manage risk, transform digitally, process financial transaction volumes, and accelerate cost reductions and restructuring efforts.

Murex is a leading global software provider of trading, risk management, processing operations, and post-trade solutions for capital markets. FIs around the world deploy Murex’s MX.3 platform to better manage risk, accelerate transformation, and simplify compliance while driving revenue growth.

Murex MX.3 on Azure

Murex MX.3 has been certified for Microsoft Azure since version 3.1.35. We have been collaborating with Murex and global strategic partners like Accenture and DXC to give Murex customers a simple way to create and scale MX.3 infrastructure and achieve agility in business transformation. As of the recent version 3.1.48, SQL Server is supported, so customers can now benefit from its performance, scalability, resilience, and cost savings. With the SQL Server IaaS Extension, Murex customers can run SQL Server virtual machines (VMs) in Azure with PaaS capabilities for the Windows OS (with the automated patching setting disabled, to prevent the installation of a cumulative update that may not yet be supported by MX.3).

Architecture

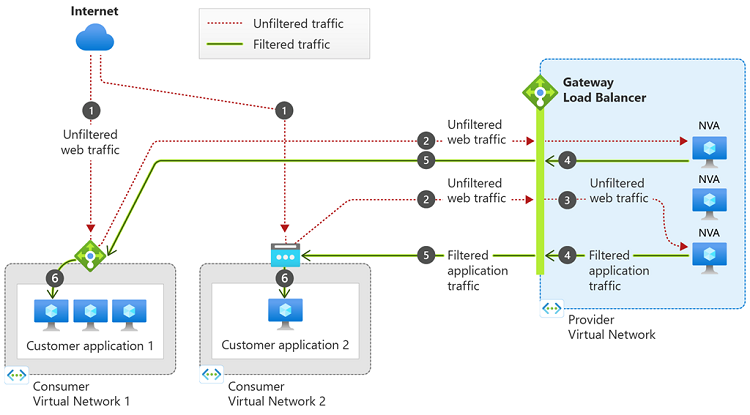

Murex customers can now refer to this architecture to implement the MX.3 application on Azure. Azure provides a secure, reliable, scalable, and high-performance environment while significantly reducing the infrastructure cost of operating MX.3. Customers running MX.3 on Azure can take advantage of the multilayered security Microsoft provides across physical data centers, infrastructure, and operations in Azure. They can benefit from the Compliance Program, which helps accelerate cloud adoption with proactive compliance assurance for highly critical and regulated workloads. Customers can also maximize their existing on-premises investments using an effective hybrid approach. Azure provides a holistic, seamless, and more secure approach to innovation across customers’ on-premises, multicloud, and edge environments.

The architecture is designed to provide high availability and disaster recovery. Murex customers can achieve threat intelligence and traffic control using Azure Firewall, cost optimization using Reserved Instances and VM scale sets, and high storage throughput using Azure NetApp Files Ultra Storage.

“With the deployment of large scale (originally specialized platform-based) Murex workloads, Azure NetApp Files has proven to deliver the ideal Azure landing zone for storage-performance intensive, mission-critical enterprise applications and to live up to its promise to Migrate the Un-migratable,” says Geert van Teylingen, Azure NetApp Files Principal Product Manager from NetApp.

Customers running Murex on Azure

Customers around the world are migrating the Murex platform from on-premises to Azure.

ABN AMRO has moved their MX.3 trading and treasury front-to-back-to-risk platform to Azure, achieving flexibility, agility, and improved time to market. ABN AMRO’s journey to Azure progressed from proof of concept to production, with the Murex MX.3 platform now entirely operational on Azure.

“The key focus for us was always to make sure that we could automate most processes while preserving its operational excellence and key features,” says Kees van Duin, IT Integrator at ABN AMRO. “Thanks to Microsoft, we were able to preserve nearly 90 percent of our original design and move our platform to the cloud, while in-production, as efficiently as possible. We couldn’t be happier with the result,” he continues.

For Pavilion Energy, Upskills drove the implementation of Murex Trading in Azure, helping reduce the risk of errors, increase the volume of trading activities, and optimize the management of their Murex MX.3 platform environments.

“We have been working on the Murex technology for over 10 years. Implementing Murex Trading Platform fully into Azure has proven to be the right decision to reduce the risk of delivery, optimize the environments management, and provide sustainable solutions and support to Pavilion Energy,” says Thong Tran, Chief Executive Officer (CEO) of Upskills.

Strategic partners helping accelerate Murex workloads

Murex customers can modernize MX.3 workloads, reduce time to market and operational costs, and accelerate delivery by using accelerators, scripts, and blueprints from our partners Accenture and DXC.

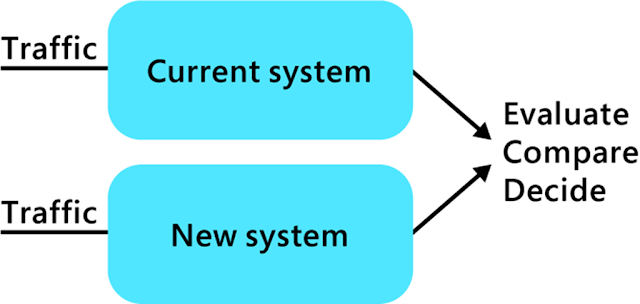

Accenture and Microsoft have decades of experience partnering with each other and building joint solutions that help customers achieve their goals. Building on this strategic alliance, Accenture has designed and created specific accelerators, tools, and methodologies for MX.3 on Azure that can help organizations build richer DevOps practices and become more agile while controlling costs.

Luxoft, a DXC Technology Company, has been a global strategic partner of Microsoft for more than 30 years and a top-tier alliance partner of Murex for more than 13 years. It helps modernize solutions to connect people, data, and processes with tangible business results. DXC has developed execution frameworks that adopt market best practices to accelerate the cloud migration of MX.3 to Azure and minimize its risks.

Keeping pace with changing regulatory and compliance constraints, financial innovation, computational complexity, and cyber threats is essential for FIs. FIs around the world rely on Murex MX.3 to accelerate transformation and drive growth and innovation while complying with complex regulations. Customers are using Azure to enhance business agility and operational efficiency, reduce risk and total cost of ownership, and achieve scalability and robustness.

Source: microsoft.com