Cloud computing has revolutionized the way you build, deploy, and scale applications and services. You gain unprecedented flexibility, agility, and scalability, but you also face greater challenges in managing cost, security, and compliance. While IT security and compliance are often managed by central teams, cost is a shared responsibility across executive, finance, product, and engineering teams, which is what makes managing cloud cost such a challenge. Having the right tools to enable cross-group collaboration and make data-driven decisions is critical.

Fortunately, you have everything you need in the Microsoft Cloud to implement a streamlined FinOps practice that brings people together and connects them to the data they need to make business decisions. And with new developments like Copilot in Microsoft Cost Management and Microsoft Fabric, there couldn’t be a better time to take a fresh look at how you manage cost within your organization and how you can leverage the FinOps Framework and the FinOps Open Cost and Usage Specification (FOCUS) to accelerate your FinOps efforts.

There’s a lot to cover in this space, so I’ll split this across a series of blog posts. In this first blog post, I’ll introduce the core elements of Cost Management and Fabric that you’ll need to lay the foundation for the rest of the series, including how to export data, how FOCUS can help, and a few quick options that anyone can use to set up reports and alerts in Fabric with just a few clicks.

No-code extensibility with Cost Management exports

As your FinOps team grows to cover new services, endpoints, and datasets, you may find they spend more time integrating disparate APIs and schemas than driving business goals. This complexity also keeps simple reports and alerts just out of reach for executive, finance, and product teams. And when your stakeholders can’t get the answers they need, they push more work onto engineering teams to fill those gaps, which, again, takes away from driving business goals.

We envision a future where FinOps teams can empower all stakeholders to stay informed and get the answers they need through turn-key integration and AI-assisted tooling on top of structured guidance and open specifications. And this all starts with Cost Management exports—a no-code extensibility feature that brings data to you.

As of today, you can sign up for a limited preview of Cost Management exports where you can export five new datasets directly into your storage account without a single line of code. In addition to the actual and amortized cost and usage details you get today, you’ll also see:

◉ Cost and usage details aligned to FOCUS

◉ Price sheets

◉ Reservation details

◉ Reservation recommendations

◉ Reservation transactions

Of note, the FOCUS dataset includes both actual and amortized costs in a single dataset, which can drive additional efficiencies in your data ingestion process. You’ll benefit from reduced data processing times and more timely reporting on top of reduced storage and compute costs due to fewer rows and less duplication of data.
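To illustrate why a single dataset helps, here is a minimal Python sketch. It assumes the FOCUS export has been downloaded as CSV and that the FOCUS columns BilledCost and EffectiveCost carry the actual and amortized cost, respectively. The sample rows and the `summarize_costs` helper are hypothetical and only stand in for a real export file.

```python
import csv
import io
from decimal import Decimal

# Hypothetical sample rows standing in for a FOCUS export file.
# BilledCost and EffectiveCost are FOCUS columns; the values are made up.
SAMPLE_FOCUS_CSV = """ChargePeriodStart,ServiceCategory,BilledCost,EffectiveCost
2023-11-01,Compute,120.00,0.00
2023-11-01,Compute,0.00,4.00
2023-11-01,Storage,2.50,2.50
"""


def summarize_costs(csv_text):
    """Return (actual, amortized) totals from one pass over FOCUS rows."""
    billed = Decimal("0")
    effective = Decimal("0")
    for row in csv.DictReader(io.StringIO(csv_text)):
        billed += Decimal(row["BilledCost"])
        effective += Decimal(row["EffectiveCost"])
    return billed, effective


actual_total, amortized_total = summarize_costs(SAMPLE_FOCUS_CSV)
print(f"Actual (billed) cost: {actual_total}")
print(f"Amortized (effective) cost: {amortized_total}")
```

Because both values sit on the same row, one read of the data yields both views, rather than ingesting separate actual and amortized files.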

Beyond the new datasets, you’ll also discover optimizations that deliver large datasets more efficiently, reduce storage costs by updating existing files rather than creating new ones each day, and more. All exports are scheduled at the same time to ensure scheduled refreshes of your reports stay in sync with the latest data. Coupled with file partitioning, which is already available and recommended today, and data compression, which you’ll see in the coming months, the exports preview removes the need to write complex code to extract, transfer, and load large datasets reliably via APIs. This better enables all FinOps stakeholders to build custom reports to get the answers they need without having to learn a single API or write a single line of code.
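If you do want to process exported files in code, partitioned exports are straightforward to combine. The following is a minimal Python sketch, assuming the partition files have already been downloaded from the storage account to a local folder as CSV. The file names, the sample values, and the `read_partitioned_export` helper are hypothetical and not part of Cost Management.

```python
import csv
import tempfile
from pathlib import Path


def read_partitioned_export(folder):
    """Yield rows from every CSV partition in a folder, in file-name order."""
    for part in sorted(Path(folder).glob("*.csv")):
        with part.open(newline="") as handle:
            yield from csv.DictReader(handle)


# Demo with two hypothetical partition files written to a temporary folder.
with tempfile.TemporaryDirectory() as folder:
    Path(folder, "part_0.csv").write_text("ResourceId,EffectiveCost\nvm-1,4.00\n")
    Path(folder, "part_1.csv").write_text("ResourceId,EffectiveCost\nvm-2,2.50\n")
    # Consume the generator while the temporary files still exist.
    export_rows = list(read_partitioned_export(folder))

print(len(export_rows), "rows loaded")
```

Each partition carries its own header row, so the files can be read independently and the rows simply concatenated.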

FOCUS democratizes cloud cost analytics

In case you’re not familiar, FOCUS is a groundbreaking initiative to establish a common, provider- and service-agnostic format for billing data that empowers organizations to better understand cost and usage patterns and optimize spending and performance across multiple cloud, software as a service (SaaS), and even on-premises service offerings. FOCUS provides a consistent, clear, and accessible view of cost data, explicitly designed for FinOps needs. As the new “language” of FinOps, FOCUS enables practitioners to collaborate more efficiently and effectively with peers throughout the organization and even maximize transferability and onboarding for new team members, getting people up and running more quickly.

FOCUS 0.5 was originally announced in June 2023, and we’re excited to be leading the industry with our announcement of native support for the FOCUS 1.0 preview as part of Cost Management exports on November 13, 2023. We believe FOCUS is an important step forward for our industry, and we look forward to our industry partners joining us and collaboratively evolving the specification alongside FinOps practitioners from our collective customers and partners.

FOCUS 1.0 preview adds new columns for pricing, discounts, resources, and usage along with prescribed behaviors around how discounts are applied. Soon, you’ll also have a powerful new use case library, which offers a rich set of problems and prebuilt queries to help you get the answers you need without the guesswork. Armed with FOCUS and the FinOps Framework, you have a literal playbook on how to understand and extract answers out of your data effortlessly, enabling you to empower FinOps stakeholders, regardless of how much knowledge or experience they have, to get the answers they need to maximize business value with the Microsoft Cloud.

Microsoft Fabric and Copilot enable self-service analytics

So far, I’ve talked about how you can leverage Cost Management exports as a turn-key solution to extract critical details about your costs, prices, and reservations, using FOCUS as a consistent, open billing data format whose use case library is a veritable treasure map for finding answers to your FinOps questions. While these are all amazing tools that will accelerate your FinOps efforts, the true power of democratizing FinOps lies at the intersection of Cost Management and FOCUS with a platform that enables you to provide your stakeholders with self-serve analytics and alerts. And this is exactly what Microsoft Fabric brings to the picture.

Microsoft Fabric is an all-in-one analytics solution that encompasses data ingestion, normalization, cleansing, analysis, reporting, alerting, and more. I could write a separate blog post about how to implement each FinOps capability in Microsoft Fabric, but to get you acclimated, let me introduce the basics.

Your first step to leveraging Microsoft Fabric starts in Cost Management, which has done much of the work for you by exporting details about your prices, reservations, and cost and usage data aligned to FOCUS.

Once exported, you’ll ingest your data into a Fabric lakehouse, SQL, or KQL database table and create a semantic model to bring data together for any reports and alerts you’ll want to create. The database option you use will depend on how much data you have and your reporting needs. Below is an example using a KQL database, which uses Azure Data Explorer under the covers, to take advantage of the performance and scale benefits as well as the powerful query language.

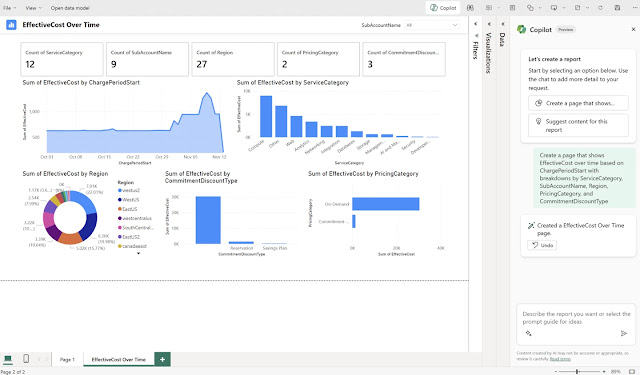

Fabric offers several ways to quickly explore data from a semantic model. You can explore data by simply selecting the columns you want to see, but I recommend trying the auto-create report option, which takes that one step further by generating a quick summary based on the columns you select. As an example, here’s an auto-generated summary of the FOCUS EffectiveCost broken down by ChargePeriodStart, ServiceCategory, SubAccountName, Region, PricingCategory, and CommitmentDiscountType. You can apply quick tweaks to any visual or switch to the full edit experience to take it even further.
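The same kind of breakdown can be sketched outside of Fabric. The following minimal Python example groups EffectiveCost by one of the FOCUS columns named above. The sample rows and the `effective_cost_by` helper are hypothetical, and this only illustrates the aggregation, not how Fabric builds its summary.

```python
from collections import defaultdict
from decimal import Decimal

# Hypothetical rows using FOCUS column names; the values are made up.
focus_rows = [
    {"ChargePeriodStart": "2023-11-01", "ServiceCategory": "Compute", "EffectiveCost": "4.00"},
    {"ChargePeriodStart": "2023-11-01", "ServiceCategory": "Storage", "EffectiveCost": "2.50"},
    {"ChargePeriodStart": "2023-11-02", "ServiceCategory": "Compute", "EffectiveCost": "1.25"},
]


def effective_cost_by(rows, column):
    """Sum EffectiveCost for each distinct value of the given column."""
    totals = defaultdict(Decimal)  # Decimal() starts each total at 0
    for row in rows:
        totals[row[column]] += Decimal(row["EffectiveCost"])
    return dict(totals)


by_service = effective_cost_by(focus_rows, "ServiceCategory")
by_day = effective_cost_by(focus_rows, "ChargePeriodStart")
for service, cost in sorted(by_service.items()):
    print(f"{service}: {cost}")
```

Swapping the column name is all it takes to pivot the summary, for example to SubAccountName or Region, as long as that column is present in your rows.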

Those with a keen eye may notice the Copilot button at the top right. If we switch to edit mode, we can take full advantage of Copilot and even ask it to create the same summary.

Copilot starts to get a little fancier with the visuals and offers summarized numbers and a helpful filter. I can also go further with more specific questions about commitment-based discounts.

Of course, this is barely scratching the surface. With a richer semantic model including relationships and additional details, Copilot can go even further and save you time by giving you the answers you need and building reports with less time and hassle.

In addition to having unparalleled flexibility in reporting on the data in the way you want, you can also create fine-grained alerts in a more flexible way than ever before with very little effort. Simply select the visual you want to measure and specify when and how you want to be alerted.

This gets even more powerful when you add custom visuals, measures, and materialized views that offer deeper insights.

This is just a glimpse of what you can do with Cost Management and Microsoft Fabric together. I haven’t even touched on the data flows, machine learning capabilities, and the potential of ingesting data from multiple cloud providers or SaaS vendors that also use FOCUS to give you a full, single pane of glass for your FinOps efforts. You can imagine the possibilities of how Copilot and Fabric can impact every FinOps capability, especially when paired with rich collaboration and automation tools like Microsoft Teams, Power Automate, and Power Apps that can help every stakeholder accomplish more together.

Source: microsoft.com