Recently a new flaw was discovered in PolKit, a component that controls system-wide privileges in Unix-like operating systems. This vulnerability potentially allows an unprivileged account to gain root permissions. In this blog post, we focus on this vulnerability and demonstrate how an attacker can easily abuse and weaponize it. In addition, we present how Azure Security Center can help you detect threats, and we provide recommendations for mitigation steps.

The PolKit vulnerability

PolKit (previously known as PolicyKit) is a component that provides a centralized way to define and handle policies, and it controls system-wide privileges in Unix-like operating systems. The vulnerability, CVE-2018-19788, is caused by improper validation of permission requests. It allows a non-privileged user whose user ID is greater than the maximum signed 32-bit integer (INT_MAX, 2147483647) to successfully execute arbitrary code in the root context.

The vulnerability exists in PolKit 0.115 and earlier versions, which come pre-installed on some of the most popular Linux distributions. A patch has been released, but it must be installed manually through the package manager of the relevant distribution.

You can check whether your machine is vulnerable by running the command “pkttyagent --version” and verifying that your PolKit version is not an affected one.
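The version check can be scripted. The following is a minimal sketch, not a definitive test: the helper name `is_affected_version` is hypothetical, and the 0.115 threshold assumes, following the CVE description, that 0.115 itself is affected. Distributions often backport fixes without changing the upstream version number, so treat the result as a hint and confirm it against your distribution's security advisory.

```shell
#!/bin/bash
# Sketch: flag PolKit versions at or below 0.115.
# The threshold is an assumption based on the CVE-2018-19788 description.

# Succeeds when the given version sorts at or before the threshold.
is_affected_version() {
    local version="$1" threshold="0.115"
    # sort -V orders version strings; the smaller one comes first.
    [ "$(printf '%s\n%s\n' "$version" "$threshold" | sort -V | head -n1)" = "$version" ]
}

if command -v pkttyagent >/dev/null 2>&1; then
    # pkttyagent prints a line such as "pkttyagent version 0.105".
    installed="$(pkttyagent --version | awk '{print $NF}')"
    if is_affected_version "$installed"; then
        echo "PolKit $installed may be affected; check your distribution's advisory"
    else
        echo "PolKit $installed is newer than 0.115"
    fi
else
    echo "pkttyagent not found; PolKit may not be installed"
fi
```

Comparing with `sort -V` avoids treating the version as a decimal number, which would order multi-part version strings incorrectly.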

How an attacker can exploit this vulnerability to gain access to your environment

We are going to demonstrate a simple exploitation inspired by a previously published proof of concept (POC). The exploitation shows how an attacker could leverage this vulnerability to achieve privilege escalation and access restricted files. For this demonstration, we will use one of the most popular Linux distributions today.

First, we verify that we are on a vulnerable machine by checking the PolKit version. Then, we verify that the user ID is greater than the maximum signed 32-bit integer value (2147483647).
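The user ID check comes down to a single comparison against INT_MAX. A minimal sketch, in which the function name `uid_exceeds_int_max` is a hypothetical helper and not part of any tool mentioned here:

```shell
#!/bin/bash
# INT_MAX for a signed 32-bit integer; the flaw concerns UIDs above this value.
INT_MAX=2147483647

# Succeeds when the given UID is greater than INT_MAX.
uid_exceeds_int_max() {
    [ "$1" -gt "$INT_MAX" ]
}

current_uid="$(id -u)"
if uid_exceeds_int_max "$current_uid"; then
    echo "UID $current_uid is greater than INT_MAX ($INT_MAX)"
else
    echo "UID $current_uid is within the signed 32-bit range"
fi
```

Bash evaluates `-gt` with 64-bit integers, so values above the 32-bit range compare correctly.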

Now that we know we are on a vulnerable machine, we can leverage this flaw by using another pre-installed tool, Systemctl, which relies on PolKit to enforce its permission policy and has the ability to execute arbitrary code. If you take a closer look at CVE-2018-19788, you will find that Systemctl is impacted by the vulnerability. Systemctl is one of the utilities of Systemd, the system and service manager that is becoming the new foundation for building with Linux.

Using Systemctl, we can create a new service that executes our malicious command in the root context. Because of the flaw in PolKit, we can bypass the permission checks and run Systemctl operations. Let’s take a look at how we can do that.

Bash script content:

#!/bin/bash
cat <<EOF >> /tmp/polKitVuln.service
[Unit]
Description= Abusing PolKit Vulnerability

[Service]
ExecStart=/bin/bash -c 'cat /etc/sudoers > /tmp/sudoersList.txt'
Restart=on-failure
RuntimeDirectoryMode=0755

[Install]
WantedBy=multi-user.target
Alias= polKitVuln.service
EOF

systemctl enable /tmp/polKitVuln.service
systemctl start polKitVuln.service

First, we define a new service and write the required information to “/tmp/polKitVuln.service”. The ExecStart directive contains our command, which reads the sudoers file and copies its content to a shared folder that unprivileged users can access. The sudoers file is one of the most important files in the system, as it contains the privilege information for the users and groups of the machine. In the last part of the script, we call the systemctl tool to enable and start our new service.

Execute the script:

Notice the errors about PolKit failing to handle the uid field. Because the exploitation copied the sudoers file, we can now read its content.

With this vulnerability, attackers can bypass permission checks and gain root access to your environment.

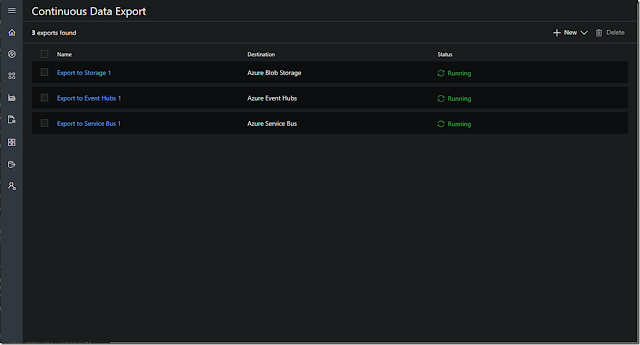
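From a defender's point of view, enabling a unit by path leaves a trace: systemctl links the unit file into the systemd configuration directory, so a symlink that points into a world-writable location such as /tmp is worth investigating. The sketch below is one way to look for such links. The function name `find_units_linked_from` is hypothetical, and the default directory and prefix are assumptions that may need adjusting for your system.

```shell
#!/bin/bash
# Sketch: list symlinks in a systemd unit directory whose target lies
# under a given prefix (defaults are assumptions: /etc/systemd/system, /tmp).
find_units_linked_from() {
    local unit_dir="${1:-/etc/systemd/system}" prefix="${2:-/tmp}"
    local link target
    [ -d "$unit_dir" ] || return 0
    find "$unit_dir" -type l 2>/dev/null | while IFS= read -r link; do
        target="$(readlink "$link")"
        case "$target" in
            # Report links whose target starts with the prefix directory.
            "${prefix%/}"/*) printf '%s\n' "$link" ;;
        esac
    done
}

find_units_linked_from /etc/systemd/system /tmp
```

An empty result does not prove the machine is clean; an attacker can remove the link or place the unit file elsewhere.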

Protect against and respond to threats with Azure Security Center

Azure Security Center can help detect threats, such as the PolKit vulnerability, and help you quickly mitigate these risks. Azure Security Center consolidates your security alerts into a single dashboard, making it easier for you to see the threats in your environment and prioritize your response to threats. Each alert gives you a detailed description of the incident as well as steps on how to remediate the issue.

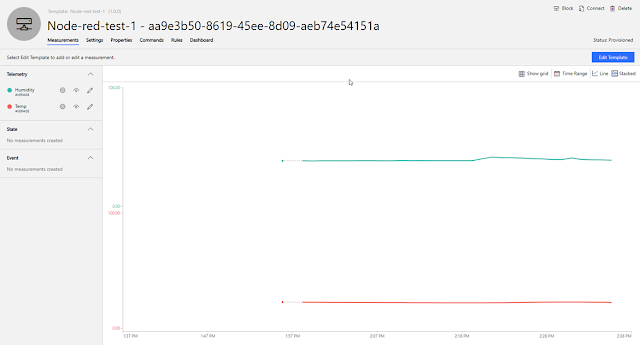

While investigating the impact on hosts protected by Azure Security Center, we can determine how frequently machines are under attack and, using behavioral detection techniques, inform customers when they have been attacked. Below is the security alert based on our previous activity, as you can see it in Security Center.

In addition, Azure Security Center provides a set of steps that enable customers to quickly remediate the problem:

◈ System administrators should not allow negative user IDs or user IDs greater than 2147483646.

◈ Verify the user ID maximum and minimum values under “/etc/login.defs”.

◈ Upgrade your policykit package proactively through your package manager.
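The first two recommendations can be checked with a short audit script. This is a sketch under stated assumptions: the function names `list_out_of_range_uids` and `configured_uid_limit` are hypothetical, and the script assumes the standard colon-separated /etc/passwd layout and the usual `UID_MIN` and `UID_MAX` keys in /etc/login.defs.

```shell
#!/bin/bash
# INT_MAX for a signed 32-bit integer.
INT_MAX=2147483647

# Print "name uid" for accounts with a negative UID or a UID above INT_MAX.
list_out_of_range_uids() {
    local passwd_file="${1:-/etc/passwd}"
    awk -F: -v max="$INT_MAX" \
        '$3 + 0 > max + 0 || $3 + 0 < 0 { print $1, $3 }' "$passwd_file"
}

# Print the value of a key such as UID_MIN or UID_MAX from login.defs.
configured_uid_limit() {
    local key="$1" defs_file="${2:-/etc/login.defs}"
    awk -v key="$key" '$1 == key { print $2 }' "$defs_file"
}

if [ -r /etc/passwd ]; then
    list_out_of_range_uids
fi
if [ -r /etc/login.defs ]; then
    echo "UID_MIN=$(configured_uid_limit UID_MIN) UID_MAX=$(configured_uid_limit UID_MAX)"
fi
```

The script only reads local account files. Accounts served by a directory service would need a separate check, for example through `getent passwd`.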