Do you know how to develop and source control your Microsoft Azure Stream Analytics (ASA) jobs? Do you know how to set up automated processes to build, test, and deploy these jobs to multiple environments? Stream Analytics Visual Studio tools, together with Azure Pipelines, provide an integrated environment for all of these scenarios. This article shows you how and points you to the right places to get started with these tools.

In the past it was difficult to use Azure Data Lake Storage Gen1 as the output sink for ASA jobs, and to set up the related automated CI/CD process, because the OAuth model did not allow automated authentication for this kind of storage. The tools being released in January 2019 support Managed Identities for the Azure Data Lake Storage Gen1 output sink and now enable this important scenario.

This article covers the end-to-end development and CI/CD process using Stream Analytics Visual Studio tools, the Stream Analytics CI.CD NuGet package, and Azure Pipelines. Visual Studio 2019, 2017, and 2015 are currently supported. If you haven’t tried the tools, follow the installation instructions to get started!

Job development

Let’s get started by creating a job. Stream Analytics Visual Studio tools allow you to manage your jobs as projects. Each project consists of an ASA query script, a job configuration, and several input and output configurations. IntelliSense features such as error markers, auto completion, and syntax highlighting make query editing efficient.

If you have existing jobs and want to develop them in Visual Studio or add source control, export them to local projects first. You can do this from the Server Explorer context menu for a given ASA job. You can also use this feature to copy a job across regions without authoring everything from scratch.

Developing in Visual Studio also provides you with the best native authoring and debugging experience when you are writing JavaScript user-defined functions in cloud jobs or C# user-defined functions in Edge jobs.

Source control

When created as projects, the query and other artifacts sit on the local disk of your development computer. You can use Azure DevOps (formerly Visual Studio Team Services) for version control, or commit the code directly to any repository you want. This lets you save different versions of the .asaql query, as well as the inputs, outputs, and job configurations, and easily revert to previous versions when needed.

Testing locally

During development, use local testing to iteratively run and fix code with local sample data or live streaming input data. Running locally starts the query in seconds and makes the testing cycle much shorter.

Testing in the cloud

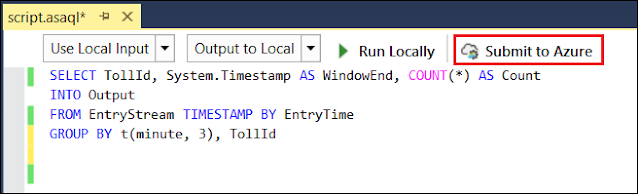

Once the query works well on your local machine, it’s time to submit it to the cloud for performance and scalability testing. Select “Submit to Azure” in the query editor to upload the query and start the job. You can then view the job metrics and job flow diagram from within Visual Studio.

Set up CI/CD pipelines

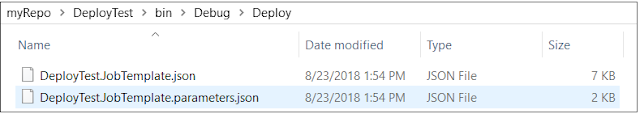

When your query testing is complete, the next step is to set up your CI/CD pipelines for production environments. ASA jobs are deployed as Azure resources using Azure Resource Manager (ARM) templates. Each job is defined by an ARM template definition file and a parameter file.

There are two ways to generate the two files mentioned above:

1. In Visual Studio, right-click your project name and select “Build.”

2. On an arbitrary build machine, install the Stream Analytics CI.CD NuGet package and run the “build” command, which is supported only on Windows at this time. This approach is needed for an automated build process.

Performing a “build” generates the two files under the “bin” folder and lets you save them wherever you want.
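Before deploying, it can help to confirm that the two generated files agree with each other. The following Python sketch is one possible check, not part of the Stream Analytics tooling: it assumes the standard ARM layout in which both files carry a top-level `parameters` object. The function name `check_parameters` and the parameter names are hypothetical, and the inline dictionaries stand in for the files you would load from the “bin” folder.

```python
def check_parameters(template: dict, parameter_file: dict) -> list:
    """List mismatches between an ARM template and its parameter file."""
    declared = template.get("parameters", {})
    supplied = parameter_file.get("parameters", {})
    problems = []
    # A supplied value the template does not declare is rejected at deployment.
    for name in supplied:
        if name not in declared:
            problems.append(f"{name}: supplied but not declared in the template")
    # A declared parameter needs either a default or a supplied value.
    for name, spec in declared.items():
        if name not in supplied and "defaultValue" not in spec:
            problems.append(f"{name}: declared without a default and no value supplied")
    return problems


# Hypothetical stand-ins for the generated template and parameter files.
template = {
    "contentVersion": "1.0.0.0",
    "parameters": {
        "StreamAnalyticsJobName": {"type": "string"},
        "Location": {"type": "string", "defaultValue": "West US"},
        "Output_AccountName": {"type": "string"},
    },
    "resources": [],
}
parameter_file = {
    "contentVersion": "1.0.0.0",
    "parameters": {
        "StreamAnalyticsJobName": {"value": "asa-job-dev"},
        "Unexpected": {"value": "x"},
    },
}

problems = check_parameters(template, parameter_file)
for problem in problems:
    print(problem)
```

In a real pipeline you would read both dictionaries with `json.load` and fail the build step when the returned list is not empty.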

The default values in the parameter file come from the input, output, and job configuration files in your Visual Studio project. To deploy to multiple environments, use a simple PowerShell script to replace the values in the parameter file and generate a separate version of the file for each target environment. In this way you can deploy into dev, test, and eventually production environments.
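The value replacement described above can be sketched as follows. The article suggests PowerShell; this illustration uses Python instead, and it is a minimal sketch rather than the tooling's own method. It assumes the standard ARM parameter file layout (`parameters` mapping each name to an object with a `value`). The function `render_parameters`, the parameter names, and the environment names are all hypothetical.

```python
import copy
import json


def render_parameters(base: dict, overrides: dict) -> dict:
    """Return a copy of an ARM parameter document with selected values replaced."""
    result = copy.deepcopy(base)
    params = result["parameters"]
    for name, value in overrides.items():
        if name not in params:
            # Fail loudly on typos instead of silently adding a new parameter.
            raise KeyError(f"unknown parameter: {name}")
        params[name] = {"value": value}
    return result


# Stand-in for the parameter file produced by the build; names are hypothetical.
base_parameters = {
    "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentParameters.json#",
    "contentVersion": "1.0.0.0",
    "parameters": {
        "StreamAnalyticsJobName": {"value": "asa-job-dev"},
        "Output_DataLakeAccountName": {"value": "adls-dev"},
    },
}

# Hypothetical per-environment overrides.
environments = {
    "test": {"StreamAnalyticsJobName": "asa-job-test",
             "Output_DataLakeAccountName": "adls-test"},
    "prod": {"StreamAnalyticsJobName": "asa-job-prod",
             "Output_DataLakeAccountName": "adls-prod"},
}

rendered = {env: render_parameters(base_parameters, values)
            for env, values in environments.items()}

for env, document in rendered.items():
    # In a pipeline, write each document to its own file instead of printing it.
    print(env, json.dumps(document["parameters"]))
```

Working on a deep copy keeps the build output untouched, so the same base file can be reused for every environment, and rejecting unknown names catches a misspelled override before it reaches a deployment.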

As stated above, the Stream Analytics CI.CD NuGet package can be used on its own or within a CI/CD system such as Azure Pipelines to automate the build and test process of your Stream Analytics Visual Studio project.
