Azure Machine Learning service contains many advanced capabilities designed to simplify and accelerate the process of building, training, and deploying machine learning models. Automated machine learning enables data scientists of all skill levels to identify suitable algorithms and hyperparameters faster. Support for popular open-source frameworks such as PyTorch, TensorFlow, and scikit-learn allows data scientists to use the tools of their choice. DevOps capabilities for machine learning further improve productivity by enabling experiment tracking and management of models deployed in the cloud and on the edge. All these capabilities can be accessed from any Python environment running anywhere, including data scientists’ workstations.

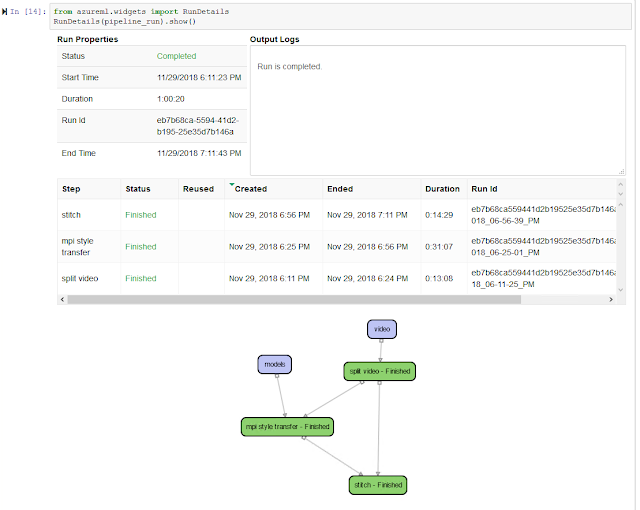

We built Azure Machine Learning service working closely with our customers, thousands of whom are using it every day to improve customer service, build better products and optimize their operations. Below are two such customer examples.

TAL, a 150-year-old leading life insurance company in Australia, is embracing AI to improve quality assurance and customer experience. Traditionally, TAL’s quality assurance team could only review a randomly selected 2-3 percent of cases. Using Azure Machine Learning service, it is now able to review 100 percent of cases.

“Azure Machine Learning regularly lets TAL’s data scientists deploy models within hours rather than weeks or months – delivering faster outcomes and the opportunity to roll out many more models than was previously possible. There is nothing on the market that matches Azure Machine Learning in this regard.”

Elastacloud, a London-based data science consultancy, uses Azure Machine Learning service to build and run the Elastacloud Energy BSUoS Forecast service, an AI-powered solution that helps alternative energy providers to better predict demand and reduce costs.

“With Azure Machine Learning, we support BSUoS Forecast with no virtual machines and nothing to manage. We built a highly automated service that hides its complexity inside serverless boxes.”

Azure Machine Learning service design principles

To simplify and accelerate machine learning, Azure Machine Learning has been built on the following design principles that are detailed in the rest of the blog.

◈ Enable data scientists to use a familiar and rich set of data science tools

◈ Simplify the use of popular machine learning and deep learning frameworks

◈ Accelerate time to value by offering end-to-end machine learning lifecycle capabilities

Familiar data science tools

Data scientists expect to use the full Python ecosystem of libraries and frameworks and to be able to train locally on their laptop or workstation. A wide variety of tools are used across the industry, but broadly they fall into three groups: command-line interfaces, editors and IDEs, and notebooks. Azure Machine Learning service has been designed to support all of these. Its Python SDK is accessible from any Python environment, from IDEs like Visual Studio Code (VS Code) or PyCharm, and from notebooks such as Jupyter and Azure Databricks. Let’s look deeper at the Azure Machine Learning service integration with a couple of these tools.

Jupyter Notebooks are a popular development environment for data scientists working in Python. Azure Machine Learning service provides robust support for both local and hosted notebooks (such as Azure Notebooks) and provides built-in widgets that allow data scientists to monitor the progress of training jobs visually in near real-time as shown in the image below. For customers who do machine learning in Azure Databricks, the Azure Databricks notebooks can be used just as well.

Visual Studio Code is a lightweight but powerful source code editor that runs on your desktop and is available for Windows, macOS, and Linux. The Python extension for Visual Studio Code combines the power of Jupyter Notebooks with the power of Visual Studio Code. This allows data scientists to experiment incrementally in a “notebook style” while also getting all the productivity Visual Studio Code has to offer, such as IntelliSense, a built-in debugger, and Live Share, as shown in the image below.

Support for popular frameworks

Frameworks are the most important libraries that data scientists use to build their models. Azure Machine Learning service supports all Python-based frameworks. The most popular ones, scikit-learn, PyTorch, and TensorFlow, are each exposed through an Estimator class that simplifies submitting training code to remote compute, whether it runs on a single node or as distributed training across GPU clusters. This support is not limited to machine learning frameworks: any package from the vast Python ecosystem can be used.

We realize that customers often face several challenges when they use multiple frameworks to build models and deploy them to a variety of hardware and OS platforms. The frameworks were not designed to be used interchangeably, and each requires specific optimizations for hardware and OS platforms. To address these problems, Microsoft has worked with industry leaders such as Facebook and AWS, as well as hardware companies, to develop the Open Neural Network Exchange (ONNX) specification for describing machine learning models in an open standard format. Azure Machine Learning service supports ONNX and enables customers to deploy, manage, and monitor ONNX models easily. Additionally, to provide a consistent software platform for running ONNX models across cloud and edge, we announced today that we are open sourcing the ONNX Runtime. We welcome you to join the community and contribute to the ONNX project.

End-to-end machine learning lifecycle

Azure Machine Learning integrates with other Azure services to provide end-to-end capabilities for the machine learning lifecycle, including data preparation, experimentation, model training, model management, deployment, and monitoring.

Data preparation

Customers can use Azure’s rich data platform capabilities, such as Azure Databricks, to manage and prepare their data for machine learning. The DataPrep SDK is available as a companion to the Azure Machine Learning Python SDK to simplify data transformations.

Training

Azure Machine Learning service provides seamless distributed compute capabilities that allow data scientists to scale out training from their local laptop or workstation to the cloud. The compute is on-demand. Users only pay for compute time and don’t have to manage and maintain GPU and CPU clusters.

Data professionals who are already invested in Apache Spark can train on Azure Databricks clusters. The Azure Machine Learning service SDK is integrated into the Azure Databricks environment and extends it with experimentation, model deployment, and management capabilities.

Experimentation

Data scientists create their model through a process of experimentation, iterating over their data and training code multiple times until they get the desired results from the model. Azure Machine Learning service provides powerful capabilities to improve the productivity of data scientists while also enhancing governance, repeatability, and collaboration during the model development process.

1. Using automated machine learning, data scientists can point to a dataset and a scenario (regression, classification, or forecasting), and automated machine learning uses advanced techniques to propose a new model by doing feature engineering, selecting the algorithm, and sweeping hyperparameters.

2. Hyperparameter tuning of existing models enables fast and intelligent exploration of hyperparameters. Early termination of non-performant training jobs saves compute, and the broader search helps improve model accuracy.

3. Machine Learning pipelines allow data scientists to modularize their model training into discrete steps such as data movement, data transforms, feature extraction, training, and evaluation. Machine Learning pipelines are a mechanism for automating, sharing, and reproducing models. They also provide performance gains by caching intermediate outputs as the data scientist iterates in the model development inner loop.

4. Finally, run history captures each training run, along with the model performance and related metrics. The service keeps track of the code, compute, and datasets used in training the model. The data scientist can compare runs and then select the “best” model for their problem statement. Once selected, the model is registered to the model registry, which provides auditability, including provenance, of the models in production.
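The sweep-and-compare loop described in items 2 and 4 can be illustrated with a minimal, self-contained sketch. This is not the Azure Machine Learning SDK: `train_step`, `sweep`, `best_run`, and the slack-based stopping rule are hypothetical stand-ins, and a toy objective takes the place of real training. The sketch only shows the idea that a run trailing the best result seen so far by more than a tolerance is stopped early, and that the recorded history is then used to pick the best run.

```python
def train_step(lr, step):
    """Toy stand-in for training: the metric rises with steps and peaks near lr = 0.1."""
    quality = 1.0 - min(abs(lr - 0.1) / 0.1, 1.0)  # 1.0 at lr = 0.1, 0.0 when far away
    return quality * (1.0 - 0.5 ** step)


def sweep(learning_rates, max_steps=5, slack=0.2):
    """Run each configuration; stop a run that trails the best metric by more than `slack`."""
    history = []
    best_at_step = {}  # best metric seen so far at each step, across all runs
    for run_id, lr in enumerate(learning_rates):
        metric, steps_run, status = 0.0, 0, "completed"
        for step in range(1, max_steps + 1):
            metric = train_step(lr, step)
            steps_run = step
            best = best_at_step.get(step, metric)
            if metric < best * (1.0 - slack):
                status = "terminated_early"  # hypothetical early-termination rule
                break
            best_at_step[step] = max(best, metric)
        history.append({"run_id": run_id, "lr": lr, "metric": metric,
                        "steps": steps_run, "status": status})
    return history


def best_run(history):
    """Select the run with the highest final metric from the recorded history."""
    return max(history, key=lambda run: run["metric"])


history = sweep([0.1, 0.5, 0.11, 0.01])
for run in history:
    print(run)
print("best:", best_run(history))
```

In this toy setup, the configurations far from the optimum are stopped after a single step, so most of the compute budget goes to the promising ones. A real service would apply its own termination policies and persist the history rather than keep it in a list.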

Deployment, model management, and monitoring

Once data scientists complete model development, the models still have to be put into production, managed, and monitored. The Azure Machine Learning service model registry keeps track of models and their version history, along with the lineage and artifacts of each model.
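To make the idea of version history and lineage concrete, here is a small in-memory sketch. It is an illustration only, not the service's registry API: `ModelRegistry`, `ModelVersion`, the model name `demo-model`, and the lineage keys are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class ModelVersion:
    name: str
    version: int
    artifact_path: str
    lineage: Dict[str, str]  # hypothetical keys, e.g. run id and dataset


class ModelRegistry:
    """Toy registry: every registration under a name creates the next version."""

    def __init__(self):
        self._models: Dict[str, List[ModelVersion]] = {}

    def register(self, name, artifact_path, lineage):
        versions = self._models.setdefault(name, [])
        entry = ModelVersion(name, len(versions) + 1, artifact_path, dict(lineage))
        versions.append(entry)
        return entry

    def latest(self, name):
        return self._models[name][-1]

    def history(self, name):
        return list(self._models[name])


registry = ModelRegistry()
registry.register("demo-model", "outputs/model_a.pkl", {"run_id": "run-1", "dataset": "v1"})
registry.register("demo-model", "outputs/model_b.pkl", {"run_id": "run-7", "dataset": "v2"})

print(registry.latest("demo-model"))
print([v.version for v in registry.history("demo-model")])
```

Keeping the lineage alongside each version is what lets someone later answer which run and which data produced the model currently in production.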

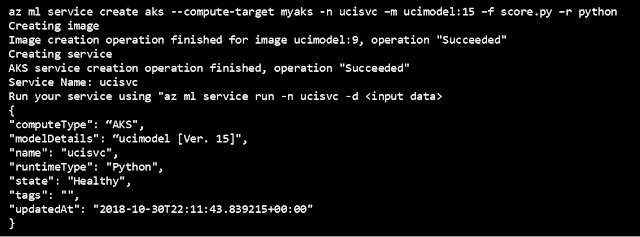

Azure Machine Learning service can deploy to both the cloud and the edge, with real-time or batch scoring depending on the customer’s needs. In the cloud, Azure Machine Learning service will provision, load balance, and scale a Kubernetes cluster using Azure Kubernetes Service (AKS), or attach to the customer’s own AKS cluster. This allows multiple models to be deployed into production, and the cluster auto-scales with the load. Model management activities can be performed with the Python SDK, the UX, the command-line interface (CLI), or the REST API, which are callable from Azure DevOps. These capabilities fully integrate the model lifecycle with the rest of the customer’s app lifecycle.

Models in the registry can also be deployed to edge devices through integration with the Azure IoT Edge service.

Once the model is in production, the service collects both application and model telemetry that allows the model to be monitored in production for operational and model correctness. The data captured during inferencing is presented back to the data scientists and this information can be used to determine model performance, data drift, and model decay.
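One way to reason about data drift is to compare the distribution of a feature at training time with the distribution seen during inferencing. The sketch below uses the population stability index (PSI) as an example measure. This is an assumption made for illustration: the source does not say which drift measure the service uses. The function name `psi`, the bin count, and the sample data are hypothetical.

```python
import math


def psi(expected, actual, bins=10):
    """Population stability index between a baseline sample and a new sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def fractions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]         # feature values seen in training
similar = [(i + 0.5) / 100 for i in range(100)]  # inference data, same distribution
shifted = [0.5 + i / 200 for i in range(100)]    # inference data, shifted upward

print("similar:", psi(baseline, similar))
print("shifted:", psi(baseline, shifted))
```

A frequently quoted rule of thumb treats a PSI above roughly 0.2 as a sign of meaningful shift, but any threshold should be validated against the model and data at hand.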

For extremely fast and low-cost inferencing, Azure Machine Learning service offers hardware-accelerated models (in preview) that provide vision model acceleration through FPGAs. This capability is exclusive to Azure Machine Learning service and offers class-leading latency, as well as cost-per-transaction benefits for offline processing jobs.
