A new tutorial shows how to connect Power BI and Azure Data Services to share data and unlock new insights. Business analysts who use Power BI dataflows can now share data with data engineers and data scientists, who can use Azure Data Services, including Azure Databricks, Azure Machine Learning, Azure SQL Data Warehouse, and Azure Data Factory, for advanced analytics and AI.

With the recently announced preview of Power BI dataflows, Power BI has enabled self-service data prep for business analysts. Power BI dataflows can ingest data from a large array of transactional and observational data sources, then cleanse, transform, enrich, and schematize that data and store the result. Dataflows are reusable, can be refreshed automatically, and can be daisy-chained to create powerful data preparation pipelines. Power BI now supports storing dataflows, including both the data and the dataflow definition, in Azure Data Lake Storage (ADLS) Gen2. By storing dataflows in ADLS Gen2, business analysts using Power BI can collaborate with data engineers and data scientists using Azure Data Services.

Data silos inhibit data sharing

The ability for organizations to extract intelligence from business data provides a key competitive advantage; however, attempting this today can be time-consuming and costly. To extract intelligence and create value from data, an application must be able to access the data and understand its structure and meaning. Data often resides in silos that are application- or platform-specific, creating a major data integration and data preparation challenge.

Consistent data and metadata formats enable collaboration

By adopting a consistent way to store and describe data based on the Common Data Model (CDM), Power BI, Azure Data Services, and other applications can share and interoperate over data more effectively. Power BI dataflows are stored in ADLS Gen2 as CDM folders. A CDM folder contains a metadata file that describes the entities in the folder, with their attributes and data types, and lists the data files for each entity. CDM also defines a set of standard business entities that carry additional, richer semantics. Mapping the data in a CDM folder to standard CDM entities further facilitates interoperability and data sharing. Microsoft has joined with SAP and Adobe to form the Open Data Initiative, which encourages the definition and adoption of standard entities across a range of domains so that applications and tools can more easily share data through an enterprise data lake.
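To make the idea of a self-describing CDM folder concrete, here is a minimal Python sketch that reads a folder's metadata file and lists each entity's attributes, data types, and data files. It assumes the metadata file is the JSON `model.json` used by Power BI dataflow CDM folders and relies only on a simplified subset of its fields (`entities`, `attributes`, `dataType`, `partitions`, `location`). The sample content, storage account, and path are hypothetical; the full format is defined by the CDM folder specification.

```python
import json
from dataclasses import dataclass

# A trimmed-down, hypothetical model.json for one entity. Field names follow the
# CDM folder metadata format as we understand it; real files carry more properties.
SAMPLE_MODEL_JSON = """
{
  "name": "WideWorldImporters",
  "version": "1.0",
  "entities": [
    {
      "$type": "LocalEntity",
      "name": "Customer",
      "attributes": [
        {"name": "CustomerID", "dataType": "int64"},
        {"name": "CustomerName", "dataType": "string"},
        {"name": "CreditLimit", "dataType": "decimal"}
      ],
      "partitions": [
        {"name": "Part001",
         "location": "https://example.dfs.core.windows.net/powerbi/wwi/Customer/Customer.csv"}
      ]
    }
  ]
}
"""

@dataclass
class EntityInfo:
    name: str
    schema: list[tuple[str, str]]   # (attribute name, CDM data type)
    data_files: list[str]           # partition locations

def read_entities(model_text: str) -> dict[str, EntityInfo]:
    """Index every entity described in a CDM folder's metadata file by name."""
    model = json.loads(model_text)
    entities = {}
    for entity in model.get("entities", []):
        schema = [(a["name"], a["dataType"]) for a in entity.get("attributes", [])]
        files = [p["location"] for p in entity.get("partitions", [])]
        entities[entity["name"]] = EntityInfo(entity["name"], schema, files)
    return entities

if __name__ == "__main__":
    for name, info in read_entities(SAMPLE_MODEL_JSON).items():
        print(name, info.schema, info.data_files)
```

Because the schema travels with the data, a consumer such as Azure Databricks can apply it when reading the data files instead of inferring column names and types.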

By adopting these data storage conventions, data ingested by Power BI, with its already powerful and easy-to-use data prep features, can be further enriched and leveraged in Azure. Similarly, data in Azure can be exported into CDM folders and shared with Power BI.
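Exporting data from Azure into a CDM folder amounts to writing the data files plus a metadata file that describes them. The sketch below illustrates this under stated assumptions: a local directory stands in for an ADLS Gen2 folder, `write_cdm_folder` is a hypothetical helper (not part of any Microsoft library), and the generated `model.json` carries only a minimal subset of the properties a real CDM folder would include.

```python
import csv
import json
import tempfile
from pathlib import Path

def write_cdm_folder(folder: Path, model_name: str, entity_name: str,
                     attributes: list[tuple[str, str]], rows: list[tuple]) -> Path:
    """Hypothetical helper: write one entity as a CSV data file plus a minimal model.json."""
    folder.mkdir(parents=True, exist_ok=True)

    # Data file: the schema is described in model.json, so no header row is written.
    data_file = folder / f"{entity_name}.csv"
    with data_file.open("w", newline="", encoding="utf-8") as f:
        csv.writer(f).writerows(rows)

    # Metadata file: describes the entity, its typed attributes, and where its data lives.
    model = {
        "name": model_name,
        "version": "1.0",
        "entities": [{
            "$type": "LocalEntity",
            "name": entity_name,
            "attributes": [{"name": n, "dataType": t} for n, t in attributes],
            "partitions": [{"name": entity_name, "location": data_file.resolve().as_uri()}],
        }],
    }
    model_path = folder / "model.json"
    model_path.write_text(json.dumps(model, indent=2), encoding="utf-8")
    return model_path

if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = write_cdm_folder(Path(tmp) / "ChurnScores", "ChurnScores", "CustomerScore",
                                [("CustomerID", "int64"), ("ChurnProbability", "double")],
                                [(1, 0.12), (2, 0.87)])
        print(path.read_text(encoding="utf-8"))
```

Writing the metadata alongside the data is what lets Power BI or another CDM-aware tool pick up the new folder without a separate schema definition.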

Azure Data Services enable advanced analytics on shared data

Azure Data Services enable advanced analytics that let you maximize the business value of data stored in CDM folders in the data lake. Data engineers and data scientists can use Azure Databricks and Azure Data Factory dataflows to cleanse and reshape data, ensuring it is accurate and complete. Data from different sources and in different formats can be normalized, reformatted, and merged to optimize it for analytics processing. Data scientists can use Azure Machine Learning to define and train machine learning models on the data, enabling predictions and recommendations that can be incorporated into BI dashboards and reports and used in production applications. Data engineers can use Azure Data Factory to combine data from CDM folders with data from across the enterprise to create a historically accurate, curated, enterprise-wide view of data in Azure SQL Data Warehouse. At any point, data processed by any Azure Data Service can be written back to new CDM folders, making the insights created in Azure accessible to Power BI and other CDM-enabled apps and tools.
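In the tutorial this kind of preparation runs in Azure Databricks. As a language-neutral illustration of what "normalized, reformatted, and merged" means in practice, the following plain-Python sketch (not Spark, and not the tutorial's code) maps two hypothetical sources with different column names, value types, and date formats onto one schema, drops incomplete rows, and merges the result.

```python
from datetime import datetime
from typing import Optional

# Two hypothetical sources describing orders with different column names,
# value types, and date formats.
DATAFLOW_ROWS = [
    {"CustomerID": "1", "OrderDate": "2018-11-05", "Amount": "120.50"},
    {"CustomerID": "2", "OrderDate": "", "Amount": "75.00"},   # missing date: incomplete
]
LEGACY_ROWS = [
    {"cust_id": 3, "order_dt": "05/11/2018", "amt": 42},        # day/month/year
]

def to_record(customer_id, order_date: str, amount, date_format: str) -> Optional[dict]:
    """Map raw values onto one common schema; return None when required data is missing."""
    if not order_date:
        return None
    return {
        "customer_id": int(customer_id),
        "order_date": datetime.strptime(order_date, date_format).date(),
        "amount": round(float(amount), 2),
    }

def merge_sources() -> list[dict]:
    records = [to_record(r["CustomerID"], r["OrderDate"], r["Amount"], "%Y-%m-%d")
               for r in DATAFLOW_ROWS]
    records += [to_record(r["cust_id"], r["order_dt"], r["amt"], "%d/%m/%Y")
                for r in LEGACY_ROWS]
    # Keep only complete records and sort them so the output is deterministic.
    complete = [r for r in records if r is not None]
    return sorted(complete, key=lambda r: (r["order_date"], r["customer_id"]))

if __name__ == "__main__":
    for record in merge_sources():
        print(record)
```

Dropping incomplete rows is only one possible policy; a real pipeline might instead route them to a quarantine table for review.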

New tutorial explores data sharing between Power BI and Azure

A tutorial is now available to help you understand how sharing data between Power BI and Azure using CDM folders can break down data silos and unlock new insights. The tutorial, with sample code, shows how to integrate data from Power BI into a modern data warehousing scenario in Azure. It lets you explore the flows highlighted in green in the diagram below.

In the tutorial, Power BI dataflows are used to ingest key analytics data from the Wide World Importers operational database and store the extracted data with its schema in a CDM folder in ADLS Gen2. You then connect to the CDM folder and process the data using Azure Databricks, formatting and preparing it for later steps, then writing it back to the lake in a new CDM folder. This prepared CDM folder is used by Azure Machine Learning to train and publish an ML model that can be accessed from Power BI or other applications to make real-time predictions. The prepared data is also loaded into staging tables in an Azure SQL Data Warehouse, where it is transformed into a dimensional model.

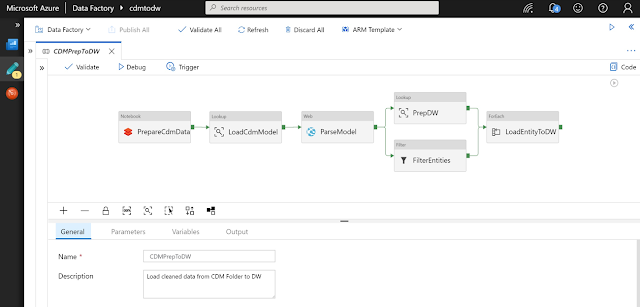
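The final tutorial step, turning staged rows into a dimensional model, typically means assigning surrogate keys to dimension members and rewriting fact rows to reference those keys. The tutorial performs this inside Azure SQL Data Warehouse; the Python sketch below only illustrates the idea, and the `Dimension` class, the staging column names, and the sample values are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Dimension:
    """Hypothetical dimension table: maps business keys to integer surrogate keys."""
    rows: dict = field(default_factory=dict)  # business key -> (surrogate key, attributes)

    def key_for(self, business_key, attributes: dict) -> int:
        # First sighting of a business key gets the next surrogate key;
        # later sightings reuse it and keep the original attributes.
        if business_key not in self.rows:
            self.rows[business_key] = (len(self.rows) + 1, attributes)
        return self.rows[business_key][0]

def load_star_schema(staging_sales: list[dict]) -> tuple[Dimension, list[dict]]:
    """Split staged sales rows into a customer dimension and a sales fact table."""
    customers = Dimension()
    facts = []
    for row in staging_sales:
        customer_key = customers.key_for(row["CustomerID"], {"name": row["CustomerName"]})
        facts.append({
            "customer_key": customer_key,  # surrogate key, not the source system's ID
            "quantity": row["Quantity"],
            "amount": row["Quantity"] * row["UnitPrice"],
        })
    return customers, facts

if __name__ == "__main__":
    staging = [
        {"CustomerID": 801, "CustomerName": "Customer A", "Quantity": 2, "UnitPrice": 10.0},
        {"CustomerID": 802, "CustomerName": "Customer B", "Quantity": 1, "UnitPrice": 5.0},
        {"CustomerID": 801, "CustomerName": "Customer A", "Quantity": 3, "UnitPrice": 10.0},
    ]
    dim, facts = load_star_schema(staging)
    print(dim.rows)
    print(facts)
```

This sketch keeps the first attributes seen for each business key. Preserving history as attributes change (a slowly changing dimension) would need versioned dimension rows with effective dates.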

Azure Data Factory is used to orchestrate the flow of data between the services, as well as to manage and monitor the processing at runtime. By working through the tutorial, you'll see first-hand how the metadata stored in a CDM folder makes it easier for each service to understand and share data.
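At its core, orchestration means running each step only after the steps it depends on have succeeded. The sketch below models that dependency ordering with Python's `graphlib.TopologicalSorter` (Python 3.9+). It is not the Azure Data Factory API, and the step names are hypothetical labels for the tutorial's stages.

```python
from graphlib import TopologicalSorter

# Hypothetical step names; each step maps to the set of steps it depends on.
PIPELINE = {
    "ingest_dataflow": set(),
    "prepare_in_databricks": {"ingest_dataflow"},
    "train_ml_model": {"prepare_in_databricks"},
    "load_staging_tables": {"prepare_in_databricks"},
    "build_dimensional_model": {"load_staging_tables"},
}

def run_pipeline(graph: dict[str, set[str]], run_step) -> list[str]:
    """Run each step after its dependencies finish; return the execution order.

    If run_step raises, the exception propagates and dependent steps never start.
    """
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    order = []
    while sorter.is_active():
        ready = sorter.get_ready()  # every step whose dependencies are all done
        for step in ready:          # run sequentially here; an orchestrator could parallelize
            run_step(step)
            order.append(step)
            sorter.done(step)
    return order

if __name__ == "__main__":
    run_pipeline(PIPELINE, lambda step: print("running", step))
```

In Azure Data Factory itself, these relationships are declared as dependencies between a pipeline's activities, and steps that become ready together (here, model training and the staging load) can run in parallel.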

Sample code accelerates your data integration projects

The tutorial includes sample code and instructions for the whole scenario. The samples include reusable libraries and code in C#, Python, and Scala, as well as reusable Azure Data Factory pipeline templates that you can use to integrate CDM folders into your own Azure Data Services projects.
