Cloud computing continues to transform the way businesses operate, innovate, and compete. Whether you’re just moving to the cloud or already have an established cloud footprint, you may have questions about how to pay for the services you need, estimate your costs, or optimize your spending. Azure provides a variety of resources and offers to answer these questions and help you get the best value at every stage of your cloud journey.

This blog post will show you how to approach and think about pricing throughout your cloud adoption journey. We will also give an example of how a hypothetical digital media company would approach their Azure pricing needs as they transition from evaluating and planning to setting up and running their cloud solutions. After reading this post, you will know more about how to select the best Azure pricing option for your business objectives and cloud needs.

Find guidance and resources to navigate Azure pricing options

If you are new to Azure or to cloud computing in general, you may want to learn the basics of how cloud services are priced and what options you have for paying for them. Azure offers pricing options to suit different needs and scenarios, from the free tier and pay-as-you-go to commitment-based offers and licensing benefits. Here’s a brief overview of the two options most relevant when you are getting started (commitment offers and benefits are covered later in this post):

Free tier: You can get started with Azure for free, and access over 25 services for 12 months, plus $200 credit to use in your first 30 days. You can also use some services for free, such as Azure App Service, Azure Functions, and Azure DevOps, with certain limits and conditions. The free tier is a great way to explore Azure and learn how it works, without any upfront costs or commitments.

Pay-as-you-go: You pay only for the services you use, based on the measured usage and the unit price of each service. For example, you can pay for the number of virtual machine (VM) hours, the amount of storage space, or the volume of data transferred. Pay-as-you-go is a flexible and scalable option that lets you adjust your usage and costs as your needs change.
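To make the pay-as-you-go model concrete, here is a minimal sketch that totals a month of metered usage as quantity times unit price for each meter. It is an illustration, not Azure's billing logic: the meter names and prices are hypothetical placeholders, and real rates vary by service, region, and tier.

```python
from dataclasses import dataclass


@dataclass
class MeteredUsage:
    """One billing meter for a month. Names and prices are hypothetical, not real Azure rates."""
    meter: str
    quantity: float    # measured usage, e.g. VM hours, GB-months stored, GB transferred
    unit_price: float  # hypothetical USD price per unit of that meter


def pay_as_you_go_total(usage: list[MeteredUsage]) -> float:
    """Pay-as-you-go: sum measured quantity times unit price across all meters."""
    return round(sum(item.quantity * item.unit_price for item in usage), 2)


# A hypothetical month: one VM running all month, some storage, some outbound data.
month = [
    MeteredUsage("VM hours", 730, 0.10),
    MeteredUsage("Storage (GB-month)", 500, 0.02),
    MeteredUsage("Data transfer out (GB)", 200, 0.08),
]
print(f"Estimated monthly bill: ${pay_as_you_go_total(month):.2f}")  # Estimated monthly bill: $99.00
```

Because each line item scales with its own measured quantity, halving the VM hours halves only that part of the bill, which is the flexibility pay-as-you-go offers.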

Estimate Azure project costs

If you have a new project to migrate to or build in Azure, you need an accurate and realistic estimate of your project costs to make an informed decision about moving forward. To help with this decision, Azure provides several tools and resources, such as:

TCO calculator: You can use the Total Cost of Ownership (TCO) calculator to estimate how much you can save by migrating your on-premises workloads to Azure. You can input your current infrastructure details, such as servers, storage, and network, and see a detailed comparison of the costs of running them on-premises versus on Azure.

Azure Migrate: You can use Azure Migrate to assess and plan your migration to Azure. You can discover and evaluate your on-premises servers, databases, and applications, and get recommendations on the best Azure services and sizing options for them. You can also get estimated costs and savings for your migration scenario and track your progress and readiness.

Azure Architecture Center: You can get guidance for architecting solutions on Azure using established patterns and practices. Reference architectures such as OpenAI Chatbots, Windows VM Deployment, and Analytics end-to-end with Azure Synapse include cost considerations.
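The comparison a TCO estimate produces comes down to totaling annual cost categories on each side and taking the difference. The sketch below shows only that arithmetic. The categories and dollar amounts are hypothetical, and the actual TCO calculator applies its own assumptions for items such as hardware, power, and labor.

```python
# Hypothetical annual costs in USD; categories and figures are illustrative only.
on_premises = {
    "hardware (amortized)": 40_000,
    "power and cooling": 8_000,
    "datacenter space": 6_000,
    "IT labor": 30_000,
    "software licenses": 16_000,
}
azure = {
    "compute": 52_000,
    "storage": 9_000,
    "networking": 4_000,
    "IT labor": 15_000,
}


def compare_tco(on_prem: dict[str, float], cloud: dict[str, float]) -> tuple[float, float]:
    """Return (annual savings in USD, savings as a percentage of on-premises cost)."""
    on_prem_total = sum(on_prem.values())
    cloud_total = sum(cloud.values())
    savings = on_prem_total - cloud_total
    return savings, 100 * savings / on_prem_total


savings, pct = compare_tco(on_premises, azure)
print(f"Annual savings: ${savings:,.0f} ({pct:.0f}%)")  # Annual savings: $20,000 (20%)
```

A negative result would mean the on-premises side is cheaper under these inputs, which is exactly the kind of finding an estimate should surface before a migration decision.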

Calculate costs of Azure products and services

If you are ready to deploy specific Azure services and you want to budget for them, you may want to consider the different pricing options and offers that are available for each service. Azure provides resources and guidance on how to budget for specific Azure services, such as:

Azure pricing calculator: Estimate your monthly costs based on your expected usage and configuration, such as region or virtual machine series.

Product pricing details pages: Find detailed pricing information for each Azure service on its pricing details page. You can see the pricing model, the unit prices, the service tiers, and the regional availability.

Azure savings plan for compute: An easy and flexible way to save up to 65% on select compute services, compared to pay-as-you-go prices. The savings plan unlocks lower prices on compute services when you commit to spend a fixed hourly amount for one or three years. You choose whether to pay all upfront or monthly at no extra cost.

Azure reservations: Reserve Azure resources, such as VMs, SQL Database, or Cosmos DB, for one or three years and save up to 72% on your cloud costs. Improve budgeting and forecasting with a single upfront payment that makes it easy to calculate your investments. Or lower your upfront cash outflow with a monthly payment option at no additional cost.

Azure Hybrid Benefit: Apply your existing Windows Server and SQL Server licenses with active Software Assurance or subscriptions to Azure Hybrid Benefit to achieve cost savings. Save up to 85% compared to standard pay-as-you-go rates, and achieve the lowest cost of ownership when you combine Azure Hybrid Benefit, reservation savings, and Extended Security Updates. You can also apply your active Linux subscription to Azure Hybrid Benefit.
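Reservations and Azure Hybrid Benefit reduce different parts of a Windows VM's price: a VM reservation discounts the compute infrastructure, while Azure Hybrid Benefit removes the Windows Server license charge, so the two can be combined. The sketch below compares one always-on VM for a month under each combination. The hourly rates, the 730-hour month, and the 40% reservation discount are hypothetical placeholders, not published prices; use the pricing calculator for real figures.

```python
HOURS_PER_MONTH = 730  # assumed hours in a month for a VM that runs continuously

# Hypothetical hourly rates in USD; not actual Azure prices.
BASE_COMPUTE_RATE = 0.20     # compute infrastructure portion of the VM price
WINDOWS_LICENSE_RATE = 0.09  # Windows Server license portion of the VM price
RESERVATION_DISCOUNT = 0.40  # hypothetical reservation discount on the compute portion


def monthly_vm_cost(reserved: bool, hybrid_benefit: bool) -> float:
    """Monthly cost of one always-on Windows VM under a given combination of offers."""
    # A VM reservation discounts only the compute infrastructure portion.
    compute = BASE_COMPUTE_RATE * ((1 - RESERVATION_DISCOUNT) if reserved else 1)
    # Azure Hybrid Benefit removes the Windows license charge for eligible licenses.
    license_fee = 0.0 if hybrid_benefit else WINDOWS_LICENSE_RATE
    return round((compute + license_fee) * HOURS_PER_MONTH, 2)


for reserved in (False, True):
    for hybrid in (False, True):
        print(f"reserved={reserved!s:5} hybrid_benefit={hybrid!s:5} "
              f"-> ${monthly_vm_cost(reserved, hybrid):,.2f}")
```

With these placeholder numbers the monthly cost falls from about $211.70 at pay-as-you-go to about $87.60 with both offers applied, showing how the savings stack rather than overlap.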

Manage and optimize your Azure investments

If you are already using Azure and you want to optimize your spend for your current Azure workloads, you may want to review your usage and costs, and look for ways to enhance your investments. Azure provides several tools and resources to help you with this process, such as:

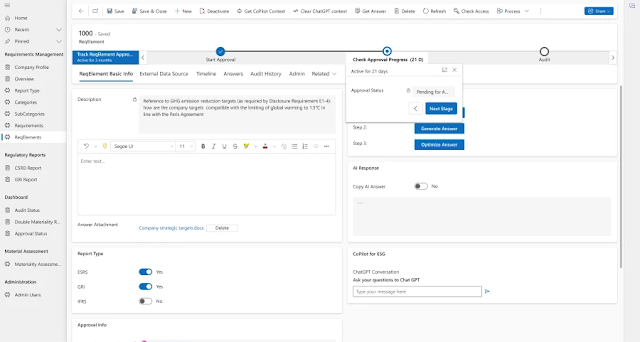

Microsoft Cost Management: You can use Microsoft Cost Management with Copilot to monitor and analyze your Azure spending, and to create and manage budgets and alerts. You can see your current and forecasted costs, your cost trends and anomalies, and your cost breakdown by service, resource group, or subscription. You can also get recommendations on how to optimize your costs.

Azure Advisor: You can use Azure Advisor to get personalized and actionable recommendations on how to improve the performance, security, reliability, and cost-effectiveness of your Azure resources. You can see the potential savings and benefits of each recommendation and apply them with a few clicks.

FinOps on Azure: You can apply FinOps best practices on Azure to empower your organization by fostering a culture of data-driven decision-making, accountability, and cross-team collaboration. This approach helps you maximize the value of your investments and accelerate business growth through improved organizational alignment.
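Budgets in Cost Management can alert you when actual or forecasted spend crosses a percentage of the budget. The sketch below mimics that idea locally to show the logic; it does not call the Cost Management API. Its forecast is a naive run-rate projection (month-to-date spend divided by days elapsed, times days in the month), which is an assumption for illustration: Cost Management uses its own forecasting model. The budget amount and thresholds are hypothetical.

```python
import calendar
from datetime import date


def run_rate_forecast(month_to_date: float, today: date) -> float:
    """Naive forecast: extend the average daily spend so far to the whole month."""
    days_in_month = calendar.monthrange(today.year, today.month)[1]
    return month_to_date / today.day * days_in_month


def budget_alerts(budget: float, month_to_date: float, today: date,
                  thresholds: tuple[int, ...] = (50, 80, 100)) -> list[tuple[str, int]]:
    """Return (kind, threshold %) pairs for every threshold that is crossed.

    'actual' means month-to-date spend has already reached the threshold;
    'forecasted' means only the projected month-end spend reaches it.
    """
    forecast = run_rate_forecast(month_to_date, today)
    alerts = []
    for pct in thresholds:
        limit = budget * pct / 100
        if month_to_date >= limit:
            alerts.append(("actual", pct))
        elif forecast >= limit:
            alerts.append(("forecasted", pct))
    return alerts


# Hypothetical: a $1,000 monthly budget with $450 spent by June 15.
print(budget_alerts(1_000, 450, date(2024, 6, 15)))  # [('forecasted', 50), ('forecasted', 80)]
```

Here the projected month-end spend of $900 crosses the 50% and 80% thresholds before actual spend does, which is the early warning a forecast-based alert is meant to give.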

An example of a company’s cloud journey and pricing needs

To illustrate how a customer can choose the best pricing option and resources for their cloud journey, let’s look at an example. Contoso, a hypothetical digital media company, wants to migrate their infrastructure and build a new OpenAI Chatbot application in Azure. Here’s how they would think about their Azure pricing needs at each stage of their journey:

Considering Azure: Contoso wants to understand how Azure pricing works. They use the free tier to try out some Azure services to test functionality. They also leverage the pay-as-you-go model to explore how some services are billed.

Assess and plan Azure projects: Contoso needs to estimate their project costs. To compare the costs of running on-premises versus on Azure, they enter their on-premises server infrastructure into the TCO calculator. They also use the Azure Architecture Center to learn how to build an OpenAI chatbot following best practices.

Deployment in Azure: Contoso is ready to migrate their environment and deploy the company’s chatbot app, and wants to budget for the specific Azure services needed. They use the product-specific pricing pages and the pricing calculator to estimate their monthly costs based on their expected usage and configuration. They purchase reservations for their stable, predictable VM and Azure Database usage. They already have on-premises Windows Server licenses, so they enroll in Software Assurance, which makes those licenses eligible for Azure Hybrid Benefit, and apply the benefit when deploying their VMs to lower operating costs.

Post-deployment optimization in Azure: After running their environment on Azure for a few months, Contoso wants to review and optimize their workloads. They use Azure Advisor to get personalized, actionable recommendations for improving cost-effectiveness. Acting on these recommendations, they right-size their VMs and purchase an Azure savings plan for compute to cover dynamic compute workloads that may change region or scope.
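The Contoso example pairs reservations with stable usage and a savings plan with dynamic usage. The sketch below, a simplified model, shows why a savings plan tolerates fluctuation: each hour, eligible usage is billed at discounted savings plan prices against the fixed hourly commitment, usage beyond the commitment is billed at pay-as-you-go rates, and unused commitment in that hour is not carried over. It assumes a single hypothetical 25% discount for all usage (real savings plan discounts vary by service, region, and term), and the hourly usage figures and $6 commitment are made up.

```python
def savings_plan_hour(payg_cost: float, commitment: float, discount: float) -> float:
    """Cost of one hour of eligible compute usage under a savings plan (simplified).

    payg_cost:  what the hour's usage would cost at pay-as-you-go rates
    commitment: the fixed hourly commitment, charged every hour regardless of usage
    discount:   hypothetical single discount fraction applied to all usage
    """
    discounted = payg_cost * (1 - discount)
    if discounted <= commitment:
        # All usage fits inside the commitment; the unused remainder is not refunded.
        return commitment
    # The commitment covers this much usage, measured at pay-as-you-go prices...
    covered_payg = commitment / (1 - discount)
    # ...and everything beyond it is billed at pay-as-you-go rates.
    return commitment + (payg_cost - covered_payg)


# Hypothetical pay-as-you-go cost (USD) of a fluctuating workload over four hours.
hourly_payg = [10.0, 10.0, 6.0, 14.0]
commitment, discount = 6.0, 0.25

with_plan = sum(savings_plan_hour(c, commitment, discount) for c in hourly_payg)
print(f"pay-as-you-go: ${sum(hourly_payg):.2f}, with savings plan: ${with_plan:.2f}")
# pay-as-you-go: $40.00, with savings plan: $34.00
```

In the third hour the workload shrinks and part of the commitment goes unused, so under this model it generally makes sense to size the commitment near the workload's baseline rather than its peak.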

Source: microsoft.com