Azure Stream Analytics is a fully managed Platform as a Service (PaaS) offering that powers thousands of mission-critical customer applications with real-time insights. Out-of-the-box integration with numerous other Azure services enables developers and data engineers to build high-performance, hot-path data pipelines within minutes. The key tenets of Stream Analytics include ease of use, developer productivity, and enterprise readiness. Today, we're announcing several new features that further strengthen these tenets. Let's take a closer look at them:

Rollout of these preview features begins November 4th, 2019. Worldwide availability to follow in the weeks after.

Also Read: 70-745: Microsoft Implementing a Software-Defined Datacenter

Online scaling

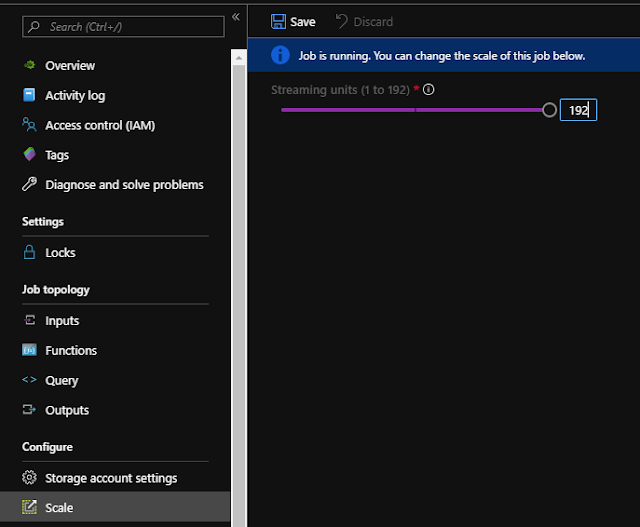

In the past, changing Streaming Units (SUs) allocated for a Stream Analytics job required users to stop and restart. This resulted in extra overhead and latency, even though it was done without any data loss.

With online scaling capability, users will no longer be required to stop their job if they need to change the SU allocation. Users can increase or decrease the SU capacity of a running job without having to stop it. This builds on the customer promise of long-running mission-critical pipelines that Stream Analytics offers today.

Preview Features

Rollout of these preview features begins November 4th, 2019. Worldwide availability to follow in the weeks after.

Also Read: 70-745: Microsoft Implementing a Software-Defined Datacenter

Online scaling

In the past, changing Streaming Units (SUs) allocated for a Stream Analytics job required users to stop and restart. This resulted in extra overhead and latency, even though it was done without any data loss.

With online scaling capability, users will no longer be required to stop their job if they need to change the SU allocation. Users can increase or decrease the SU capacity of a running job without having to stop it. This builds on the customer promise of long-running mission-critical pipelines that Stream Analytics offers today.

Change SUs on a Stream Analytics job while it is running.

C# custom de-serializers

Azure Stream Analytics has always supported input events in JSON, CSV, or AVRO data formats out of the box. However, millions of IoT devices are programmed to emit data in other formats that encode structured data more efficiently while remaining extensible.

Developers can now use Azure Stream Analytics to process data in Protobuf, XML, or any custom format by implementing custom de-serializers in C#. Stream Analytics then uses these de-serializers to decode the events it receives.
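To illustrate the idea, the Python sketch below models what a custom de-serializer does: it reads a raw byte stream in a non-standard format and yields one structured event per record. It is not the Stream Analytics C# API. The class name `PipeDelimitedDeserializer`, the `initialize`/`deserialize` method names, the `Event` schema, and the `deviceId|temperature|timestamp` wire format are all hypothetical, chosen only to show the shape of the task.

```python
import io
from dataclasses import dataclass
from typing import BinaryIO, Iterator


@dataclass
class Event:
    """One structured event produced by the de-serializer (hypothetical schema)."""
    device_id: str
    temperature: float
    timestamp: str


class PipeDelimitedDeserializer:
    """Hypothetical de-serializer for a custom 'deviceId|temperature|timestamp' format."""

    def initialize(self, encoding: str = "utf-8") -> None:
        # One-time setup before any data arrives (for example, loading a schema).
        self.encoding = encoding
        self.errors = 0

    def deserialize(self, stream: BinaryIO) -> Iterator[Event]:
        # Read the raw payload line by line and yield one event per valid record.
        for raw_line in stream:
            line = raw_line.decode(self.encoding).strip()
            if not line:
                continue  # skip blank lines
            parts = line.split("|")
            if len(parts) != 3:
                self.errors += 1  # count malformed records instead of failing the batch
                continue
            device_id, temperature, timestamp = parts
            try:
                temperature_value = float(temperature)
            except ValueError:
                self.errors += 1  # non-numeric temperature field
                continue
            yield Event(device_id, temperature_value, timestamp)


deserializer = PipeDelimitedDeserializer()
deserializer.initialize()
payload = io.BytesIO(
    b"sensor-1|21.5|2019-11-04T10:00:00Z\n"
    b"bad-record\n"
    b"sensor-2|19.0|2019-11-04T10:00:01Z\n"
)
events = list(deserializer.deserialize(payload))
print(events)
```

Counting malformed records rather than raising is a design choice of this sketch; a real de-serializer might instead report errors through whatever diagnostics mechanism the host provides.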

Extensibility with C# custom code

Azure Stream Analytics has traditionally offered a SQL language for performing transformations and computations over streams of events. Although the supported SQL language includes many powerful built-in functions, there are cases where a SQL-like language doesn't provide enough flexibility or tooling to tackle complex scenarios.

Developers creating Stream Analytics modules in the cloud or on IoT Edge can now write or reuse custom C# functions and invoke them directly in the query as User Defined Functions. This enables scenarios such as complex math calculations, importing custom ML models using ML.NET, and programming custom data imputation logic. A full-fidelity authoring experience for these functions is available in Visual Studio.
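As an example of the "complex math" case, a scalar function like the one below, which computes the great-circle distance between two GPS coordinates with the haversine formula, is awkward to express in SQL but straightforward as a UDF. This is a Python illustration of the logic only; in Stream Analytics the function would be written in C# and called from the query. The name `haversine_km` and the mean Earth radius constant are assumptions of this sketch.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; an assumption of this sketch


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in kilometres between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    # Haversine formula: a = sin^2(d_phi/2) + cos(phi1) * cos(phi2) * sin^2(d_lambda/2)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    # c is the angular distance in radians; clamp a to at most 1 to guard against rounding error.
    c = 2 * math.asin(math.sqrt(min(1.0, a)))
    return EARTH_RADIUS_KM * c


# One degree of longitude along the equator.
print(haversine_km(0.0, 0.0, 0.0, 1.0))
```

Because the function is pure (no state between calls), it fits the per-row, scalar shape of a query-level UDF.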

Managed Identity authentication with Power BI

Dynamic dashboarding with Power BI is one of the key scenarios that Stream Analytics helps operationalize for thousands of customers worldwide.

Azure Stream Analytics now offers full support for Managed Identity-based authentication with Power BI for dynamic dashboards. This helps customers better align with their organizational security goals, deploy their hot-path pipelines using Visual Studio CI/CD tooling, and run long-running jobs, since users no longer need to change passwords every 90 days.

Although this new feature will be available immediately, customers can continue to use the Azure Active Directory user-based authentication model.

Stream Analytics on Azure Stack

Azure Stream Analytics is supported on Azure Stack via the IoT Edge runtime. This enables scenarios where compliance or other requirements prevent customers from moving data to the cloud, but they still wish to use Azure technologies to deliver a hybrid data analytics solution at the edge.

Rolling out as a preview beginning January 2020, this capability will let customers analyze data ingested from Event Hubs or IoT Hub on Azure Stack and write the results to Blob storage or a SQL database on the same Azure Stack instance.

Debug query steps in Visual Studio

We've heard a lot of user feedback about the difficulty of debugging the intermediate row set defined in a WITH statement in an Azure Stream Analytics query. Users can now easily preview the intermediate row set on a data diagram when testing locally in Azure Stream Analytics tools for Visual Studio. This can greatly help users break down their query and inspect the results step by step when fixing their code.

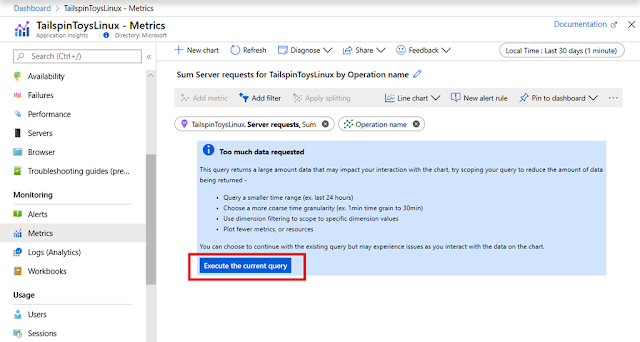

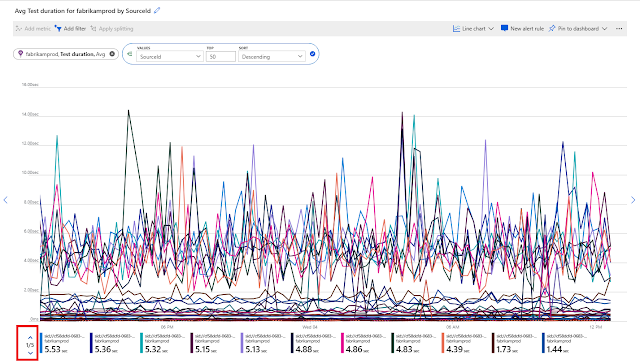
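Conceptually, a query with a WITH clause is a chain of named steps, and this debugging feature lets you see each step's output instead of only the final result. The Python sketch below mimics that idea on a small in-memory input: a filtering step (playing the role of the WITH step) feeds an aggregation step, and every intermediate row set is kept for inspection. The step names, sample rows, and the `run_steps` helper are hypothetical and are not part of the Visual Studio tools; windowing is ignored, so all rows are treated as a single window.

```python
from collections import Counter
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]
Step = Callable[[List[Row]], List[Row]]


def run_steps(source: List[Row], steps: Dict[str, Step]) -> Dict[str, List[Row]]:
    """Run named steps in order, keeping every intermediate row set for inspection."""
    results: Dict[str, List[Row]] = {}
    rows = source
    for name, step in steps.items():  # dicts preserve insertion order (Python 3.7+)
        rows = step(rows)
        results[name] = rows  # snapshot of this step's output, like previewing a WITH step
    return results


def hot_readings(rows: List[Row]) -> List[Row]:
    # Step 1 (the WITH step): keep only readings above 30 degrees.
    return [r for r in rows if r["temperature"] > 30]


def hot_count(rows: List[Row]) -> List[Row]:
    # Step 2 (the final SELECT): count hot readings per device.
    counts = Counter(r["deviceId"] for r in rows)
    return [{"deviceId": d, "hotCount": n} for d, n in sorted(counts.items())]


sample = [
    {"deviceId": "a", "temperature": 35},
    {"deviceId": "b", "temperature": 25},
    {"deviceId": "a", "temperature": 31},
    {"deviceId": "b", "temperature": 40},
]
results = run_steps(sample, {"HotReadings": hot_readings, "Output": hot_count})
for name, rows in results.items():
    print(name, rows)
```

Inspecting `results["HotReadings"]` separately from `results["Output"]` shows whether a wrong final answer comes from the filter or from the aggregation.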

Local testing with live data in Visual Studio Code

When developing an Azure Stream Analytics job, developers have asked to connect to live input so they can visualize the results. This is now possible in Azure Stream Analytics tools for Visual Studio Code, a lightweight, free, and cross-platform editor. Developers can test their query against live data on their local machine before submitting the job to Azure. Each test iteration takes two to three seconds or less on average, which makes for a very efficient development process.

Live Data Testing feature in Visual Studio Code

Private preview for Azure Machine Learning

Real-time scoring with custom Machine Learning models

Azure Stream Analytics now supports high-performance, real-time scoring with custom pre-trained Machine Learning models that are managed by the Azure Machine Learning service and hosted in Azure Kubernetes Service (AKS) or Azure Container Instances (ACI), through a workflow that requires no code at all.

Users can build custom models with popular Python libraries such as scikit-learn, PyTorch, and TensorFlow, and train them anywhere, including Azure Databricks, Azure Machine Learning Compute, and HDInsight. Once the models are deployed to Azure Kubernetes Service or Azure Container Instances, users can surface all of those endpoints within the Stream Analytics job itself: they simply navigate to the functions blade of the job, pick the Azure Machine Learning function option, and tie it to one of the deployments in the Azure Machine Learning workspace.

Advanced configuration options, such as the number of parallel requests sent to the Azure Machine Learning endpoint, will be offered to maximize performance.
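To show why the number of parallel requests matters, the Python sketch below splits a sequence of events into batches and scores them with at most a fixed number of requests in flight at once. It does not call a real Azure Machine Learning endpoint: `fake_endpoint` is a hypothetical stand-in that "scores" values by doubling them, and the `batch_size` and `max_parallel_requests` parameters are illustrative names, not Stream Analytics settings.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence

Scorer = Callable[[Sequence[float]], List[float]]


def fake_endpoint(batch: Sequence[float]) -> List[float]:
    """Hypothetical stand-in for a deployed model endpoint: 'scores' each value by doubling it."""
    return [2.0 * x for x in batch]


def score_stream(events: Sequence[float], scorer: Scorer,
                 batch_size: int = 3, max_parallel_requests: int = 2) -> List[float]:
    """Split events into batches and score them with at most max_parallel_requests in flight."""
    if batch_size < 1 or max_parallel_requests < 1:
        raise ValueError("batch_size and max_parallel_requests must be positive")
    batches = [events[i:i + batch_size] for i in range(0, len(events), batch_size)]
    # The pool size caps how many scorer calls (requests) run concurrently.
    with ThreadPoolExecutor(max_workers=max_parallel_requests) as pool:
        # pool.map returns results in input order, so scores line up with events.
        scored_batches = list(pool.map(scorer, batches))
    return [score for batch in scored_batches for score in batch]


scores = score_stream([1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0], fake_endpoint)
print(scores)
```

Raising `max_parallel_requests` lets more batches be scored concurrently when the endpoint has spare capacity; setting it too high can overload the endpoint, which is the trade-off such a configuration option exposes.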