Azure Cognitive Services brings artificial intelligence (AI) within reach of every developer without requiring machine learning expertise. All it takes is an API call to embed the ability to see, hear, speak, understand, and accelerate decision-making into your apps. Enterprises have used these pre-built and custom AI capabilities to deliver more engaging and personalized intelligent experiences. We’re continuing the momentum from Microsoft Build 2019 by making Personalizer generally available and introducing additional advanced capabilities in the Vision, Speech, and Language categories. With many advancements to share, let’s dive right in.

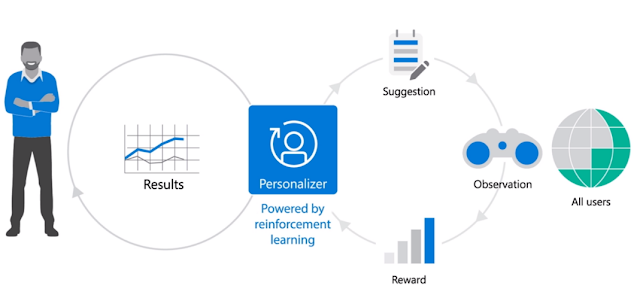

Personalizer: Powering rich user experiences

Winner of this year’s ‘Most Innovative Product’ award at O’Reilly’s Strata Conference, Personalizer is the only AI service on the market that makes reinforcement learning available at scale through easy-to-use APIs. It gives developers a way to create rich, personalized experiences for users, even without deep machine learning expertise.

Giving customers what they want at any given moment is one of the biggest challenges faced by retail, media, and e-commerce businesses today. Whether they apply randomized A/B tests or supervised machine learning, businesses struggle to keep up with delivering unique and relevant experiences to each user. This is where Personalizer comes in. It uses a cutting-edge machine learning technique known as reinforcement learning to explore new options and stay on top of previously unencountered influences on user behavior. This technique allows Personalizer to learn from what’s happening in the world in real time and update the underlying model as frequently as every few minutes. The result is a significant improvement in app usability and user satisfaction. When Xbox implemented Personalizer on its homepage, it saw a 40 percent lift in user engagement.
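The explore-and-learn loop described above can be illustrated with a toy example. This is a minimal sketch that uses a simple epsilon-greedy strategy as a stand-in; it is not Personalizer's actual algorithm or API, and the class name, the `rank` and `reward` methods, the context label, and the click rates are all assumptions made up for illustration.

```python
import random
from collections import defaultdict


class EpsilonGreedyRanker:
    """Toy stand-in for a rank-and-reward loop; not Personalizer's algorithm."""

    def __init__(self, epsilon=0.1, seed=0):
        self.epsilon = epsilon              # fraction of calls spent exploring
        self.rng = random.Random(seed)      # seeded so runs are repeatable
        self.counts = defaultdict(int)      # rewards seen per (context, action)
        self.values = defaultdict(float)    # running mean reward per (context, action)

    def rank(self, context, actions):
        """Pick one action: usually the best known, occasionally a random one."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(actions)
        return max(actions, key=lambda a: self.values[(context, a)])

    def reward(self, context, action, score):
        """Fold an observed reward (0.0 to 1.0) into the running mean."""
        key = (context, action)
        self.counts[key] += 1
        self.values[key] += (score - self.values[key]) / self.counts[key]


def simulate(rounds=2000):
    """Hypothetical homepage where evening users click 'movies' most often."""
    ranker = EpsilonGreedyRanker(epsilon=0.1, seed=42)
    click_rate = {"games": 0.2, "movies": 0.6, "news": 0.1}  # made-up rates
    env = random.Random(7)
    for _ in range(rounds):
        choice = ranker.rank("evening", list(click_rate))
        clicked = 1.0 if env.random() < click_rate[choice] else 0.0
        ranker.reward("evening", choice, clicked)
    return ranker


ranker = simulate()
best = max(["games", "movies", "news"], key=lambda a: ranker.values[("evening", a)])
print(best)
```

The occasional random choice is what lets the loop discover that an option it initially ignored performs better, which is the behavior that fixed A/B tests and purely supervised models lack.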

Form Recognizer: Increase efficiency with automated text extraction and feedback loop

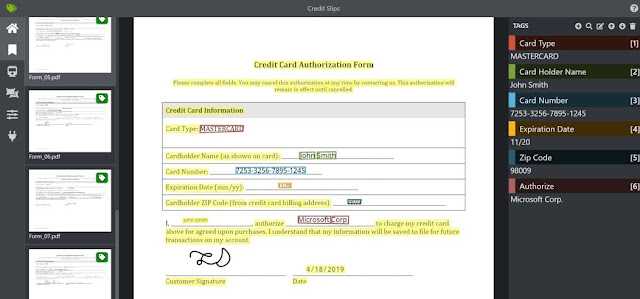

Businesses often rely on a variety of documents that can be hard to read; these documents are not always cleanly printed, and many include handwritten text. Businesses including Chevron use Form Recognizer to accelerate document processing through automatic information extraction from printed forms. This frees their employees to focus on more challenging and higher-value tasks.

Form Recognizer extracts key-value pairs, tables, and text from documents including W-2 tax statements, oil and gas drilling well reports, completion reports, invoices, and purchase orders. Today we are announcing the ability to provide human input by labeling forms and training a custom model for even more accurate data extraction. Users will be able to label forms to extract the values of interest. This feature enables Form Recognizer to support any type of form, including values without keys, keys under values, tilted forms, photos of forms, and more. Starting with just five forms, users can train a model tailored to their use case with high-quality results. A new user experience helps you get started quickly: select the values of interest, label them, and train your custom model.

In addition, Form Recognizer can now train a single model without labels for all the different types of forms, and supports training on large datasets and analyzing large documents with the new Async API. This enables customers to train a single model for different types of invoices, purchase orders, and more, without the need to classify the documents in advance.

We have also enhanced our pre-built receipts capabilities with accuracy improvements, new fields for tips and receipt types (itemized, credit card slip, gas, parking, other), and line item extraction detailing all the different items in the receipt. Finally, we have improved the accuracy of both our text recognition, enabling extraction of high-quality text from forms, and our table extraction.

Sogeti, part of Capgemini, is harnessing these new Form Recognizer capabilities. As Arun Kumar Sahu, the Manager of AI ML for Sogeti, notes:

“We are working on a document classification and predictive solution for one of the largest automobile auction companies in the US, and needed an efficient way to extract information from various automobile related documents (PDF or image). Form Recognizer was quick and easy to train and host, was cost effective, handled different document formats, and the output was amazing. The new labelling features made it very effective to customize key value pair extraction.”

Speech: Enable more natural interactions and accelerate productivity with advanced speech capabilities

Businesses want to be able to modernize and enable more seamless, natural interactions with their customers. Our latest advancements in speech allow customers to do just that.

At Microsoft Ignite 2018, we introduced our neural text-to-speech capability, which uses deep neural networks to enable natural-sounding speech and reduces listening fatigue for users interacting with AI systems. Neural text-to-speech can be used to make interactions with chatbots and virtual assistants more natural and engaging, convert digital texts such as e-books into audiobooks, and enhance in-car navigation systems. We’re excited to build upon these advancements with the Custom Neural Voice capability, which enables customers to build a unique brand voice, starting from just a few minutes of training audio. The Custom Neural Voice capability can enable scenarios such as customer support provided by a company’s branded character, interactive lesson plans or guided museum tours, and voice assistive technologies. The capability also supports generating long-form content, including audiobooks.

The Beijing Hongdandan Education and Culture Exchange Center is dedicated to using audio to create accessible products for those with visual impairments and improving the lives of the visually impaired by providing aids such as audiobooks. Hongdandan is using the Custom Neural Voice capability to produce audiobooks based on the voice of Lina, who lost her sight at the age of 10. Lina is now a trainer at the Hongdandan Service Center, using her voice to teach others who are visually impaired to communicate well.

With the rapid pace at which business is moving today, remembering all the details from your last important meeting and tracking next steps and key deadlines can be a real challenge. Quickly and accurately transcribing calls can help various stakeholders stay on the same page by capturing critical details and making it easy to search and review topics you discussed. In customer support scenarios, being able to hear and understand your customers and keep an accurate record of information is critical for tracking customer requirements and enabling broader analysis.

However, accurately transcribing organization-specific terms like product names, technical terms, and people's names poses another barrier. With Custom Speech, you can tailor speech recognition models based on your own data so that your unique terms are accurately captured. Simply upload your audio to train a custom model. Now, you can also optimize speech recognition on your organization-specific terms by automatically generating custom models using your Office 365 data in a secure and compliant fashion. With this opt-in feature, organizations using Office 365 can more accurately transcribe company terminology, whether in internal meetings or on customer calls. The organization-wide language model is built using only conversations and documents from public groups that everyone in the organization can access.

Additional new features such as Custom Commands, Custom Speech and Voice containers, Speech Translation with automatic language identification, and Direct Line Speech channel integration with Bot Framework are making it easier to quickly embed advanced speech capabilities into your apps.

Language: Extract deeper insights from customer feedback and text documents

There is a multitude of valuable customer insights captured today, whether in social media, customer reviews, or discussion forums. The challenge is being able to extract insights from that data, so businesses can act fast to improve customer service and meet the needs of the market. With the Text Analytics Sentiment Analysis capability, businesses can easily detect positive, neutral, negative, and mixed sentiment in content, enabling them to keep an ongoing pulse on customer satisfaction, better engage their customers, and build customer loyalty. The latest release of the Sentiment Analysis capability offers greater accuracy in sentiment scoring, as well as the ability to detect sentiment for both an entire document and its individual sentences.
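To make the relationship between sentence-level and document-level sentiment concrete, here is a minimal sketch of how per-sentence labels could roll up into the four document labels named above. The roll-up rule, the `SentenceSentiment` type, and the hand-assigned labels are assumptions for illustration only; they do not call the service and may not match how it scores real text.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SentenceSentiment:
    text: str
    label: str  # "positive", "neutral", or "negative" (hand-assigned here)


def document_sentiment(sentences: List[SentenceSentiment]) -> str:
    """Roll sentence labels up to one document label (illustrative rule only)."""
    labels = {s.label for s in sentences}
    if "positive" in labels and "negative" in labels:
        return "mixed"      # conflicting sentences in one document
    if "positive" in labels:
        return "positive"
    if "negative" in labels:
        return "negative"
    return "neutral"        # nothing but neutral sentences, or no sentences


review = [
    SentenceSentiment("The checkout was fast.", "positive"),
    SentenceSentiment("Delivery took two weeks.", "negative"),
    SentenceSentiment("I ordered on Monday.", "neutral"),
]
print(document_sentiment(review))  # mixed
```

Keeping the sentence-level labels alongside the document label is what lets a business see which part of a review drove a mixed result.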

Another challenge of extracting information from your data is being able to take unstructured natural language text and identify occurrences of entities such as people, locations, organizations, and more. Text Analytics is expanding entity type support to more than 100 named entity types, making it easier than ever to extract meaningful information and analyze relationships from raw text and between terms. Additionally, customers will now be able to detect and extract more than 80 kinds of personally identifiable information in English language text documents.

We are also adding several new capabilities to Language Understanding Intelligent Service (LUIS) that enable developers to build more sophisticated conversational models. The new capabilities make it possible to handle more complex requests from users. For example, if you let customers truly use natural language, they might order ‘Two Burgers with no onions and replace buns with lettuce wraps’. Support for hierarchical entities and model decomposition lets developers build language models that reflect the way humans speak. In addition, we are adding more regions and expanding the human languages supported in LUIS with the addition of Hindi and Arabic.
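One way to picture a hierarchical entity is as a nested structure in which an order contains an item, and the item contains its modifications. The sketch below decomposes the burger order from the example by hand; the type names and fields are hypothetical and do not represent the LUIS schema or its response format.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Modification:
    action: str             # "remove" or "replace"
    ingredient: str
    replacement: str = ""   # only used when action == "replace"


@dataclass
class OrderItem:
    name: str
    quantity: int
    modifications: List[Modification] = field(default_factory=list)


# Hand-built decomposition of:
# "Two Burgers with no onions and replace buns with lettuce wraps"
order = OrderItem(
    name="burger",
    quantity=2,
    modifications=[
        Modification(action="remove", ingredient="onions"),
        Modification(action="replace", ingredient="buns", replacement="lettuce wraps"),
    ],
)


def describe(item: OrderItem) -> str:
    """Flatten the nested entity back into a short summary string."""
    parts = [f"{item.quantity} x {item.name}"]
    for m in item.modifications:
        if m.action == "replace":
            parts.append(f"replace {m.ingredient} with {m.replacement}")
        else:
            parts.append(f"no {m.ingredient}")
    return "; ".join(parts)


print(describe(order))  # 2 x burger; no onions; replace buns with lettuce wraps
```

Because each modification is attached to its parent item, an app can tell that "no onions" applies to the burgers and not to some other item in the same order.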

Enterprise Ready: Azure Virtual Network for enhanced data security

One of the most important considerations when choosing an AI service is security and regulatory compliance. Can you trust that AI workloads are processed with the high standards and safeguards that you have come to expect from hardened, durable software systems? Azure Cognitive Services offers over 70 certifications. Today we are offering Virtual Network support as part of Cognitive Services to ensure maximum security for sensitive data. This service is also being made available in a container that can run in a customer’s Azure subscription or on-premises.
