Proximity placement groups are now generally available. This logical grouping construct is particularly useful for workloads that require low latency: it ensures that your IaaS resources (virtual machines, or VMs) are physically located close to each other. This release also adds new features and best practices for success.

New features

Since preview, we’ve added more capabilities based on your great feedback:

More regions, more clouds

Starting now, proximity placement groups are available in all Azure public cloud regions (excluding Central India).

Portal support

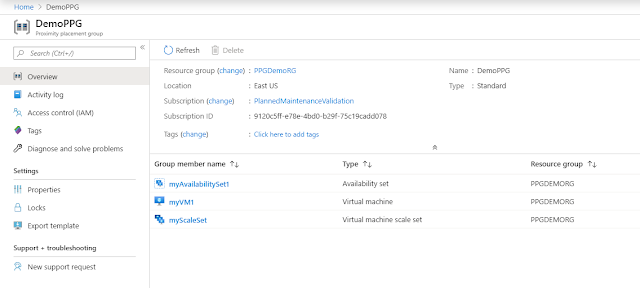

Proximity placement groups are available in the Azure portal. You can create a proximity placement group and use it when creating your IaaS resources.

Move existing resources to (and from) proximity placement groups

You can now use the Azure portal to move existing resources into (and out of) a proximity placement group. This configuration operation requires you to stop (deallocate) all VMs in your scale set or availability set prior to assigning them to a proximity placement group.

Supporting SAP applications

One of the common use cases for proximity placement groups is with multi-tiered, mission-critical applications such as SAP.

Measure virtual machine latency in Azure

You may need to measure the latency between components in your service, such as the application and database tiers. We’ve documented the steps and tools for testing VM network latency in Azure.
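For a quick first check before reaching for the documented tools, a small script can time round trips between two VMs. The following is a minimal sketch, not the tooling referenced above: it assumes you run a simple TCP echo service on the target VM, and the function name `measure_tcp_rtt` and its parameters are hypothetical names chosen for illustration.

```python
import socket
import statistics
import time


def measure_tcp_rtt(host: str, port: int, samples: int = 100,
                    payload: bytes = b"x") -> dict:
    """Time round trips to a TCP echo service (assumed to be running on host:port)."""
    rtts_ms = []
    with socket.create_connection((host, port), timeout=5) as sock:
        # Disable Nagle's algorithm so the small payload is sent immediately.
        sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
        for _ in range(samples):
            start = time.perf_counter()
            sock.sendall(payload)
            # Read until the full payload has been echoed back.
            received = 0
            while received < len(payload):
                chunk = sock.recv(len(payload) - received)
                if not chunk:
                    raise ConnectionError("peer closed the connection")
                received += len(chunk)
            rtts_ms.append((time.perf_counter() - start) * 1000.0)
    return {
        "samples": len(rtts_ms),
        "min_ms": min(rtts_ms),
        "median_ms": statistics.median(rtts_ms),
        "max_ms": max(rtts_ms),
    }
```

Running this from the application VM against the database VM, once inside and once outside a proximity placement group, gives a rough comparison. Application-level timing in Python adds its own overhead, so treat the numbers as relative rather than absolute.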

Learn from our experience

We’ve been monitoring adoption of proximity placement groups and analyzing the failures customers encountered during the preview, and we’ve captured best practices for using them.

Best practices

Here are some of the best practices we developed with your help:

- For the lowest latency, use proximity placement groups together with accelerated networking. Accelerated networking enables single root I/O virtualization (SR-IOV) to a VM, greatly improving its networking performance. This high-performance path removes the host from the data path, reducing latency, jitter, and CPU utilization. For more information, see Create a Linux virtual machine with Accelerated Networking or Create a Windows virtual machine with Accelerated Networking.

- When deploying a proximity placement group with VMs from different families and SKUs, try to deploy them all with a single template. This increases the probability that all of your VMs deploy successfully.

- A proximity placement group is assigned to a data center when the first resource (VM) is deployed and released once the last resource is deleted or stopped. If you stop all your resources (for example, to save costs), you may land in a different data center when you bring them back. Reduce the chance of allocation failures by starting with your largest VM, which could be memory optimized (M, Msv2), storage optimized (Lsv2), or GPU enabled.

- If you are scripting your deployment using PowerShell, the CLI, or the SDK, you may get the allocation error OverconstrainedAllocationRequest. In this case, stop (deallocate) all the existing VMs and change the sequence in the deployment script to begin with the VM SKUs or sizes that failed.

- When reusing an existing proximity placement group from which VMs were deleted, wait for the deletion to fully complete before adding VMs to it.

- You can use a proximity placement group alongside availability zones. While a proximity placement group can’t span zones, this combination is useful when you care about latency within a zone, such as an active-standby deployment where each side is in a separate zone.

- Availability sets and Virtual Machine Scale Sets do not provide any guaranteed latency between virtual machines. Historically, availability sets were deployed in a single data center, but this assumption no longer holds. Therefore, using proximity placement groups is useful even if you have a single-tier application deployed in a single availability set or scale set.

- Use proximity placement groups with the new Azure Virtual Machine Scale Sets features (now in preview), which support heterogeneous virtual machine sizes and families in a single scale set, high availability with fault domains in a single availability zone, custom images with Shared Image Gallery, and more.
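The two ordering practices above (start with your largest VM, and after an OverconstrainedAllocationRequest error begin with the sizes that failed) can be sketched as a small planning step in a deployment script. This is an illustrative sketch only: `VmSpec`, `order_largest_first`, and `reorder_after_failure` are hypothetical names, the sizes and memory figures are sample values, and ranking by memory is just one assumed proxy for "largest". The actual deployment and deallocation calls are left to your own tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VmSpec:
    name: str
    size: str         # VM size name; sample values, for illustration only
    memory_gib: int   # assumed proxy for how hard the VM is to place


def order_largest_first(vms):
    """Return VMs sorted so the largest (by memory) is deployed first."""
    return sorted(vms, key=lambda vm: vm.memory_gib, reverse=True)


def reorder_after_failure(vms, failed_sizes):
    """Move VMs whose size failed allocation to the front, keeping relative order.

    Call this after deallocating the existing VMs in the group, then redeploy
    in the returned order.
    """
    failed = [vm for vm in vms if vm.size in failed_sizes]
    rest = [vm for vm in vms if vm.size not in failed_sizes]
    return failed + rest


if __name__ == "__main__":
    plan = [
        VmSpec("app", "Standard_D4s_v3", 16),
        VmSpec("db", "Standard_M128s", 2048),
        VmSpec("cache", "Standard_L8s_v2", 64),
    ]
    print([vm.name for vm in order_largest_first(plan)])
    print([vm.name for vm in reorder_after_failure(plan, {"Standard_L8s_v2"})])
```

Keeping the ordering logic separate from the deployment calls makes it easy to rerun the same plan with a different sequence when an allocation fails.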
